Low code coverage in our Spark pipelines was caused by missing unit test cases for sources and sinks. Most of our Spark Scala pipelines had code coverage below 70%, and we wanted it to be in the range of 85–95%.

Spark supports multiple batch and streaming sources and sinks, such as:

- CSV

- JSON

- Parquet

- ORC

- Text

- JDBC/ODBC connections

- Kafka

The difficulty of writing test cases for Spark reads and writes brings down overall code coverage and, with it, the overall quality score of the pipeline. Because reads and writes are present in every data pipeline, the corresponding lines of code make up a significant percentage of the total.

Let’s look at a step-by-step guide to improving the code quality measures of Spark Scala pipelines.

Below is a summary of mocking concepts:

Mocking in unit tests lets us test the behavior and logic of our own code without relying on real external dependencies.

A mock is used for behavior verification; it gives full control over the object and its methods.

A stub holds a small, limited set of data that is returned during unit test cases.

A spy is used for partial mocking, where only selected behavior is mocked and the rest of the object keeps its original behavior.
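The three concepts above can be illustrated with a minimal sketch. It assumes Mockito is on the test classpath and uses plain Java collections rather than Spark classes; the object name `MockingConceptsSketch` is made up for illustration.

```scala
import java.util.{ArrayList => JArrayList, List => JList}
import org.mockito.Mockito.{mock, spy, verify, when}

// Hypothetical demo object; not part of the original pipeline code.
object MockingConceptsSketch {
  def run(): (String, Int, String) = {
    // Mock: the whole object is under test control.
    val mockList = mock(classOf[JList[String]])
    // Stub: a canned value returned during the test.
    when(mockList.get(0)).thenReturn("stubbed")
    val fromMock = mockList.get(0)
    // Behavior verification: confirm that get(0) was actually called.
    verify(mockList).get(0)

    // Spy: wraps a real object; only size() is overridden below.
    // The explicit type argument is a precaution to help Scala pick the spy(T) overload.
    val spyList = spy[JArrayList[String]](new JArrayList[String]())
    spyList.add("real")
    when(spyList.size()).thenReturn(100)

    // get(0) still runs the real ArrayList code, while size() is mocked.
    (fromMock, spyList.size(), spyList.get(0))
  }
}
```

Calling `MockingConceptsSketch.run()` returns the stubbed value from the mock, the overridden size from the spy, and the real element stored in the spied list.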

Let’s get into the Spark specifics.

Maven-based dependencies

<a href="https://medium.com/media/67e46e98e30692a907e22fee53c7e320/href">https://medium.com/media/67e46e98e30692a907e22fee53c7e320/href</a>

Let’s use an example of a Spark class where the source is Hive and the sink is JDBC.

DummySource.scala reads a table from Hive, applies a filter, and then writes to a JDBC table.

We skip the transform step, where other logic is applied, because that can be tested using a stub.

<a href="https://medium.com/media/c1a7d896c30aeb0ea7784887a5f56d41/href">DummySource.scala · GitHub</a>

We do not want unit test cases that actually connect to the source or sink, so here we mock the read and write behavior of Spark.

Before we look at the solution, it helps to get familiar with Spark’s DataFrameReader and DataFrameWriter.

Since we are going to mock these two classes, it is important to know their methods and behaviors:

- Spark 3.2.1 ScalaDoc - org.apache.spark.sql.DataFrameReader

- Spark 3.2.1 ScalaDoc - org.apache.spark.sql.DataFrameWriter

Mocking Read

<a href="https://medium.com/media/239def94c69868e3baebd573c5548440/href">oneLIne.scala · GitHub</a>

The objective here is to avoid connecting to the source and still get a DataFrame, so that our filter logic is unit tested. We start by mocking the SparkSession.

<a href="https://medium.com/media/8ee1a31057772acfabc1a4f2eac49cbe/href">one.scala · GitHub</a>

SparkSession.read returns org.apache.spark.sql.DataFrameReader, so next we mock DataFrameReader.

<a href="https://medium.com/media/64a8d05ba77ff81493b05a41f660cdea/href">two.scala · GitHub</a>

We now have all the required mock objects, and it is time to control their behavior. We need mockSpark to return mockDataFrameReader when spark.read is invoked.

<a href="https://medium.com/media/376d0ab841f9c4306af07811dfac1329/href">three.scala · GitHub</a>

One more behavior has to be added: a DataFrame must be returned when spark.read.table is invoked. For that we create a stub object.

<a href="https://medium.com/media/4cd8dbb0b0bb49cec0bd2a14797b941f/href">four.scala · GitHub</a>

Now let’s add the required behavior.

<a href="https://medium.com/media/1b27bd776731364bca16a1aba8ccc121/href">five.scala · GitHub</a>

Let us complete our test by adding an assertion.

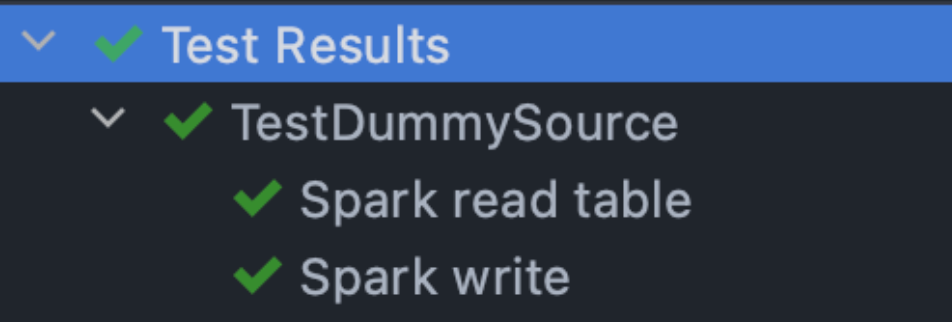

<a href="https://medium.com/media/466f48888065d3693cfead77bbb3afc4/href">TestRead.scala · GitHub</a>

We have completed our unit test case by mocking the Hive read for Spark.
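The read-mocking steps above can be pulled together in one place. This is a minimal sketch, not the original gist: `HiveReadSketch`, the table name `db.dummy_table`, the `status` column, and the filter condition are all hypothetical stand-ins for the read-and-filter part of DummySource.scala. It assumes Spark SQL and Mockito are on the test classpath, and it uses a local SparkSession only to build the stub DataFrame.

```scala
import org.apache.spark.sql.{DataFrame, DataFrameReader, SparkSession}
import org.apache.spark.sql.functions.col
import org.mockito.Mockito.{mock, verify, when}

// Hypothetical code under test: read a Hive table and apply a filter.
object HiveReadSketch {
  def readActive(spark: SparkSession, table: String): DataFrame =
    spark.read.table(table).filter(col("status") === "ACTIVE")
}

object TestReadSketch {
  def run(): Long = {
    // A real local session, used only to create the stub DataFrame.
    val localSpark = SparkSession.builder()
      .master("local[1]")
      .appName("read-mock-sketch")
      .getOrCreate()
    import localSpark.implicits._

    // Mock: the session and the reader it hands out.
    val mockSpark = mock(classOf[SparkSession])
    val mockDataFrameReader = mock(classOf[DataFrameReader])

    // Stub: a small in-memory DataFrame standing in for the Hive table.
    val stubDf = Seq((1, "ACTIVE"), (2, "INACTIVE"), (3, "ACTIVE")).toDF("id", "status")

    // Behavior: spark.read returns the mock reader, and table(...) returns the stub.
    when(mockSpark.read).thenReturn(mockDataFrameReader)
    when(mockDataFrameReader.table("db.dummy_table")).thenReturn(stubDf)

    // Exercise the code under test; no Hive connection is made.
    val result = HiveReadSketch.readActive(mockSpark, "db.dummy_table")

    // Assertion inputs: the reader was used, and the filter kept the ACTIVE rows.
    verify(mockDataFrameReader).table("db.dummy_table")
    result.count()
  }
}
```

In a real suite the body of `run()` would sit inside a test method of whichever framework the project uses, with the returned count checked against the expected number of filtered rows.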

Mocking Write

We will build on what we have learned so far. Start with the mocks.

Now add the behavior.

<a href="https://medium.com/media/5d869f08d09fc91a4ac31a8e809b8dfc/href">seven.scala · GitHub</a>

doNothing is used for void methods.

<a href="https://medium.com/media/6241980e5a71c25f46eac5d83cbfdbf8/href">TestSparkWrite.scala · GitHub</a>
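A write test along the same lines might look like the sketch below. It is an illustration under assumptions rather than the original gist: `JdbcWriteSketch`, the JDBC URL, and the table name are hypothetical, and the DataFrame itself is mocked so that `df.write` can return a mock writer. Because `DataFrameWriter` is declared `final` in Spark, this assumes Mockito's inline mock maker is enabled (the `mockito-inline` artifact on Mockito 2 to 4, or the default in Mockito 5).

```scala
import java.util.Properties
import org.apache.spark.sql.{DataFrame, DataFrameWriter, Row, SaveMode}
import org.mockito.Mockito.{doNothing, mock, verify, when}

// Hypothetical code under test: append a DataFrame to a JDBC table.
object JdbcWriteSketch {
  def write(df: DataFrame, url: String, table: String, props: Properties): Unit =
    df.write.mode(SaveMode.Append).jdbc(url, table, props)
}

object TestWriteSketch {
  def run(): Boolean = {
    // Illustrative connection details; nothing ever connects to them.
    val url = "jdbc:postgresql://localhost:5432/dummy"
    val table = "dummy_table"
    val props = new Properties()

    // Mock: the DataFrame and the writer it hands out.
    val mockDf = mock(classOf[DataFrame])
    val mockWriter = mock(classOf[DataFrameWriter[Row]])

    // Behavior: chain the builder calls back to the mock writer.
    when(mockDf.write).thenReturn(mockWriter)
    when(mockWriter.mode(SaveMode.Append)).thenReturn(mockWriter)
    // jdbc(...) returns Unit (void), so it is stubbed with doNothing.
    doNothing().when(mockWriter).jdbc(url, table, props)

    JdbcWriteSketch.write(mockDf, url, table, props)

    // Assertion: the write was requested with the expected mode and target.
    verify(mockWriter).mode(SaveMode.Append)
    verify(mockWriter).jdbc(url, table, props)
    true
  }
}
```

`verify` throws if the expected call did not happen, so reaching the final `true` means both interactions were observed.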

Kafka Stream Mocking

Streaming sources and sinks need a different set of classes to be mocked: org.apache.spark.sql.streaming.DataStreamReader and org.apache.spark.sql.streaming.DataStreamWriter.

Here is the sample test case using the above steps:

- Mock

- Behavior

- Assertion
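The mock, behavior, and assertion steps for a Kafka stream could be sketched as follows. `KafkaPipelineSketch`, the broker address, and the topic names are hypothetical, and a small batch DataFrame stands in for the streaming source. `DataStreamReader` and `DataStreamWriter` are `final` classes, so this again assumes Mockito's inline mock maker is enabled.

```scala
import org.apache.spark.sql.{DataFrame, Row, SparkSession}
import org.apache.spark.sql.streaming.{DataStreamReader, DataStreamWriter, StreamingQuery}
import org.mockito.ArgumentMatchers.anyString
import org.mockito.Mockito.{mock, verify, when}

// Hypothetical code under test; option values are illustrative.
object KafkaPipelineSketch {
  def readTopic(spark: SparkSession, servers: String, topic: String): DataFrame =
    spark.readStream.format("kafka")
      .option("kafka.bootstrap.servers", servers)
      .option("subscribe", topic)
      .load()
      .selectExpr("CAST(value AS STRING) AS value")

  def writeTopic(df: DataFrame, servers: String, topic: String): StreamingQuery =
    df.writeStream.format("kafka")
      .option("kafka.bootstrap.servers", servers)
      .option("topic", topic)
      .start()
}

object TestKafkaSketch {
  def run(): (Long, Boolean) = {
    // A real local session, used only to build the stub DataFrame.
    val localSpark = SparkSession.builder()
      .master("local[1]")
      .appName("kafka-mock-sketch")
      .getOrCreate()
    import localSpark.implicits._

    // Mock
    val mockSpark = mock(classOf[SparkSession])
    val mockReader = mock(classOf[DataStreamReader])
    val mockDf = mock(classOf[DataFrame])
    val mockWriter = mock(classOf[DataStreamWriter[Row]])
    val mockQuery = mock(classOf[StreamingQuery])

    // Behavior: builder methods return the same mock so the chain keeps working.
    val stubDf = Seq("a", "b").toDF("value")
    when(mockSpark.readStream).thenReturn(mockReader)
    when(mockReader.format("kafka")).thenReturn(mockReader)
    when(mockReader.option(anyString(), anyString())).thenReturn(mockReader)
    when(mockReader.load()).thenReturn(stubDf)
    when(mockDf.writeStream).thenReturn(mockWriter)
    when(mockWriter.format("kafka")).thenReturn(mockWriter)
    when(mockWriter.option(anyString(), anyString())).thenReturn(mockWriter)
    when(mockWriter.start()).thenReturn(mockQuery)

    // Assertion inputs: rows read from the stub, and the query returned by start().
    val rows = KafkaPipelineSketch.readTopic(mockSpark, "localhost:9092", "in").count()
    val query = KafkaPipelineSketch.writeTopic(mockDf, "localhost:9092", "out")
    verify(mockWriter).start()
    (rows, query eq mockQuery)
  }
}
```

No Kafka broker is contacted: the read side runs its projection on the stub rows, and the write side only records that `start()` was called on the mock writer.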

Conclusion

Mocking helped us achieve greater than 80% code coverage, and we recommend it for all JVM-based Spark pipelines.

Happy Learning

Spark: Mocking Read, ReadStream, Write and WriteStream was originally published in Walmart Global Tech Blog on Medium.