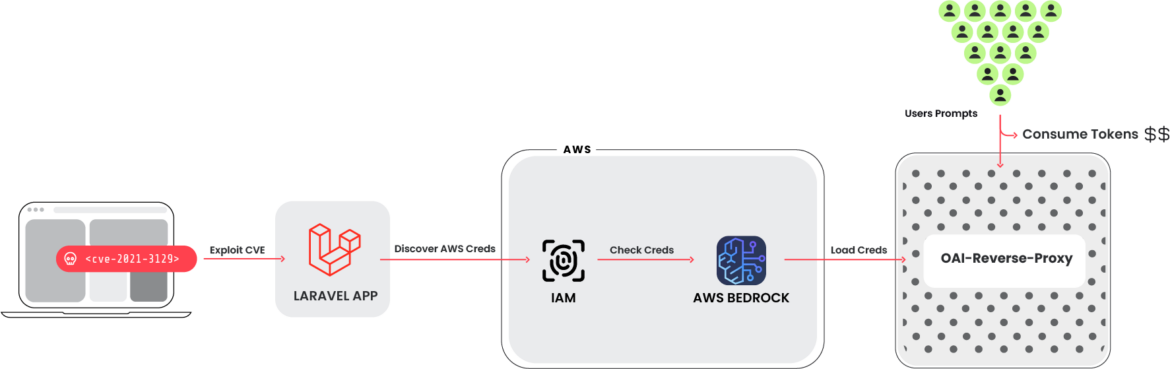

The Sysdig Threat Research Team (TRT) recently observed a new attack, known as LLMjacking, that leveraged stolen cloud credentials to target 10 cloud-hosted large language model (LLM) services. The credentials were obtained from a system running a vulnerable version of Laravel (CVE-2021-3129), which is a popular target. Attacks against LLM-based Artificial Intelligence (AI) systems have been discussed often, but mostly around prompt abuse and altering training data. Attackers have other ideas about abusing these systems, including selling access while the victim pays the bill.

Once initial access was obtained, they exfiltrated cloud credentials and pivoted to the cloud environment, where they attempted to access local LLM models hosted by cloud providers; in this instance, a local Claude (v2/v3) LLM model from Anthropic was targeted. If left unchecked, this type of attack could result in over $46,000 of expenses per day for the victim.

The end goal of this attack remains unclear, but we discovered evidence of a reverse proxy for LLMs being used to provide access to the compromised accounts, suggesting a financial motivation. Another possibility is the access could be leveraged in order to extract LLM training data.

Additional Targets

We were able to discover the tools that were generating the requests used to invoke the models during the attack. This revealed a broader script that was able to check credentials for 10 different AI services in order to learn which were useful for their purposes. These services include:

- AI21 Labs, Anthropic, AWS Bedrock, Azure, ElevenLabs, MakerSuite, Mistral, OpenAI, OpenRouter, and GCP Vertex AI

The attackers are looking to gain access to a large number of LLM models across different services. No legitimate LLM queries were actually run during the verification phase. Instead, just enough was done to figure out what the credentials were capable of and what quotas applied. Logging settings are also queried where possible. This is done to avoid detection when using the compromised credentials to run their prompts.

Background

Hosted LLM Models

All major cloud providers, including Azure Machine Learning, GCP’s Vertex AI, and AWS Bedrock, now host large language model (LLM) services. These platforms provide developers with easy access to a variety of popular models used in LLM-based AI. As illustrated in the screenshot below, the user interface is designed for simplicity, enabling developers to quickly start building applications.

These models, however, are not enabled by default. Instead, a request needs to be submitted to the cloud vendor in order to run them. For some models, it is an automatic approval; for others, like third-party models, a small form must be filled out. Once a request is made, AWS usually enables access pretty quickly. As was reported in a previous article by Invictus, the attacker requested production Simple Email Service (SES) access to be enabled and it was approved. The requirement to make a request is often more of a speed bump for attackers rather than a blocker, and shouldn’t be considered a security mechanism.

Once enabled and permissions are set up, a simple command like this can use the model with a given prompt.

aws bedrock-runtime invoke-model --model-id anthropic.claude-v2 --body '{"prompt": "\n\nHuman: story of two dogs\n\nAssistant:", "max_tokens_to_sample" : 300}' --cli-binary-format raw-in-base64-out invoke-model-output.txt
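The same call can be made from Python. The sketch below is only an illustration: it assumes the boto3 SDK is installed and that credentials with access to the model are configured, and the helper names `build_claude_v2_body` and `invoke_claude_v2` are hypothetical, not taken from any tool seen in the attack.

```python
import json


def build_claude_v2_body(prompt: str, max_tokens: int = 300) -> str:
    """Build the JSON request body in the same format as the CLI example."""
    return json.dumps({
        "prompt": f"\n\nHuman: {prompt}\n\nAssistant:",
        "max_tokens_to_sample": max_tokens,
    })


def invoke_claude_v2(prompt: str, region: str = "us-east-1") -> str:
    """Call InvokeModel and return the completion text.

    Needs boto3 and valid AWS credentials; the region default is an assumption.
    """
    import boto3  # imported lazily so the body builder works without the SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId="anthropic.claude-v2",
        contentType="application/json",
        accept="application/json",
        body=build_claude_v2_body(prompt),
    )
    # The response body is a stream containing a JSON document.
    return json.loads(response["body"].read())["completion"]
```

Calling `invoke_claude_v2("story of two dogs")` would correspond to the CLI command, with the completion returned as a string instead of written to a file.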

Claude LLM

Created by Anthropic, Claude is a series of LLM models similar to GPT. The latest version, Claude 3, was released on March 4, 2024. It has three flavors increasing in requirements and capabilities: Haiku, Sonnet, and Opus. Claude shares many of the same capabilities as other LLMs, such as code generation, content analysis and generation, and math. According to several benchmarks, it is an advancement over GPT4 and other competitors.

We observed the attackers calling the InvokeModel API with the Claude v2, v3 Sonnet, and v3 Haiku models, which are available on AWS Bedrock.

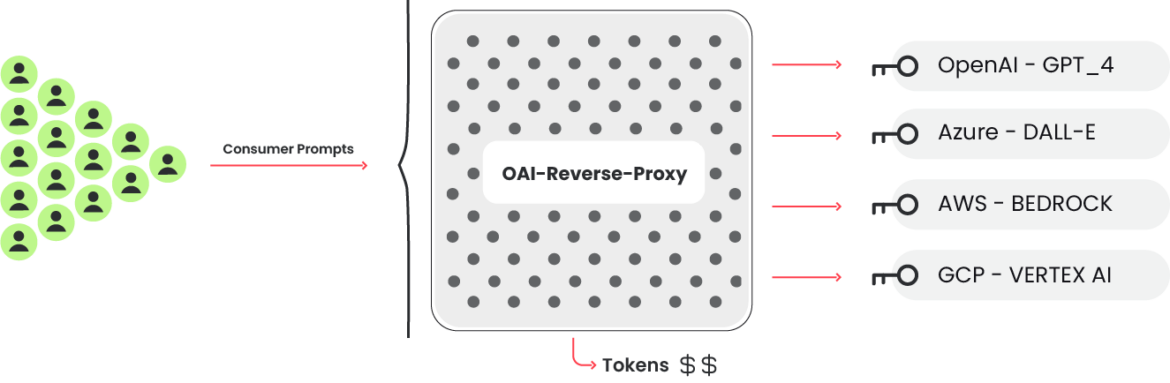

LLM Reverse Proxy

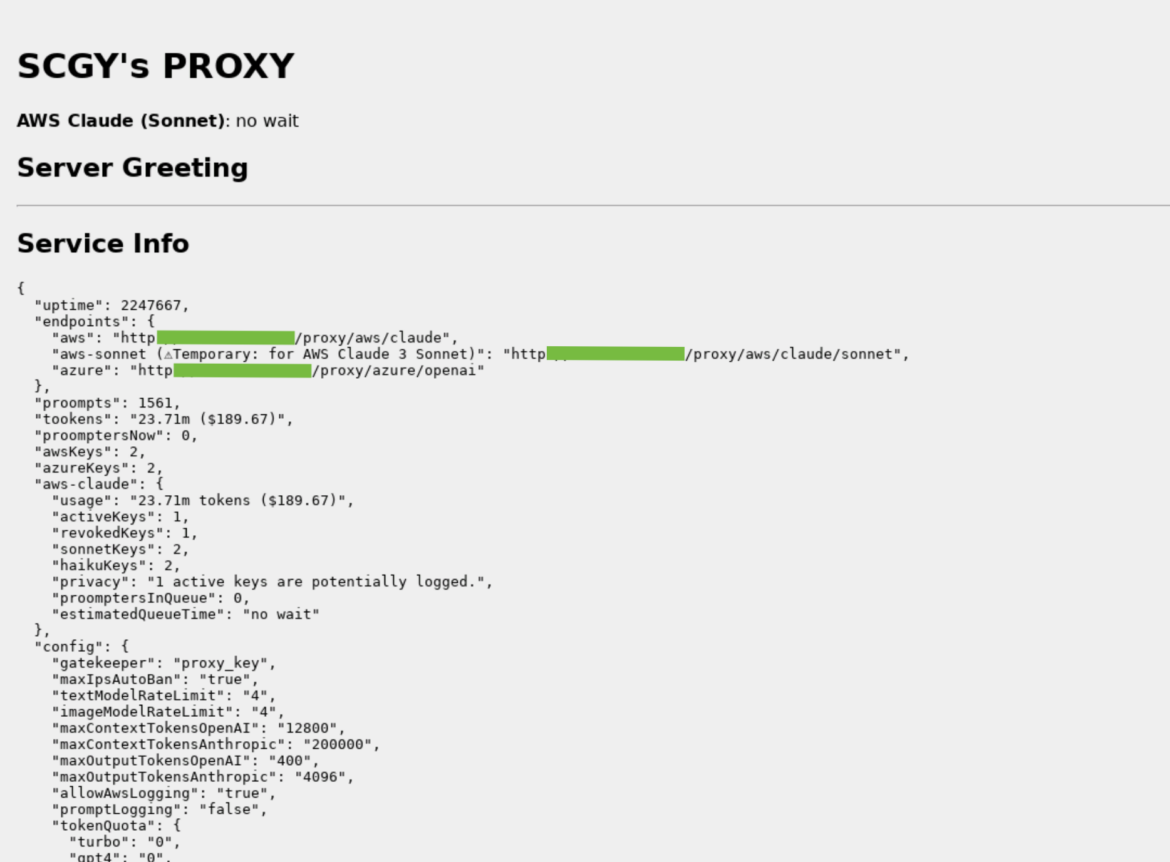

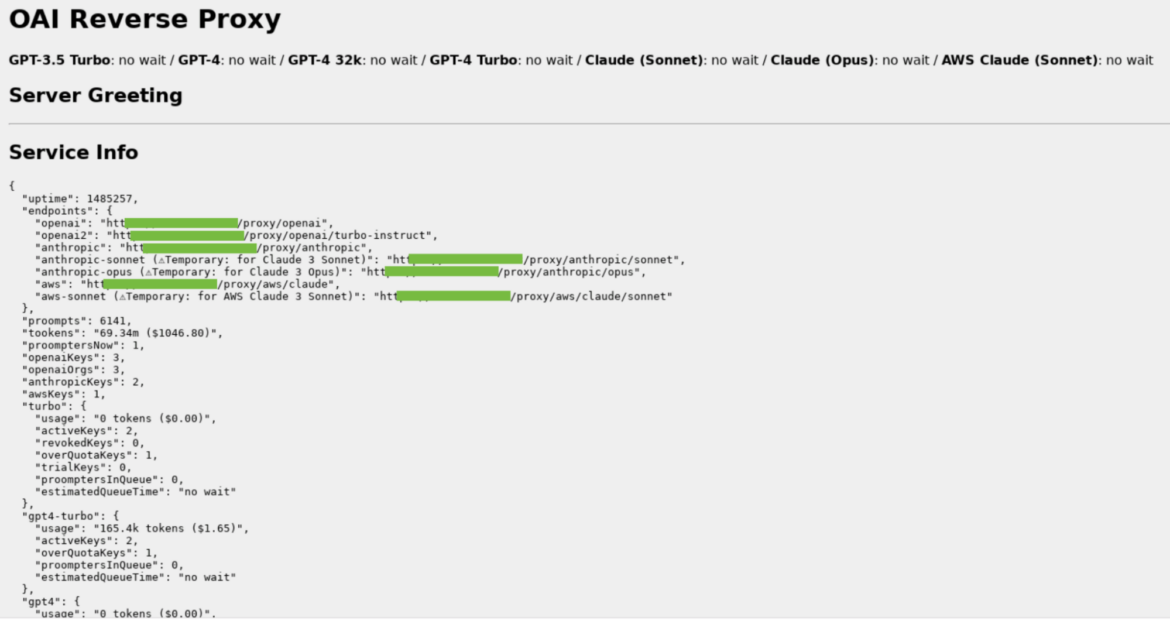

The key checking code that verifies if credentials are able to use targeted LLMs also makes reference to another project: OAI Reverse Proxy. This open source project acts as a reverse proxy for LLM services. Using software such as this would allow an attacker to centrally manage access to multiple LLM accounts while not exposing the underlying credentials, or in this case, the underlying pool of compromised credentials. During the attack using the compromised cloud credentials, a user-agent that matches OAI Reverse Proxy was seen attempting to use LLM models.

The image above is an example of an OAI Reverse Proxy, which we found running on the Internet. There is no evidence that this instance is tied to this attack in any way, but it does show the kind of information it collects and displays. Of special note are the token counts (“tookens”), costs, and keys, which are potentially being logged.

This example shows an OAI reverse proxy instance, which is set up to use multiple types of LLMs. There is no evidence that this instance is involved with the attack.

If the attackers were gathering an inventory of useful credentials and wanted to sell access to the available LLM models, a reverse proxy like this could allow them to monetize their efforts. There is currently no evidence to prove that this was the attacker’s goal, but it seems to be a likely option since the reverse proxy is being used.

Technical Analysis

In this technical breakdown, we explore how the attackers navigated an AWS cloud environment to carry out their intrusion. By employing seemingly legitimate API requests within the cloud environment, they cleverly tested the boundaries of their access without immediately triggering alarms. The example below demonstrates a strategic use of the InvokeModel API call logged by CloudTrail. Although the attackers issued a valid request, they intentionally set the max_tokens_to_sample parameter to -1. This unusual parameter, typically expected to trigger an error, instead served a dual purpose. It confirmed not only the existence of access to the LLMs but also that these services were active, as indicated by the resulting ValidationException. A different outcome, such as an AccessDenied error, would have suggested restricted access. This subtle probing reveals a calculated approach to uncover what actions their stolen credentials permitted within the cloud account.

InvokeModel

The InvokeModel call is logged by CloudTrail, and an example malicious event can be seen below. The attackers sent an otherwise legitimate request but set “max_tokens_to_sample” to -1. This invalid value causes a “ValidationException” error, which is useful to the attacker because it confirms that the credentials have access to the LLMs and that the models have been enabled. Otherwise, they would have received an “AccessDenied” error.

{
  "eventVersion": "1.09",
  "userIdentity": {
    "type": "IAMUser",
    "principalId": "[REDACTED]",
    "arn": "[REDACTED]",
    "accountId": "[REDACTED]",
    "accessKeyId": "[REDACTED]",
    "userName": "[REDACTED]"
  },
  "eventTime": "[REDACTED]",
  "eventSource": "bedrock.amazonaws.com",
  "eventName": "InvokeModel",
  "awsRegion": "us-east-1",
  "sourceIPAddress": "83.7.139.184",
  "userAgent": "Boto3/1.29.7 md/Botocore#1.32.7 ua/2.0 os/windows#10 md/arch#amd64 lang/python#3.12.1 md/pyimpl#CPython cfg/retry-mode#legacy Botocore/1.32.7",
  "errorCode": "ValidationException",
  "errorMessage": "max_tokens_to_sample: range: 1..1,000,000",
  "requestParameters": {
    "modelId": "anthropic.claude-v2"
  },
  "responseElements": null,
  "requestID": "d4dced7e-25c8-4e8e-a893-38c61e888d91",
  "eventID": "419e15ca-2097-4190-a233-678415ed9a4f",
  "readOnly": true,
  "eventType": "AwsApiCall",
  "managementEvent": true,
  "recipientAccountId": "[REDACTED]",
  "eventCategory": "Management",
  "tlsDetails": {
    "tlsVersion": "TLSv1.3",
    "cipherSuite": "TLS_AES_128_GCM_SHA256",
    "clientProvidedHostHeader": "bedrock-runtime.us-east-1.amazonaws.com"
  }
}

Example CloudTrail log
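To make the meaning of the two error codes concrete, here is a minimal Python sketch that interprets a CloudTrail record the way the text describes. The function name and return labels are hypothetical, and matching any error code that starts with "AccessDenied" is our assumption about how the denial appears in the log.

```python
from typing import Any, Dict


def classify_bedrock_probe(event: Dict[str, Any]) -> str:
    """Label a CloudTrail record according to the probing logic described above.

    "not_bedrock_invoke"  : not an InvokeModel call against Bedrock
    "probe_model_enabled" : ValidationException, so the credentials reach an enabled model
    "probe_denied"        : AccessDenied-style error, so the credentials lack access
    "other"               : a successful call or an unrelated error
    """
    if (event.get("eventSource") != "bedrock.amazonaws.com"
            or event.get("eventName") != "InvokeModel"):
        return "not_bedrock_invoke"
    error = event.get("errorCode") or ""
    if error == "ValidationException":
        return "probe_model_enabled"
    if error.startswith("AccessDenied"):  # assumption: covers AccessDenied variants
        return "probe_denied"
    return "other"


# Trimmed-down version of the example event
sample_event = {
    "eventSource": "bedrock.amazonaws.com",
    "eventName": "InvokeModel",
    "errorCode": "ValidationException",
    "requestParameters": {"modelId": "anthropic.claude-v2"},
}
print(classify_bedrock_probe(sample_event))
```

A defender could run the same check over exported CloudTrail records to find credentials that were probed this way.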

Cloud service providers often stagger the rollout of new functionalities across their various geographic regions. This phased deployment strategy allows the vendors to manage scalability and ensure stability across their networks. Consequently, attackers exploiting these services must tailor their actions based on the availability of specific features in designated regions. In this instance, the attackers utilized the InvokeModel API call only in regions where the service had been enabled. The availability of different models and services can vary significantly by region, underscoring the need for a nuanced approach when navigating or securing multi-regional cloud architectures.

GetModelInvocationLoggingConfiguration

Interestingly, the attackers showed interest in how the service was configured. This can be done by calling “GetModelInvocationLoggingConfiguration,” which returns the S3 and CloudWatch logging configuration if enabled. In our setup, we used both S3 and CloudWatch to gather as much data about the attack as possible.

{
  "loggingConfig": {
    "cloudWatchConfig": {
      "logGroupName": "[REDACTED]",
      "roleArn": "[REDACTED]",
      "largeDataDeliveryS3Config": {
        "bucketName": "[REDACTED]",
        "keyPrefix": "[REDACTED]"
      }
    },
    "s3Config": {
      "bucketName": "[REDACTED]",
      "keyPrefix": ""
    },
    "textDataDeliveryEnabled": true,
    "imageDataDeliveryEnabled": true,
    "embeddingDataDeliveryEnabled": true
  }
}

Example GetModelInvocationLoggingConfiguration response

Information about the prompts being run and their results is not stored in CloudTrail. Instead, additional configuration is needed to send that information to CloudWatch and S3. The attackers check this configuration so they can keep the details of their activity out of those logs. OAI Reverse Proxy states it will not use any AWS key that has logging enabled for the sake of “privacy.” This makes it impossible to inspect the prompts and responses if they are using the AWS Bedrock vector.
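Defenders can run the same check on their own accounts. The Python sketch below reads a GetModelInvocationLoggingConfiguration response, in the shape of the example, and reports which destinations are configured. The function name is hypothetical, and treating a missing or empty "loggingConfig" as "logging disabled" is an assumption.

```python
from typing import Any, Dict, List


def logging_destinations(response: Dict[str, Any]) -> List[str]:
    """Return the invocation-logging destinations present in the response."""
    config = response.get("loggingConfig") or {}
    destinations = []
    if (config.get("cloudWatchConfig") or {}).get("logGroupName"):
        destinations.append("cloudwatch")
    if (config.get("s3Config") or {}).get("bucketName"):
        destinations.append("s3")
    return destinations


# Shape taken from the example response; values are placeholders
example_response = {
    "loggingConfig": {
        "cloudWatchConfig": {"logGroupName": "[REDACTED]", "roleArn": "[REDACTED]"},
        "s3Config": {"bucketName": "[REDACTED]", "keyPrefix": ""},
        "textDataDeliveryEnabled": True,
    }
}
print(logging_destinations(example_response))
```

An empty list for an account that uses Bedrock would mean prompts and responses are not being recorded anywhere.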

Impact

In an LLMjacking attack, the damage comes in the form of increased costs to the victim. It shouldn’t be surprising to learn that using an LLM isn’t cheap and that cost can add up very quickly. Considering the worst-case scenario where an attacker abuses Anthropic Claude 2.x and reaches the quota limit in multiple regions, the cost to the victim can be over $46,000 per day.

According to the pricing and the initial quota limit for Claude 2:

- 1000 input tokens cost $0.008, 1000 output tokens cost $0.024.

- Max 500,000 input and output tokens can be processed per minute according to AWS Bedrock. We can consider the average cost between input and output tokens, which is $0.016 for 1000 tokens.

- Claude 2 is currently available in four regions.

Leading to the total cost: (500K tokens/1000 * $0.016) * 60 minutes * 24 hours * 4 regions = $46,080 / day
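The same arithmetic, written out as a short Python sketch so the inputs can be swapped for other prices, quotas, or region counts. The function and parameter names are ours; the default values are the ones quoted above.

```python
def daily_cost(
    input_price_per_1k: float = 0.008,
    output_price_per_1k: float = 0.024,
    tokens_per_minute: int = 500_000,
    regions: int = 4,
) -> float:
    """Worst-case daily spend if the per-minute token quota is saturated in every region."""
    # Average of the input and output prices, as in the estimate above
    average_price_per_1k = (input_price_per_1k + output_price_per_1k) / 2
    cost_per_minute = tokens_per_minute / 1000 * average_price_per_1k
    return cost_per_minute * 60 * 24 * regions


print(f"${daily_cost():,.0f} per day")  # $46,080 per day
```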

By maximizing the quota limits, attackers can also block the compromised organization from using Bedrock models legitimately. This can disrupt business operations that integrate Bedrock into automated processes.

Detection

Monitoring CloudTrail and other logs can reveal suspicious or unauthorized activity. Using the AWS plugin for Falco or Sysdig Secure, the reconnaissance methods used during the attack can be detected, and a response can be started. For Sysdig Secure customers, this rule can be found in the Sysdig AWS Notable Events policy.

Falco rule:

- rule: Bedrock Model Recon Activity
  desc: Detect reconnaissance attempts to check if Amazon Bedrock is enabled, based on the error code. Attackers can leverage this to discover the status of Bedrock, and then abuse it if enabled.
  condition: jevt.value[/eventSource]="bedrock.amazonaws.com" and jevt.value[/eventName]="InvokeModel" and jevt.value[/errorCode]="ValidationException"
  output: A reconnaissance attempt on Amazon Bedrock has been made (requesting user=%aws.user, requesting IP=%aws.sourceIP, AWS region=%aws.region, arn=%jevt.value[/userIdentity/arn], userAgent=%jevt.value[/userAgent], modelId=%jevt.value[/requestParameters/modelId])
  priority: WARNING

Monitoring your organization’s LLM usage is also important. For AWS Bedrock, CloudWatch and S3 can be set up to store additional data. Indeed, as shown previously, the CloudTrail log of InvokeModel does not contain the details about the prompt input and output.

The Bedrock settings allow users to enable model invocation logging easily. For CloudWatch, a log group and a role with proper permissions must be created. Similarly, logging to S3 needs a bucket as a destination. Also, to log model input or output data larger than 100 KB or in binary format, one must explicitly specify an S3 destination for large data delivery. This data includes input and output images that are stored in the logs as Base64 strings.

The logs contain additional information about the tokens processed, as shown in the following example:

{
  "schemaType": "ModelInvocationLog",
  "schemaVersion": "1.0",
  "timestamp": "[REDACTED]",
  "accountId": "[REDACTED]",
  "identity": {
    "arn": "[REDACTED]"
  },
  "region": "us-east-1",
  "requestId": "bea9d003-f7df-4558-8823-367349de75f2",
  "operation": "InvokeModel",
  "modelId": "anthropic.claude-v2",
  "input": {
    "inputContentType": "application/json",
    "inputBodyJson": {
      "prompt": "\n\nHuman: Write a story of a young wizard\n\nAssistant:",
      "max_tokens_to_sample": 300
    },
    "inputTokenCount": 16
  },
  "output": {
    "outputContentType": "application/json",
    "outputBodyJson": {
      "completion": " Here is a story about a young wizard:\n\nMartin was an ordinary boy living in a small village. He helped his parents around their modest farm, tending to the animals and working in the fields. [...] Martin's favorite subject was transfiguration, the art of transforming objects from one thing to another. He mastered the subject quickly, amazing his professors by turning mice into goblets and stones into fluttering birds.\n\nMartin",
      "stop_reason": "max_tokens",
      "stop": null
    },
    "outputTokenCount": 300
  }
}

Example S3 log
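Because each record carries token counts and the caller's ARN, these logs can be turned into a rough spend estimate per identity. The Python sketch below assumes the records are available as one JSON object per line and applies the Claude 2 prices quoted in the Impact section; other models are priced differently, and the function name is hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable

# Claude 2 prices per 1,000 tokens, as quoted in the Impact section
INPUT_PRICE_PER_1K = 0.008
OUTPUT_PRICE_PER_1K = 0.024


def cost_by_identity(log_lines: Iterable[str]) -> Dict[str, float]:
    """Sum the estimated cost per caller ARN from model invocation log records."""
    totals: Dict[str, float] = defaultdict(float)
    for line in log_lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines between records
        record = json.loads(line)
        arn = record.get("identity", {}).get("arn", "unknown")
        tokens_in = record.get("input", {}).get("inputTokenCount", 0)
        tokens_out = record.get("output", {}).get("outputTokenCount", 0)
        totals[arn] += (tokens_in / 1000 * INPUT_PRICE_PER_1K
                        + tokens_out / 1000 * OUTPUT_PRICE_PER_1K)
    return dict(totals)


# One record with the token counts from the example log
record = json.dumps({
    "identity": {"arn": "[REDACTED]"},
    "input": {"inputTokenCount": 16},
    "output": {"outputTokenCount": 300},
})
print(cost_by_identity([record]))
```

A sudden jump in the total for a single ARN, or spend from an identity that should not be calling Bedrock at all, is the kind of signal worth alerting on.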

Moreover, CloudWatch alerts can be configured to handle suspicious behaviors. Several runtime metrics for Bedrock can be monitored to trigger alerts.

Recommendations

AWS provides a number of tools and recommendations to help prevent the cloud attacks described in this research. The AWS Security Reference Architecture offers best practices, which can be used to build your environment in a secure way from the start. Service Control Policies (SCP) should be used to centrally manage permissions and reduce the risk of over-permissioned accounts, potentially leading towards abuse of the services provided by AWS.
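As one illustration of how an SCP could limit this kind of abuse, the Python sketch below builds a policy document that denies Bedrock model invocation outside an approved list of regions. This is a hypothetical example, not a policy from the research: the region list, statement ID, and function name are placeholders, and any real policy should be tested against legitimate workloads before it is attached.

```python
import json
from typing import Any, Dict, List


def bedrock_region_scp(allowed_regions: List[str]) -> Dict[str, Any]:
    """Build an SCP that denies Bedrock invocation outside the allowed regions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyBedrockInvokeOutsideApprovedRegions",  # placeholder name
                "Effect": "Deny",
                "Action": [
                    "bedrock:InvokeModel",
                    "bedrock:InvokeModelWithResponseStream",
                ],
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {"aws:RequestedRegion": allowed_regions}
                },
            }
        ],
    }


# "us-east-1" is only an example of an approved region
print(json.dumps(bedrock_region_scp(["us-east-1"]), indent=2))
```

Accounts that do not use Bedrock at all could drop the condition and deny the actions outright.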

This attack could have been prevented in a number of ways, including:

- Vulnerability management to prevent initial access.

- Secrets management to ensure credentials are not stored in the clear where they can be stolen.

- CSPM/CIEM to ensure the abused account had the least amount of permissions it needed.

Conclusion

Stolen cloud and SaaS credentials continue to be a common attack vector. This trend will only increase in popularity as attackers learn all of the ways they can leverage their new access for financial gain. The use of LLM services can be expensive, depending on the model and the number of tokens being fed to it. Normally, this would cause a developer to try to be efficient; sadly, attackers do not have the same incentive. Detection and response are critical to deal with any issues quickly.

IoCs

IP Addresses

83.7.139.184

83.7.157.76

73.105.135.228

83.7.135.97

The post LLMjacking: Stolen Cloud Credentials Used in New AI Attack appeared first on Sysdig.
