In Kafka streaming applications, where data flows continuously, the reliability and correctness of your streams are paramount. Unit testing is the cornerstone of this effort: it validates the individual components that make up your Kafka streaming applications. In this blog post, we’ll explore the importance of unit testing in Kafka streaming, focusing specifically on Scala Spark code, and discuss strategies to fortify your data processing pipelines.

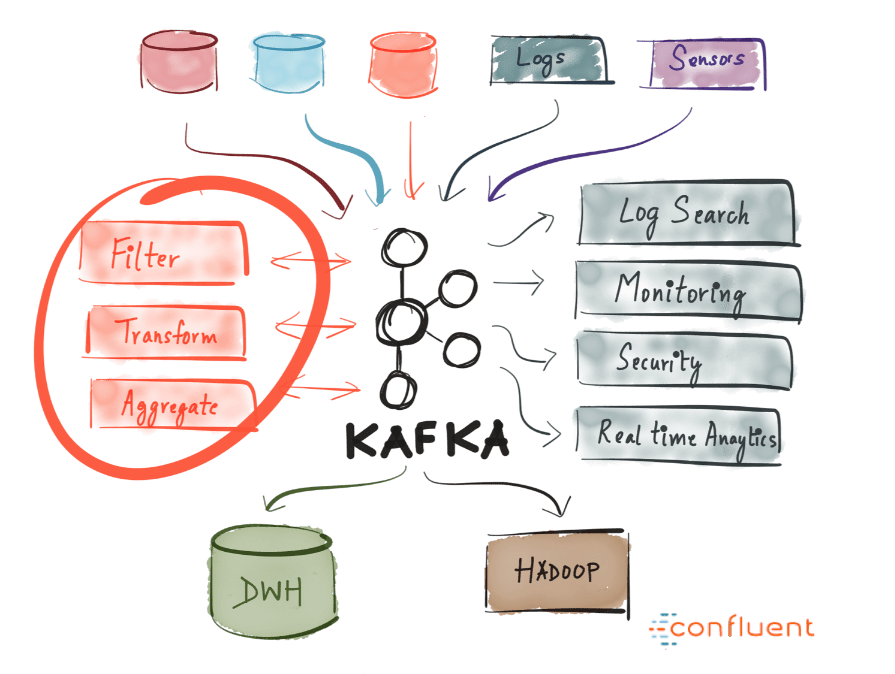

Photo Credits: Confluent

Why is unit testing needed?

Kafka streaming applications are intricate ecosystems with interconnected components that handle data ingestion, processing, and output. Unit testing allows developers to validate each of these components in isolation, ensuring they function as intended. This granular approach not only accelerates the development process but also contributes to the overall reliability and stability of the Kafka streaming architecture.

To be more specific,

=> Testing Transformers:

- Kafka streams rely heavily on transformers to manipulate and process data. Unit tests for these components should cover scenarios where input data is transformed correctly and output adheres to the expected format.

- Mocking is a valuable technique here, enabling you to isolate the unit under test and simulate various input scenarios.

- In Kafka streaming applications, Scala Spark code often involves intricate data transformations. Unit tests provide a mechanism to validate that these transformations are executed accurately, ensuring the integrity of your data processing logic.

- Scala Spark code in Kafka streaming frequently incorporates stateful operations such as windowed aggregations. Unit tests enable you to verify that these operations behave correctly and that the application maintains the expected state across time windows.
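As a rough illustration of the windowed-aggregation point, the sketch below keeps the aggregation in a plain function over a `DataFrame` and checks it against a small static input. The names `WindowedCounts`, `countsPerWindow`, and the `key`/`eventTime` columns are hypothetical, and the example assumes Spark 3.x is on the test classpath.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, count, to_timestamp, window}

object WindowedCounts {
  // Logic under test (hypothetical): events per key in 10-minute tumbling windows.
  def countsPerWindow(events: DataFrame): DataFrame =
    events
      .groupBy(window(col("eventTime"), "10 minutes"), col("key"))
      .agg(count("*").as("n"))
}

object WindowedCountsCheck {
  // Runs the aggregation on three hand-written events and returns the sorted counts.
  def run(): Seq[Long] = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("windowed-counts-check")
      .config("spark.sql.session.timeZone", "UTC") // keeps window boundaries deterministic
      .getOrCreate()
    import spark.implicits._

    // Two events fall in the 00:00-00:10 window, one in the 00:10-00:20 window.
    val events = Seq(
      ("a", "2024-01-01 00:01:00"),
      ("a", "2024-01-01 00:09:00"),
      ("a", "2024-01-01 00:11:00")
    ).toDF("key", "ts").withColumn("eventTime", to_timestamp(col("ts")))

    WindowedCounts.countsPerWindow(events).select("n").as[Long].collect().toSeq.sorted
  }
}
```

Because the function only depends on a `DataFrame`, the same logic can be exercised on static test data here and applied to a streaming `DataFrame` in the application.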

=> Fault Tolerance and Recovery:

- Spark streaming applications need to be resilient to failures and capable of recovering gracefully. Unit tests allow you to simulate failure scenarios and assess how your Spark code reacts, ensuring that your application can recover and resume processing without data loss.

=> Error Handling:

- Unit tests should cover scenarios where errors may occur, such as network issues or unexpected data formats. Assessing how your Kafka streaming application handles these situations is crucial for robustness.

=> Integration with External Systems:

- Many Kafka streaming applications interact with external systems, databases, or APIs. Unit tests provide an opportunity to validate these interactions, typically against mocks or stubs, ensuring that data is ingested, processed, and written out correctly even when external dependencies are involved.

Tips for Kafka Streaming Unit Tests with Scala Spark

=> Using the ETL structure:

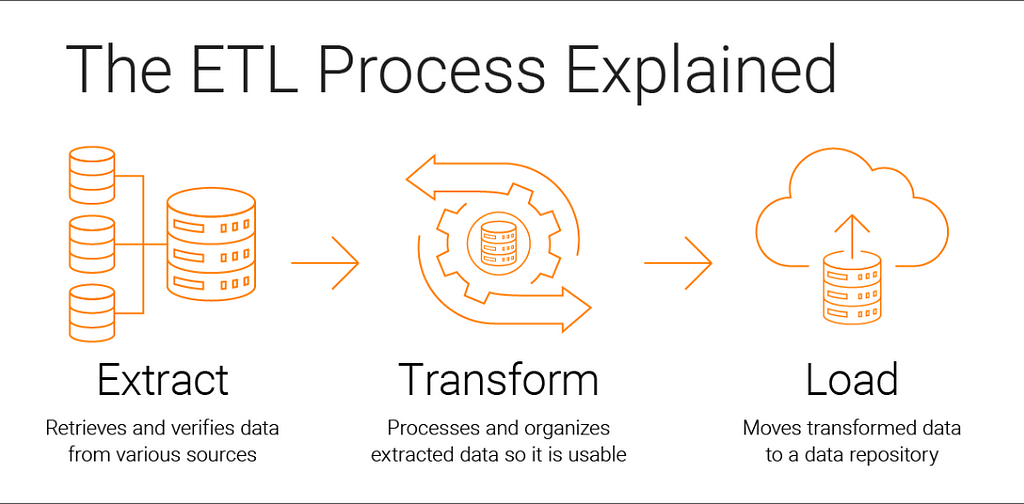

Photo Credits: Informatica

Split your code into an Extractor, a Transformer, and a Loader so that you can unit test the logic precisely. Extractors can be used to obtain realistic data for testing the logic, and loaders can be used to examine the format and correctness of the output data.
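One way to picture this split is the minimal sketch below. It uses plain Scala collections in place of DataFrames so that it runs without a Spark session, and every name in it (`Extractor`, `FixtureExtractor`, `AmountTransformer`, `CapturingLoader`, and the case classes) is hypothetical rather than taken from the original project. It assumes Scala 2.13 or later.

```scala
// Hypothetical ETL split; Seq[...] stands in for a Spark DataFrame.
final case class RawEvent(key: String, value: String)
final case class CleanEvent(key: String, amount: Double)

trait Extractor[A] { def extract(): Seq[A] }
trait Transformer[A, B] { def transform(input: Seq[A]): Seq[B] }
trait Loader[B] { def load(output: Seq[B]): Unit }

// Test double: serves canned records instead of reading from Kafka.
final class FixtureExtractor(records: Seq[RawEvent]) extends Extractor[RawEvent] {
  def extract(): Seq[RawEvent] = records
}

// Logic under test: keeps only records whose value parses as a number.
object AmountTransformer extends Transformer[RawEvent, CleanEvent] {
  def transform(input: Seq[RawEvent]): Seq[CleanEvent] =
    input.flatMap(e => e.value.toDoubleOption.map(CleanEvent(e.key, _)))
}

// Test double: captures output in memory so a test can inspect it.
final class CapturingLoader[B] extends Loader[B] {
  private val buffer = scala.collection.mutable.ArrayBuffer.empty[B]
  def load(output: Seq[B]): Unit = buffer ++= output
  def captured: Seq[B] = buffer.toSeq
}

object EtlSketch {
  // Wires the three stages together the way a test would.
  def run(): Seq[CleanEvent] = {
    val extractor = new FixtureExtractor(Seq(RawEvent("a", "1.5"), RawEvent("b", "oops")))
    val loader = new CapturingLoader[CleanEvent]
    loader.load(AmountTransformer.transform(extractor.extract()))
    loader.captured
  }
}
```

With the stages behind small interfaces, a test can swap the Kafka-backed extractor and the real sink for in-memory doubles and assert only on the transformer's behavior.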

=> Test Spark Streaming Logic:

```scala
import org.apache.spark.sql.DataFrame

// BaseSpec, SparkIOUtil, SC, TC, paramsMap, libraryObjects and OutputDf are
// assumed to be defined elsewhere in the project.
class StreamingTransformerSpec extends BaseSpec {

  "StreamingTransformerSpec" should "create the data frame that is equal to expected Df" in {
    val spark = SparkIOUtil.spark

    // Input dataframes
    val KafkaDataPath: String = paramsMap(SC.TEST_BASE_PATH).toString.concat("StreamingTransformer/input/kafkadata")
    val KafkaData = spark.read.json(KafkaDataPath)

    // Expected dataframes
    val ExpectedKafkaDataPath: String = paramsMap(SC.TEST_BASE_PATH).toString.concat("StreamingTransformer/expected/ActualKafkaDataDF")
    val ExpectedKafkaData = spark.read.schema(TC.kafkatestschema).json(ExpectedKafkaDataPath)

    val dataFrameMap: Map[String, DataFrame] = Map(
      SC.KAKFASOURCE -> KafkaData
    )

    // Getting the output from transformer by passing the input dataframes
    val outActualMap: Map[String, DataFrame] = new TestTransformer().transform(paramsMap, dataFrameMap, libraryObjects)
    val ActualKafkaDataDF = outActualMap(OutputDf)

    assertDataFrameEquals(ActualKafkaDataDF, ExpectedKafkaData)
  }
}
```

Ensure that your unit tests cover the logic within the Spark streaming context, including transformers and windowed operations. Whenever possible, use realistic data in your unit tests; this helps uncover issues that may arise with actual production data.

=> Simulate Real-Time Scenarios:

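```scala
// Write the stream to CSV files to capture sample data for tests.
// Assumes KafkaDf columns are already cast to CSV-compatible types
// (the raw Kafka key/value columns are binary).
KafkaDf.writeStream
  .format("csv")
  .option("checkpointLocation", "checkpointLocation") // placeholder path
  .option("path", "path_of_csv_file")                 // placeholder path
  .outputMode("append")
  .start()
```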

Spark streaming is designed for real-time data processing. Create unit tests that simulate real-time scenarios by ingesting data in a manner that mirrors your production environment. This helps identify potential issues specific to the streaming nature of your application.

You can use the above script to write your data stream to CSV files and use them as a realistic test case.
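Another option, sketched below under some assumptions, is to feed records into the query batch by batch from inside the test. The sketch uses `MemoryStream`, which lives in an internal Spark package (`org.apache.spark.sql.execution.streaming` in Spark 3.x) and is used in Spark's own test suites, so it is not a stable public API. The `upper` call is only a stand-in for the real transformation, and the object and query names are hypothetical.

```scala
import org.apache.spark.sql.{SQLContext, SparkSession}
import org.apache.spark.sql.execution.streaming.MemoryStream
import org.apache.spark.sql.functions.{col, upper}

object MemoryStreamSketch {
  // Pushes two micro-batches through a streaming query and returns the sorted output.
  def run(): Seq[String] = {
    val spark = SparkSession.builder()
      .master("local[2]")
      .appName("memory-stream-sketch")
      .getOrCreate()
    import spark.implicits._
    implicit val sqlContext: SQLContext = spark.sqlContext

    // In-memory streaming source standing in for the Kafka topic.
    val input = MemoryStream[String]

    // Stand-in for the real transformation under test.
    val transformed = input.toDF().select(upper(col("value")).as("value"))

    // The memory sink exposes results as a temporary table named after the query.
    val query = transformed.writeStream
      .format("memory")
      .queryName("memory_stream_sketch_out")
      .outputMode("append")
      .start()

    try {
      input.addData("a", "b")     // first micro-batch
      query.processAllAvailable() // block until it has been processed
      input.addData("c")          // second micro-batch
      query.processAllAvailable()
      spark.table("memory_stream_sketch_out").as[String].collect().toSeq.sorted
    } finally {
      query.stop()
    }
  }
}
```

Calling `processAllAvailable()` after each `addData` makes the test deterministic: the assertion runs only once every record added so far has reached the sink.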

=> Test Error Handling:

Spark streaming applications should gracefully handle errors and exceptions. Design unit tests to evaluate how your Scala Spark code reacts to erroneous inputs, network failures, or other exceptional conditions.
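For the erroneous-input case, a minimal sketch is shown below. It assumes a hypothetical `id,amount` record format and a hypothetical `OrderParser`; the idea is that the parsing step returns a value describing the failure instead of throwing, so a test can assert on both the accepted and the rejected records. It relies on `partitionMap`, which requires Scala 2.13 or later.

```scala
import scala.util.{Failure, Success, Try}

final case class Order(id: String, amount: Double)

object OrderParser {
  // Parses "id,amount"; returns Left with a reason instead of throwing.
  def parse(line: String): Either[String, Order] =
    line.split(",", -1) match {
      case Array(id, amount) if id.nonEmpty =>
        Try(amount.trim.toDouble) match {
          case Success(value) => Right(Order(id, value))
          case Failure(_)     => Left(s"bad amount in: $line")
        }
      case _ => Left(s"malformed record: $line")
    }

  // Splits a batch into rejection reasons and successfully parsed orders.
  def parseAll(lines: Seq[String]): (Seq[String], Seq[Order]) =
    lines.map(parse).partitionMap(identity)
}
```

A test can then pass a mix of valid and broken lines to `parseAll` and check that the valid ones come through while each broken one produces a rejection reason.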

=> Integrate with Continuous Integration (CI):

Embed your unit tests into your CI/CD pipeline to automatically validate changes. This ensures that any modifications to your Scala Spark code are thoroughly tested and won’t introduce regressions.
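If the project is built with sbt (an assumption; the original does not name a build tool), a CI job can simply run `sbt test`. The `build.sbt` fragment below is a sketch of settings that are often useful for Spark test suites; the heap size is an illustrative value.

```scala
// build.sbt fragment (sbt 1.x syntax); values are illustrative assumptions.
Test / fork := true                   // run tests in a separate JVM
Test / parallelExecution := false     // avoid suites competing for one local SparkSession
Test / javaOptions ++= Seq("-Xmx2g")  // heap for the forked test JVM
```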

=> Monitor and Analyze Test Results:

Regularly monitor and analyze the results of your unit tests. Identify patterns of failures and continuously refine your tests to cover edge cases and potential pitfalls.

Unit testing is a linchpin in the development lifecycle of Kafka streaming applications. By rigorously testing individual components, handling various scenarios, and leveraging the right tools, you can enhance the reliability and maintainability of your Kafka streaming architecture. As you embark on your Kafka journey, remember: robust unit tests pave the way for seamless and error-resistant data flows.

Ensuring Reliability: Unit Testing Kafka Streaming with Scala Spark Code was originally published in Walmart Global Tech Blog on Medium.