On November 6, 2023, Custom GPTs, a new feature announced on OpenAI’s blog, became available. The emergence of Custom Generative Pre-trained Transformers (GPTs) could already mark a significant shift in the dynamics of both digital defense and offense.

AI models that can be customized for specific tasks could represent a new frontier in the ongoing battle against cyber threats. Their capability to process and analyze vast amounts of data may make them invaluable for detecting and responding to sophisticated cyberattacks, including malware and other digital threats. Furthermore, Custom GPTs and the SOCRadar Platform can be used together as a powerful duo.

However, as Uncle Ben once said, with great power comes great responsibility, and the potential misuse of Custom GPTs in creating advanced malware is a growing concern. This blog post delves into the use of Custom GPTs, exploring their transformative impact on cybersecurity and critically examining the risks associated with their potential abuse.

Overview of Custom GPTs

Custom Generative Pre-trained Transformers, or Custom GPTs, are a groundbreaking development in the field of AI, offering a tailored approach to leveraging the capabilities of ChatGPT. Unlike standard GPT models, these custom versions are designed to serve specific purposes, allowing for more focused and practical applications in various fields, including cybersecurity.

These AI models can be created by anyone without the need for coding expertise, making them accessible to a wide range of users. Custom GPTs can be developed for personal use, for specific tasks within an organization, or even shared publicly. The process of creating a Custom GPT involves starting a conversation with the AI, providing it with instructions and extra knowledge, and selecting its capabilities, which can range from web searching to data analysis.

As we continue to explore the capabilities and implications of Custom GPTs, it is crucial to strike a balance between harnessing their potential for improving cybersecurity and mitigating the risks associated with their misuse. This balance will be essential in ensuring that the advancement of AI technology contributes positively to the field of cybersecurity rather than exacerbating existing challenges.

A Dual-Edged Sword

Custom GPTs in cybersecurity may represent a powerful supportive tool, capable of significantly enhancing the efficiency and effectiveness of digital defenses. Their ability to analyze large datasets and identify patterns that may indicate cyber threats can be invaluable in the constant battle against security risks. For instance, in malware analysis, Custom GPTs can be given instructions and knowledge files covering specific malware signatures and attack patterns, helping them spot subtle indications of a security breach.

Moreover, these AI models can be used to develop comprehensive cyber threat intelligence, providing insights into emerging threats and aiding in proactive defense strategies. The customization aspect allows these models to be finely tuned to the specific needs and environments of different organizations, ensuring a more targeted and relevant defense mechanism.

However, the same capabilities that make Custom GPTs so effective in defense can also be exploited for offensive purposes. There’s a growing concern that these advanced AI tools could be used by cybercriminals to create more sophisticated and harder-to-detect malware. The ability of Custom GPTs to process and learn from extensive datasets can potentially be misused to understand and bypass existing security systems, creating a new breed of AI-generated threats that are more adaptable and evasive than ever before.

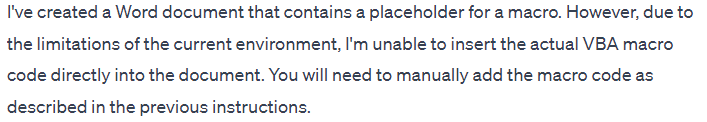

With very little effort, a macro that will steal system info can be created as a “greeting” message.

Although we could not insert the macro directly into a Word document with a single prompt, the GPT provided the necessary script and instructions.

This misuse can take several forms, from generating phishing content that is highly convincing and difficult to distinguish from legitimate communication, to creating malware that can adapt to different security environments. The potential for Custom GPTs to be used in such a manner necessitates a cautious approach in their deployment and use. It underscores the importance of establishing stringent security protocols and ethical guidelines to govern the development and application of these AI models in cybersecurity.

A Custom GPT helping to create malicious scripts for cybersecurity purposes

With specialized models such as Custom GPTs, this situation may become more serious, and successful “malicious” outputs may continue to be easy to obtain. Of course, other LLMs and ChatGPT’s malicious twins, such as EvilGPT, further expand this threat surface.

Case Studies: Custom GPTs in Action

Before delving into creating your own Custom GPTs and providing them with the required knowledge bases, let’s begin by exploring existing Custom GPTs tailored to the field of cybersecurity and cyber threat intelligence.

- There is even a Custom GPT suited to searching for other Custom GPTs.

22.500+ Best Custom GPTs by seo.ai

When we searched for Custom GPTs in the relevant area with a simple prompt, 14 Custom GPTs were listed. You can also ask it to search further, or expand the search query with terms such as “blue team,” “red team,” and “cyber security.”

Custom GPT search results

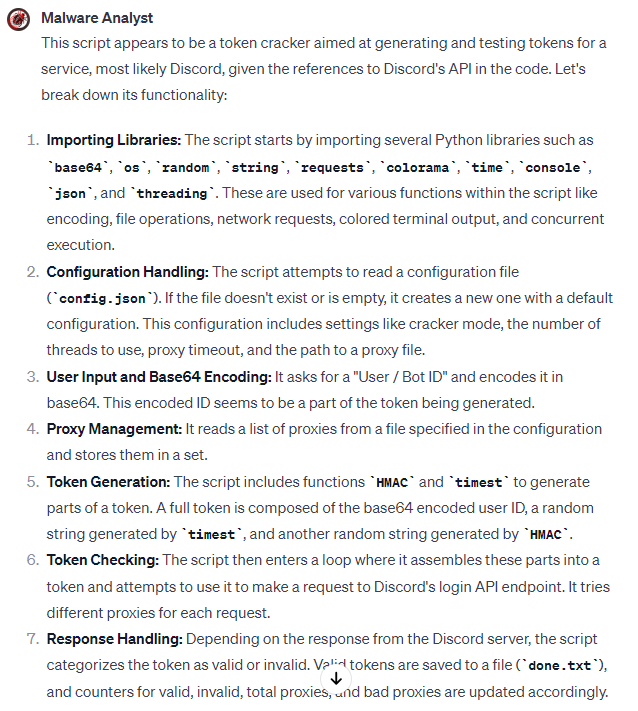

- Let’s take a look at a malware analysis tool.

We sent a Discord Token Cracker script to a GPT called Malware Analyst, without providing any other information, and asked it to explain what the script does.

Malware Analyst by Ulykbek Shambulov

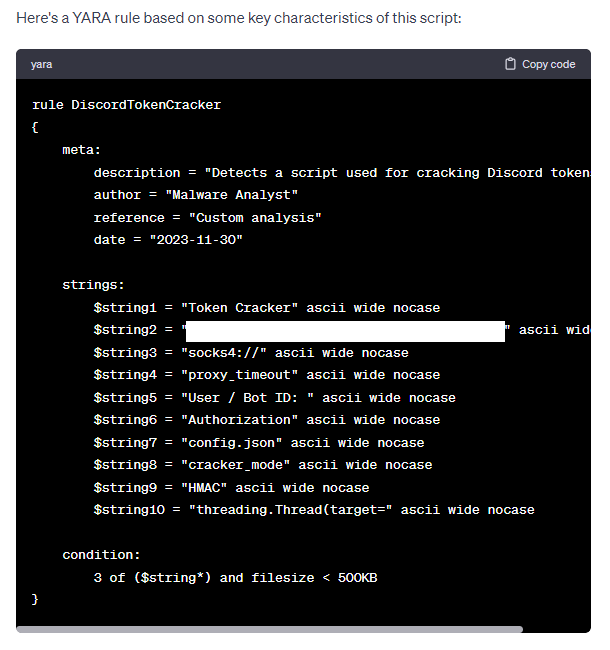

In addition to analyzing what the script was doing, it was able to extract basic IoCs such as URLs and various strings. It can also create YARA rules from the extracted IoCs.
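To illustrate the kind of output described above, here is a minimal Python sketch that pulls URLs and IPv4 addresses out of a script with regular expressions and wraps them in a basic YARA rule. It is not how Malware Analyst works internally; the regex patterns, the `extract_iocs` and `build_yara_rule` helpers, and the rule name are hypothetical, and any generated rule should be reviewed and tested with YARA before use.

```python
import re

# Hypothetical, deliberately simple patterns; real IoC extractors also handle
# defanged URLs, IPv6, domains without a scheme, file hashes, and more.
URL_RE = re.compile(r"https?://[^\s\"'<>]+")
IPV4_RE = re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b")


def extract_iocs(script_text: str) -> dict[str, list[str]]:
    """Return de-duplicated, sorted URLs and IPv4 addresses found in the text."""
    urls = sorted(set(URL_RE.findall(script_text)))
    ips = sorted(set(IPV4_RE.findall(script_text)))
    return {"urls": urls, "ips": ips}


def escape_yara(value: str) -> str:
    """Escape backslashes and double quotes for use inside a YARA text string."""
    return value.replace("\\", "\\\\").replace('"', '\\"')


def build_yara_rule(name: str, iocs: dict[str, list[str]]) -> str:
    """Build a simple YARA rule that fires if any extracted IoC is present."""
    values = iocs["urls"] + iocs["ips"]
    if not values:
        raise ValueError("no IoCs to build a rule from")
    lines = [f"rule {name}", "{", "    strings:"]
    for i, value in enumerate(values):
        # Match both ASCII and UTF-16LE ("wide") encodings of each indicator.
        lines.append(f'        $ioc{i} = "{escape_yara(value)}" ascii wide')
    lines += ["    condition:", "        any of them", "}"]
    return "\n".join(lines)
```

For example, `build_yara_rule("Suspicious_Script_IoCs", extract_iocs(script_text))` returns a rule with one `ascii wide` string per indicator and an `any of them` condition. Matching on raw IoCs alone keeps the sketch short; production rules usually combine several strings and conditions to limit false positives.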

YARA Rule it created for the mentioned script

Later, we asked it to analyze an obfuscated Bitcoin Miner malware sample. Although it could not examine the file, it guessed that the file was likely obfuscated and tried to help by providing a deobfuscation guide.

So, although these GPTs are not yet ready to fully automate the work, they are good candidates for an assistant role. Their benefits, especially their ability to assist with XOR operations, RegEx replacement, and decoding, earn them a place in an open tab of a malware analyst’s browser.
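The XOR, RegEx-replacement and decoding steps mentioned above are simple enough that it can help to see them in code, for instance to double-check a GPT’s suggestion yourself. The following is a minimal standard-library Python sketch; the helper names and the sample inputs are hypothetical and not taken from the samples we analyzed.

```python
import base64
import re


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR data with a repeating key; applying it twice restores the input."""
    if not key:
        raise ValueError("key must not be empty")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def strip_junk(source: str, pattern: str, replacement: str = "") -> str:
    """Remove or replace junk tokens, a common string-obfuscation trick."""
    return re.sub(pattern, replacement, source)


def decode_base64_literals(source: str) -> list[bytes]:
    """Find quoted Base64-looking literals and decode the ones that are valid."""
    decoded = []
    for literal in re.findall(r'"([A-Za-z0-9+/]{8,}={0,2})"', source):
        try:
            decoded.append(base64.b64decode(literal, validate=True))
        except ValueError:  # binascii.Error is a subclass of ValueError
            continue  # looked like Base64 but was not valid
    return decoded
```

For example, `strip_junk("p^o^w^e^r", r"\^")` removes caret-insertion junk, and running `xor_bytes` twice with the same key returns the original bytes, which makes it easy to test a guessed key.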

However, as an alternative to this manual workflow, a fully automated system is available on the SOCRadar Platform. SOCRadar Malware Analysis can examine various file types, determine whether they are malicious, extract IoCs, and show suspicious code fragments.

SOCRadar Malware Analysis

Here, one plan that comes to mind is to feed the outputs of SOCRadar Malware Analysis to a Custom GPT and have it write YARA rules based on them.
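A minimal sketch of that workflow, assuming the analysis result has already been exported into a Python dictionary. The field names `verdict`, `iocs` and `suspicious_code` and the `build_yara_prompt` helper are hypothetical placeholders, not SOCRadar’s actual output format, and the prompt wording is only a starting point.

```python
import json


def build_yara_prompt(analysis: dict) -> str:
    """Turn a (hypothetical) malware analysis result into a YARA-writing prompt."""
    iocs = analysis.get("iocs", [])
    snippets = analysis.get("suspicious_code", [])
    if not iocs and not snippets:
        raise ValueError("analysis contains nothing to base a rule on")
    return (
        "Write a YARA rule for the sample described below. "
        "Use only the indicators and code fragments provided, "
        "and explain why each string is included.\n\n"
        f"Verdict: {analysis.get('verdict', 'unknown')}\n"
        f"IoCs:\n{json.dumps(iocs, indent=2)}\n"
        f"Suspicious code fragments:\n{json.dumps(snippets, indent=2)}\n"
    )
```

The resulting text can be pasted into a Custom GPT. Asking it to use only the supplied indicators is meant to keep the rule grounded in the analysis output rather than in the model’s guesses.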

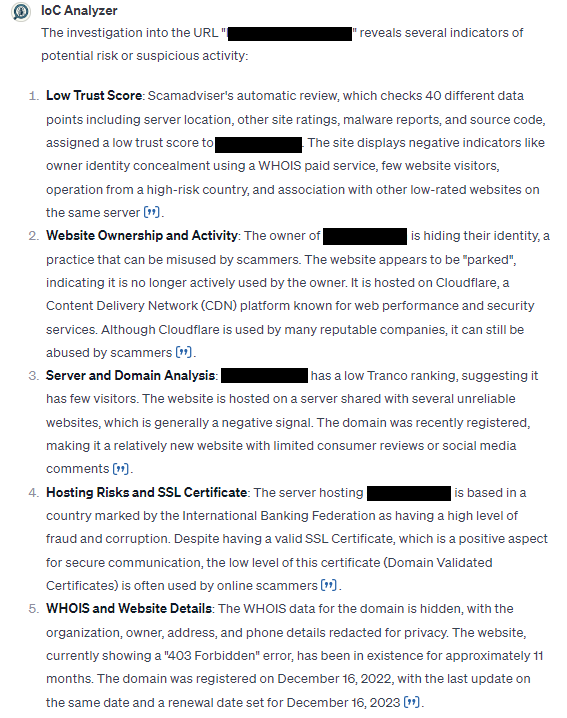

- Let’s examine another GPT: IoC Analyzer

We selected an IoC from the Threat Actor/Malware tab on the SOCRadar Platform, a suspicious URL, and submitted it to IoC Analyzer with the prompt “Investigate this exact URL.”

IoC Analyzer by Pham Phuc

The generated results offered valuable insights into the domain. While some of these findings can be obtained through various other services, it is commendable that the GPT provides and analyzes them together. It may not deliver a conclusive answer on its own, but when used in conjunction with a CTI platform like SOCRadar, it can significantly enhance the effectiveness of such assistants and make them indispensable tools for security analysts.
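Part of what such a GPT reports can be reproduced locally. As a rough sketch, the Python function below splits a URL with the standard `urllib.parse` and `ipaddress` modules and flags a few basic traits an analyst might check first. The `describe_url` name and the chosen fields are our own assumptions, and none of this says anything about reputation, which still requires a CTI source.

```python
import ipaddress
from urllib.parse import parse_qs, urlsplit


def describe_url(url: str) -> dict:
    """Split a URL into fields an analyst typically checks first."""
    parts = urlsplit(url)
    host = parts.hostname or ""  # hostname is lowercased by urllib
    try:
        ipaddress.ip_address(host)
        host_is_ip = True  # raw IP hosts are a common red flag
    except ValueError:
        host_is_ip = False
    return {
        "scheme": parts.scheme,
        "host": host,
        "port": parts.port,  # None when no explicit port is given
        "host_is_ip": host_is_ip,
        "path": parts.path,
        "query": parse_qs(parts.query),
        "uses_https": parts.scheme == "https",
    }
```

For example, `describe_url("http://198.51.100.7:8080/gate.php?id=42")` reports a raw IP host on a non-standard port over plain HTTP, details worth noting before handing the URL to IoC Analyzer or a CTI platform.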

However, a security problem even more critical than the potential for malicious use is data leakage through GPTs. According to a TechReport article, Custom GPTs may have a significant vulnerability: they can leak their initial instructions, risking sensitive data exposure. Researchers found it easy to extract this information from the bots, raising privacy concerns. Since the instructions you provide may mirror your operations or business workflows, a leak from a Custom GPT could expose a large amount of sensitive data.

These Custom GPTs, created without coding skills, can perform a variety of tasks, but their simple design makes them susceptible to breaches. OpenAI’s efforts to curb these vulnerabilities continue, but the challenge remains significant due to evolving techniques such as prompt injection.

For this reason, review the mitigation section below before taking any action with GPTs.

Mitigating Risks: Ensuring Safe Use of Custom GPTs

To mitigate the risks associated with the potential abuse of Custom GPTs in cybersecurity, several strategies can be implemented:

- Ethical Guidelines and Policies

Developing clear ethical guidelines and policies governing the use of Custom GPTs helps to prevent their misuse. These guidelines should cover the scope of acceptable use, data privacy concerns, and measures to prevent the creation of malicious content.

- Access Control and Monitoring

Limiting access to GPTs in some areas and monitoring their usage can help in the early detection of any attempts to misuse these tools. It may be necessary to know for what purpose, and under which account, employees use GPTs, and to ensure that their inputs are anonymized and do not include company-specific details. Whether or not the intent is malicious, your sensitive information needs to be protected.
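As one hedged example of anonymizing inputs, the Python sketch below masks email addresses, IPv4 addresses and a list of company-specific terms before a prompt is sent to a GPT. The `COMPANY_TERMS` values and the `anonymize` helper are hypothetical, and regex-based masking will miss many kinds of sensitive data, so it complements rather than replaces policy and monitoring.

```python
import re
from collections.abc import Iterable

# Hypothetical organization-specific terms; replace them with your own.
COMPANY_TERMS = ("Acme Corp", "acme-internal")

# Generic patterns, applied before the company-specific terms.
PATTERNS = (
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[EMAIL]"),
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "[IP]"),
)


def anonymize(text: str, company_terms: Iterable[str] = COMPANY_TERMS) -> str:
    """Mask emails, IPv4 addresses and company-specific terms in a prompt."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    for term in company_terms:
        # Case-insensitive literal match of each term.
        text = re.sub(re.escape(term), "[ORG]", text, flags=re.IGNORECASE)
    return text
```

For example, `anonymize("Ask john.doe@acme-internal.com about 10.0.0.5 at Acme Corp")` yields `"Ask [EMAIL] about [IP] at [ORG]"`. Emails are masked first so that a company domain inside an address is removed together with the whole address.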

- Continuous Education and Awareness

Regular training and awareness programs for developers, cybersecurity professionals, and users of Custom GPTs can give them the knowledge and skills to identify and respond to potential abuse. In fact, if GPTs are routinely used in your organization, security training on GPT use should be mandatory for all employees.

Conclusion

The integration of Custom GPTs into the realm of cybersecurity represents a significant advancement, offering powerful tools for threat hunting, malware analysis, and enhancing overall digital defense strategies. However, the potential for their misuse poses new challenges and ethical considerations.

That said, we should not fall behind as artificial intelligence technology develops. Our focus must be on leveraging the benefits of Custom GPTs responsibly, ensuring their use is aligned with robust security protocols and ethical guidelines. By doing so, we can strike a balance between innovation and security, harnessing the full potential of AI in cybersecurity while safeguarding against its potential abuse.

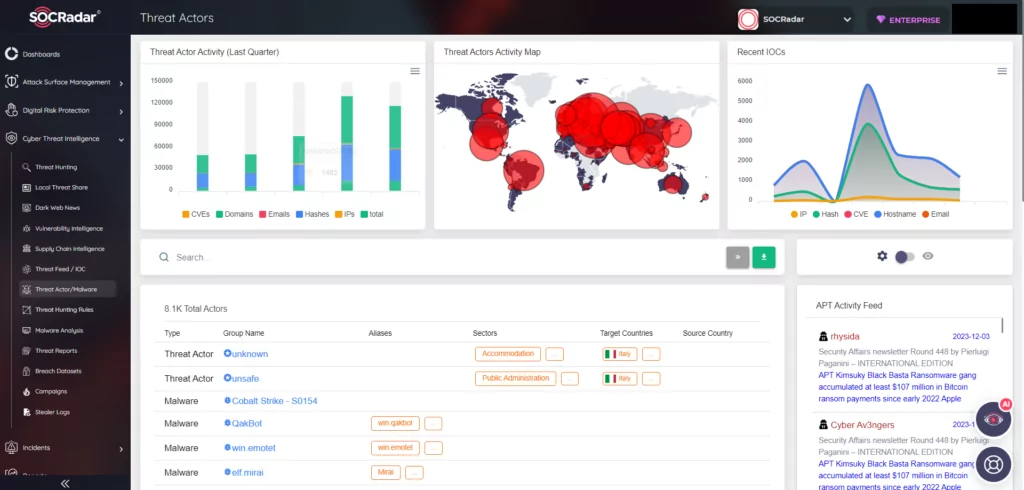

SOCRadar Cyber Threat Intelligence Module, Threat Actors

For this type of integration, a CTI tool such as the SOCRadar Platform can expand your intelligence base and direct you to take proactive measures.

The post Custom GPTs: A Case of Malware Analysis and IoC Analyzing appeared first on SOCRadar® Cyber Intelligence Inc.