In the thrilling world of software architecture and concurrency, we often find ourselves juggling between the urge to maximise efficiency and the need to prevent system meltdowns. Imagine orchestrating a symphony of service calls, each with its own tempo, while making sure none of the performers drop their rhythm. This is where the Bulkhead pattern with rate limiting waltzes into the limelight, promising to keep your services in harmony without breaking a sweat.

Unveiling the Act — The Bulkhead Pattern:

In the grand theatre of software architecture, the Bulkhead pattern stands tall as a safeguard against cascading failures. Imagine a ship with multiple compartments, or “bulkheads,” that prevent a leak in one compartment from sinking the entire vessel. Similarly, in our digital universe, services are isolated into “bulkheads,” ensuring that if one service falters, others can continue to function without skipping a beat. With rate limiting as its dance partner, the Bulkhead pattern adds grace by ensuring each service gets to twirl on the dance floor at its own pace.
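To make the ship analogy concrete, here is a minimal sketch of one way to build a bulkhead with a coroutine `Semaphore`, assuming the kotlinx.coroutines library is available. The `Bulkhead` class, its `maxConcurrent` parameter and the `peakConcurrency` helper are hypothetical names chosen for illustration; they are not taken from the implementation described in this post.

```kotlin
import kotlinx.coroutines.async
import kotlinx.coroutines.awaitAll
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.delay
import kotlinx.coroutines.runBlocking
import kotlinx.coroutines.sync.Semaphore
import kotlinx.coroutines.sync.withPermit
import java.util.concurrent.atomic.AtomicInteger

// Hypothetical bulkhead: at most `maxConcurrent` calls may be in flight for one service.
class Bulkhead(maxConcurrent: Int) {
    private val permits = Semaphore(maxConcurrent)
    private val inFlight = AtomicInteger(0)
    val peakInFlight = AtomicInteger(0) // tracked only so the sketch can be observed

    suspend fun <T> execute(block: suspend () -> T): T = permits.withPermit {
        val now = inFlight.incrementAndGet()
        peakInFlight.updateAndGet { maxOf(it, now) }
        try {
            block()
        } finally {
            inFlight.decrementAndGet()
        }
    }
}

// Fires `calls` simulated requests at one bulkhead and returns the peak concurrency seen.
suspend fun peakConcurrency(bulkhead: Bulkhead, calls: Int): Int = coroutineScope {
    (1..calls).map { async { bulkhead.execute { delay(20) } } }.awaitAll()
    bulkhead.peakInFlight.get()
}

fun main() = runBlocking {
    // Each service gets its own compartment, so a flood of calls to A cannot starve B.
    val serviceA = Bulkhead(maxConcurrent = 2)
    val serviceB = Bulkhead(maxConcurrent = 3)
    println("Peak in-flight calls for service A: ${peakConcurrency(serviceA, calls = 10)}")
    println("Peak in-flight calls for service B: ${peakConcurrency(serviceB, calls = 10)}")
}
```

Because every service holds its own permits, a slow or failing service can only exhaust its own compartment. The peak for each service should never exceed its configured limit.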

Kotlin Choreography — The Code Implementation:

Pull out your Kotlin dancing shoes as we embark on crafting this elegant routine. In a virtual dance studio, our performers are coroutines. Using Kotlin’s coroutine magic, we line up three services and launch their calls into the air like synchronised trapeze artists. With a touch of delay and a sprinkle of async, we let each service breathe within its own tempo. The result? A mesmerising performance where services elegantly balance between speed and restraint.

Setting the Tempo — Taming the TPS:

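Now to the heart of the matter: regulating the Transactions Per Second (TPS) for each service. Just like giving each service a metronome, we calculate the minimum interval each service should wait between its steps: for a cap of maxTps calls per second, that is 1000 / maxTps milliseconds (tpsCapMillis in the code). If a call finishes sooner than that, we wait out the remainder before making the next one. This synchronisation keeps our calls from stepping on the external service's toes, preventing a chaotic collision of requests.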

    val startTime = System.currentTimeMillis()

    // The slow external call starts
    try {
        val someResponse = client.getDetailsFromClientA(someRequest)
    } catch (e: Exception) {
        // Perform error handling here
        println("Oops! Something crashed.")
    }
    // The slow external call ends

    // If the call finished faster than the TPS interval, wait out the remainder
    val elapsedTime = System.currentTimeMillis() - startTime
    val remainingTime = tpsCapMillis - elapsedTime
    if (remainingTime > 0) {
        delay(remainingTime.toLong())
    }

Limiting our service calls this way ensures the external services are not pushed into meltdown.
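To put numbers on the metronome, the sketch below turns a TPS cap into a per-call interval and works out how long a call still has to wait. The function names `intervalMillis` and `remainingWaitMillis` are illustrative assumptions, and rounding the interval up is my own choice so that the cap is never exceeded.

```kotlin
import kotlin.math.ceil

// Minimum spacing between two calls for a given cap, rounded up to whole milliseconds.
fun intervalMillis(maxTps: Double): Long {
    require(maxTps > 0.0) { "maxTps must be positive" }
    return ceil(1000.0 / maxTps).toLong()
}

// Time still to wait after a call that took `elapsedMillis`; never negative.
fun remainingWaitMillis(interval: Long, elapsedMillis: Long): Long =
    (interval - elapsedMillis).coerceAtLeast(0L)

fun main() {
    val interval = intervalMillis(maxTps = 4.0)
    println(interval)                             // 250
    println(remainingWaitMillis(interval, 100L))  // 150: fast call, wait out the rest
    println(remainingWaitMillis(interval, 400L))  // 0: slow call, no extra wait
}
```

A call that is already slower than the interval needs no additional delay, which is why the wait is clamped at zero.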

Taking inspiration from nature, where we have different streams flowing at their own pace, our application can efficiently rate the TPS of each external service interaction for optimal performance. Image by wirestock on Freepik

Taking inspiration from nature, where we have different streams flowing at their own pace, our application can efficiently rate the TPS of each external service interaction for optimal performance. Image by wirestock on FreepikThe Batching Ballet — Dance of Efficiency:

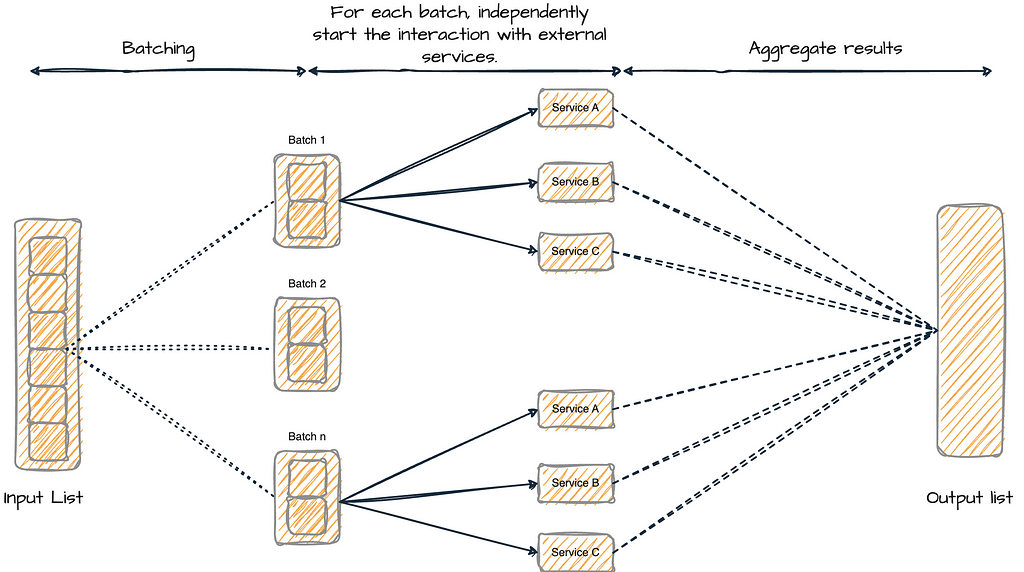

Enter the beauty of batching — a clever balletic move to optimise efficiency. Instead of chaotically sending each request individually, we bring the dancers together in small groups. Each group elegantly glides through their moves, minimising the number of API calls per service. This choreography lets the stage breathe while keeping the audience enthralled.

And yeah, this assumes the external service can indeed support batching, else this bit will go for a toss!
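As a small illustration of the grouping step, the sketch below splits a flat list of items into batches with the standard library's `chunked`. The `ItemData` class here is a hypothetical stand-in with a single field, and `toBatches` and `batchSize` are names I have assumed; the right batch size depends on what the external service accepts.

```kotlin
// Hypothetical payload type standing in for the ItemData used in this post.
data class ItemData(val rollNo: String)

// Splits the full item list into batches of at most `batchSize` items.
fun toBatches(items: List<ItemData>, batchSize: Int): List<List<ItemData>> =
    items.chunked(batchSize)

fun main() {
    val items = (1..7).map { ItemData("R$it") }
    val batches = toBatches(items, batchSize = 3)
    // Seven individual requests become three batched calls.
    println(batches.map { it.size }) // [3, 3, 1]
}
```

The resulting `List<List<ItemData>>` has the same shape as the `itemDataList` parameter in the service code that follows.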

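Here’s the full code for one service. It sends the batches to its external client one after another, pacing each call to stay under the TPS cap, so that the caller can run several such services concurrently: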

    package my.awesome.kotlin.code.services

    import kotlinx.coroutines.CancellationException
    import kotlinx.coroutines.delay
    // import ...

    class ServiceA {
        private val client: ClientA = ClientA()

        suspend fun getDetailsFromClientA(
            itemDataList: List<List<ItemData>>, maxTps: Double
        ): Map<String, Pair<Double, Char>> {
            // Minimum time each batch call should occupy, e.g. 250 ms for 4 TPS
            val tpsCapMillis = 1000.0 / maxTps
            val clientAResponseMap = mutableMapOf<String, Pair<Double, Char>>()
            println("Fetching data from External Client A")
            try {
                for (batchPayload in itemDataList) {
                    val startTime = System.currentTimeMillis()
                    var result = emptyMap<String, Pair<Double, Char>>()
                    try {
                        val batchResponse = client.getDetailsFromClientA(batchPayload)
                        result = populateFromServiceA(batchResponse)
                    } catch (e: CancellationException) {
                        // Never swallow coroutine cancellation
                        throw e
                    } catch (e: Exception) {
                        // TODO: Perform error handling here; for now a failed batch is skipped
                        println("Error occurred: ${e.message}")
                    }
                    clientAResponseMap.putAll(result)

                    // Wait out the rest of the interval so we stay under maxTps
                    val elapsedTime = System.currentTimeMillis() - startTime
                    val remainingTime = tpsCapMillis - elapsedTime
                    if (remainingTime > 0) {
                        delay(remainingTime.toLong())
                    }
                }
            } catch (e: CancellationException) {
                throw e
            } catch (e: Exception) {
                // TODO: Perform error handling here:
                println("Error occurred: ${e.message}")
            } finally {
                // Note: once closed, this ServiceA instance may not be reusable
                client.close()
            }
            // Returning the consolidated map
            println("Data fetched from External Client A for ${clientAResponseMap.size} items")
            return clientAResponseMap
        }

        private fun populateFromServiceA(
            clientResponseA: ClientResponseA
        ): Map<String, Pair<Double, Char>> {
            // Creating a map here of the necessary details.
            // Alter this implementation as per your own requirement.
            return clientResponseA.response.associate { it.rollNo to Pair(it.chemistryMarks, it.physicsMarks) }
        }
    }

The Grand Finale — Collating the Crescendo:

As the dance of requests concludes, the spotlight shifts to our final act — collation. Just as the branches of a tree converge to form its trunk, we gather the results from each service. Here, we witness the genius of orchestration, as the distinct voices of each service blend harmoniously into the grand crescendo of the final response list.
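One simple way to picture the collation step is merging the per-service maps into a single result. This is a sketch under assumptions: the `collate` function and the `Details` alias are hypothetical, and letting a later service overwrite an earlier one on a duplicate key is my own choice, not something the original design specifies.

```kotlin
// Same value shape as the maps returned by the service code in this post.
typealias Details = Pair<Double, Char>

// Merges per-service maps into one; on a duplicate key the later map wins (an assumption).
fun collate(results: List<Map<String, Details>>): Map<String, Details> {
    val merged = mutableMapOf<String, Details>()
    for (serviceResult in results) {
        merged.putAll(serviceResult)
    }
    return merged
}

fun main() {
    val fromA = mapOf("R1" to Pair(91.5, 'A'))
    val fromB = mapOf("R2" to Pair(78.0, 'B'), "R1" to Pair(60.0, 'C'))
    println(collate(listOf(fromA, fromB)).keys) // [R1, R2]
}
```

If overwriting is not acceptable for your data, the merge rule is the one line to change, for example by keeping the first value or combining both.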

Encore with Async — Merging with Efficiency:

Picture a branch-and-merge strategy, where the branches are asynchronous calls, one per service, and the merge is the final response. This strategy delivers the best of both worlds: the efficiency of async calls and the discipline of pacing each API at its own rate. The curtain falls as our well-coordinated ballet of concurrency and orchestration leaves the audience amazed.
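The branch-and-merge idea can be sketched with `async` and `awaitAll` from kotlinx.coroutines. Everything service-specific here is an assumption for illustration: `fetchFrom` is a hypothetical stand-in that only simulates latency with `delay`, and `fetchAll` is a name I have made up for the orchestration step.

```kotlin
import kotlinx.coroutines.async
import kotlinx.coroutines.awaitAll
import kotlinx.coroutines.coroutineScope
import kotlinx.coroutines.delay
import kotlinx.coroutines.runBlocking

// Hypothetical stand-in for one external service call; `latencyMillis` simulates its speed.
suspend fun fetchFrom(service: String, latencyMillis: Long): Map<String, Double> {
    delay(latencyMillis)
    return mapOf(service to latencyMillis.toDouble())
}

// Branch: one async call per service. Merge: await them all and combine the maps.
suspend fun fetchAll(latencies: Map<String, Long>): Map<String, Double> = coroutineScope {
    latencies
        .map { (service, latency) -> async { fetchFrom(service, latency) } }
        .awaitAll()
        .fold(emptyMap<String, Double>()) { merged, part -> merged + part }
}

fun main() = runBlocking {
    val started = System.nanoTime()
    val merged = fetchAll(mapOf("A" to 100L, "B" to 200L, "C" to 300L))
    val elapsedMillis = (System.nanoTime() - started) / 1_000_000
    // Elapsed time tracks the slowest branch rather than the sum of all three.
    println("Merged ${merged.keys} in about $elapsedMillis ms")
}
```

Using `coroutineScope` means that if one branch fails, the sibling branches are cancelled and the failure surfaces to the caller, which is usually what you want when the final response needs every service.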

Conclusion — A Standing Ovation for Concurrency:

As we bid adieu to our bulkhead-inspired ballet, we’re left with a standing ovation for concurrency’s intricate artistry. The Bulkhead pattern with rate limiting and Kotlin’s coroutines waltz together to ensure that our services, like seasoned dancers, perform flawlessly while adhering to their individual rhythms. Remember, in the realm of software, just like in a theatre, it’s the balance of precision and creativity that truly steals the show.

Chronicles of Controlled Chaos: Crafting Bulkhead Carnival with Concurrency in Kotlin was originally published in Walmart Global Tech Blog on Medium.