Building Question-Answering Chatbots on private Knowledge bases using RAG

What if I told you that you can build your own GPT, customized on those lengthy documents that are tedious to read, and use it to find simple answers?

Sure, but wouldn’t designing something like a GPT be hard and burn a hole in my pocket?

No, it wouldn’t!

In this blog, we outline an approach for building chatbots that are cost-effective (you don’t even need GPUs!), accurate, and keep your data safe by never leaking it to the internet.

Introduction

We have all heard about GPTs, haven’t we? Whether you want answers to basic questions, an itinerary for your next travel destination, or recipes for your favourite dishes, GPTs come to your rescue. But what exactly happens behind the scenes?

GPT stands for Generative Pretrained Transformer, a family of AI models that belongs to the class known as Large Language Models (LLMs). LLMs are called Large because they have billions of parameters (think of an algebraic equation with billions of variables; the coefficients of these variables are the parameters). For example, GPT-3.5 is trained on 570GB of data from books, Wikipedia, web text etc. (Reference). Essentially, the model has seen a substantial amount of world data and encoded that knowledge into its parameters, which is why GPTs perform so well that they are considered an alternative to Google Search.

However, GPTs can only answer questions based on the data they have seen, i.e., the data available before training was completed, a point known as the cut-off date. If a user asks about events that happened after the cut-off date, GPTs can fabricate answers that are wrong and misleading, a phenomenon referred to as hallucination in the context of LLMs.

What if we need a chatbot built on personal data? Should we expose our sensitive data to OpenAI APIs to fit its models?

What if we need a custom chatbot that has no cut-off date? What if we want to build a chatbot that lists top news items for the day?

How do we ensure that our chatbot is robust to hallucinations?

Additionally, retraining models frequently is nearly impossible. Training LLMs from scratch requires months and an enormous amount of compute that costs millions of dollars, not to mention the per-token input and output cost of using GPTs after finetuning.

How do we build our own GPT that addresses the above issues? This is where Retrieval Augmented Generation (RAG) enabled customized chatbots come into the picture. Before diving into the steps of building these, let’s first understand their key components.

Retrieval Augmented Generation (RAG) for building Customized Chatbots

Let’s take an analogy!

Say my friend asks me about Cricket World Cup 2023. If I don’t know about it, I will try to read about it online or watch some videos on YouTube. Essentially, I will try to refer to some sources to gain relevant information about the question being asked.

Similarly, as users, we pass our query to LLMs through prompts. But what if we also pass reading material (or context) that the LLM chatbot can refer to when answering the question? In the example of listing news items for the day, if we pass the model a link which it can use to reference news items, the model can paraphrase them according to the user’s query. This paradigm of building chatbots is called Retrieval Augmented Generation, abbreviated as RAG, since we augment the generated response with retrieved information.

RAG pipelines typically consist of two models:

1. Embedding model: It extracts embeddings for document chunks and stores them in the Vector Database for retrieval during inference. We have used the BAAI/bge-small-en model, by the Beijing Academy of Artificial Intelligence, downloaded from HuggingFace. For the Vector Database, we are using the FAISS (Facebook AI Similarity Search) Index.

2. Language model: It generates the response from the prompt, in which the query is augmented with the retrieved context. We are using LLaMA 2 13B, downloaded from the official website.

In the following section, we will understand the approach behind building a RAG-based chatbot. The approach is generic and applies to Q/A chatbots for different use cases. We only need to change the documents, i.e., swap in the document store for the use case at hand, while everything else remains the same.

Approach

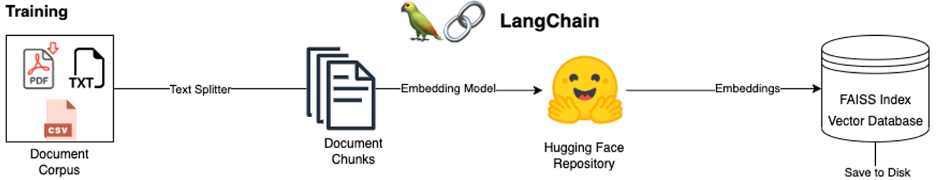

The approach is divided into two sections: Training and Inference.

Training:

This step aims to build the Vector Database that serves as a model artifact. We are not training or finetuning a model in this step.

1. Building Document Store: Putting all files (CSV/TXT/PDF) in a single folder.

2. Splitting Documents: Just as we eat our food in bites rather than gulping down everything on the plate at once, a model also ‘eats’ its data in bites. Hence, we must split documents into chunks for the model to digest. For instance, 10 documents might be split into 72 chunks; the exact count depends on the document lengths and the chunk size.

3. Generating Chunk Embeddings: The chunks generated are converted into embeddings, one corresponding to each chunk. We will use an embedding model, typically lighter than the actual language model, to convert chunks into embeddings.

4. Storing embeddings into Vector Database: The embeddings are stored in the FAISS (Facebook AI Similarity Search) Index on disk. This serves as a model artifact generated during the Training phase.
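To make the splitting step concrete, here is a minimal sketch of overlapping chunking in plain Python. It is an illustration under simplifying assumptions, not the splitter used later in this blog: the function name `split_into_chunks` is hypothetical, and it cuts at fixed character offsets, whereas LangChain's RecursiveCharacterTextSplitter first tries to break on separators such as paragraphs and newlines.

```python
def split_into_chunks(text, chunk_size=1000, chunk_overlap=200):
    """Split text into fixed-size character windows that overlap.

    Hypothetical helper for illustration only; real splitters usually
    try to cut on natural boundaries instead of raw offsets.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# Toy document: one sentence repeated many times.
document = "RAG pipelines retrieve context before generating an answer. " * 50
chunks = split_into_chunks(document, chunk_size=200, chunk_overlap=50)
print(len(document), "characters ->", len(chunks), "chunks")
```

The overlap means the end of one chunk is repeated at the start of the next, so a sentence that straddles a boundary still appears whole in at least one chunk.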

Figure 1: Training Pipeline

Inference:

The model is wrapped in a Front-End application and deployed. When a user types a query, the following steps occur:

1. Loading Index to memory from Disk: The index (the model artifact) is loaded from disk into memory.

2. Converting Query to Query Embedding: The query is passed through the embedding model to generate the Query Embedding. The model used here must be the same embedding model that created the chunk embeddings, because the query embedding is compared with the chunk embeddings in subsequent steps. Hence, they need to be in the same vector space, i.e., they need to come from the same tokenizer and embedding model, with the same vocabulary (collection of possible tokens).

3. Finding relevant chunks using Similarity Search: Using an Approximate Nearest Neighbors (ANN) algorithm such as Annoy, the query embedding is compared against the chunk embeddings using a similarity measure such as Cosine Similarity. The chunks are then ranked in decreasing order of similarity score, and the Top N results are taken as the N Nearest Neighbours. These chunks serve as the context for the query.

4. Defining Prompt Template: Such as “Given query {query} and context {context}, answer the query. If you don’t know the answer, just say you don’t know, don’t try to make up an answer.”
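The similarity search and prompt steps above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: the three-dimensional embeddings are made up by hand rather than produced by an embedding model, the helper names (`cosine_similarity`, `top_n_chunks`, `build_prompt`) are hypothetical, and the search is an exact brute-force scan instead of an approximate index such as FAISS or Annoy.

```python
import math

PROMPT_TEMPLATE = (
    "Given query {query} and context {context}, answer the query. "
    "If you don't know the answer, just say you don't know, "
    "don't try to make up an answer."
)


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal dimension."""
    if len(a) != len(b):
        # Query and chunk embeddings must come from the same model.
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_n_chunks(query_embedding, chunk_embeddings, chunks, n=2):
    """Rank chunks by similarity to the query and keep the best n."""
    scored = [
        (cosine_similarity(query_embedding, emb), chunk)
        for emb, chunk in zip(chunk_embeddings, chunks)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [chunk for _, chunk in scored[:n]]


def build_prompt(query, context_chunks):
    """Fill the prompt template with the query and retrieved context."""
    return PROMPT_TEMPLATE.format(query=query, context="\n".join(context_chunks))


# Hand-made toy embeddings (assumption: a real pipeline gets these from the model).
chunks = [
    "The Final happened on 19th November 2023.",
    "Pasta should be cooked in salted water.",
    "Australia won the cup.",
]
chunk_embeddings = [[0.9, 0.1, 0.0], [0.0, 0.1, 0.9], [0.8, 0.3, 0.1]]
query = "Who won the Cricket World Cup 2023?"
query_embedding = [1.0, 0.2, 0.0]

context = top_n_chunks(query_embedding, chunk_embeddings, chunks, n=2)
print(build_prompt(query, context))
```

The unrelated cooking chunk scores lowest and is left out, so only the two cricket chunks reach the prompt. A real vector database does the same ranking, but avoids scanning every chunk.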

Figure 3: Poetry [TOML] file to specify dependencies

Step 1: Installing Dependencies and setting environment

To run the code, we will first set up a poetry environment.

1. Initiate a poetry environment: poetry init

2. Configure pyproject.toml to include the following dependencies:

    [tool.poetry.dependencies]
    python = ">=3.8.1,<4.0"
    langchain = "^0.0.272"
    llama-cpp-python = "^0.1.78"
    sentence-transformers = "^2.2.2"
    pdfminer-six = "^20221105"
    faiss-cpu = "^1.7.4"

    [tool.poetry.group.dev.dependencies]
    flake8 = "^3.9.2"
    flake8-docstrings = "^1.6.0"
    isort = "^5.9.3"
    black = "21.5b2"
    click = "8.0.4"
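Once the dependencies are installed (for example with `poetry install`), a quick sanity check can confirm that the environment is ready before running the later steps. The sketch below is an optional helper, not part of the original setup: the function name `find_missing` is hypothetical, and the mapping from package names to import names is an assumption based on how these packages are usually imported.

```python
from importlib.util import find_spec

# Assumed import name for each package listed in pyproject.toml.
REQUIRED_MODULES = {
    "langchain": "langchain",
    "llama-cpp-python": "llama_cpp",
    "sentence-transformers": "sentence_transformers",
    "pdfminer-six": "pdfminer",
    "faiss-cpu": "faiss",
}


def find_missing(required):
    """Return the package names whose import module cannot be found."""
    return [
        package
        for package, module in required.items()
        if find_spec(module) is None
    ]


missing = find_missing(REQUIRED_MODULES)
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All dependencies are importable.")
```

Run it inside the poetry environment (for example via `poetry run python`) so that it checks the right interpreter.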

Step 2: Asking a question on original LLaMA-2 model

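    from langchain.callbacks.manager import CallbackManager
    from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
    from langchain.llms import LlamaCpp

    # Load the quantized LLaMA-2 13B chat model for CPU-only inference.
    llm = LlamaCpp(
        model_path="models/llama-2-13b-chat.ggmlv3.q4_0.bin",
        n_gpu_layers=0,  # keep every layer on the CPU
        n_batch=512,  # number of prompt tokens processed per batch
        n_ctx=2048,  # context window size in tokens
        f16_kv=True,  # half-precision key/value cache
        # Stream generated tokens to stdout as they are produced.
        callback_manager=CallbackManager([StreamingStdOutCallbackHandler()]),
        verbose=False,
    )

    if __name__ == "__main__":
        query = input("Enter a query: ")
        print("Generating response...")
        response = llm(query)
        print(response)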
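Why can a 13B model run without a GPU? A rough back-of-the-envelope estimate of the weight file size helps. The sketch below is only an approximation under stated assumptions: 13 billion is a rounded parameter count, and 4.5 bits per weight is an assumed figure for 4-bit quantization once the per-block scaling overhead is included. The function name `estimate_model_size_gb` is hypothetical.

```python
def estimate_model_size_gb(n_parameters, bits_per_weight):
    """Approximate size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_parameters * bits_per_weight / 8 / 1e9


N_PARAMETERS = 13e9  # rounded parameter count (assumption)

fp16_gb = estimate_model_size_gb(N_PARAMETERS, 16)   # half-precision weights
q4_gb = estimate_model_size_gb(N_PARAMETERS, 4.5)    # assumed 4-bit quantization cost

print(f"16-bit weights: about {fp16_gb:.1f} GB")
print(f"4-bit quantized weights: about {q4_gb:.1f} GB")
```

Under these assumptions, quantization shrinks the weights from roughly 26 GB to roughly 7.3 GB, which fits in the RAM of many ordinary machines.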

Step 3: Building FAISS Index

    import os

    from langchain.document_loaders import PDFMinerLoader
    from langchain.embeddings import HuggingFaceEmbeddings
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain.vectorstores import FAISS
    from tqdm import tqdm

    if __name__ == "__main__":
        data_path = "document_store_data"

        # Load every PDF in the document store.
        corpus = []
        for f in tqdm(os.listdir(data_path)):
            if f.lower().endswith(".pdf"):
                file_path = os.path.join(data_path, f)
                loader = PDFMinerLoader(file_path)
                pages = loader.load()
                corpus += pages

        # Split the documents into overlapping chunks.
        text_splitter = RecursiveCharacterTextSplitter(
            chunk_size=1000,
            chunk_overlap=200,
        )
        texts = text_splitter.split_documents(corpus)

        print("Number of documents: ", len(corpus))
        print("Number of chunks: ", len(texts))
        print(texts[0].page_content)

        # Load the embedding model.
        embed_model = HuggingFaceEmbeddings(model_name="BAAI/bge-small-en")

        # Embed the chunks, build the FAISS index, and save it to disk.
        db = FAISS.from_documents(texts, embed_model)
        db.save_local("faiss_index")

Step 4: Asking a question on LLaMA-2 with RAG

    from langchain.callbacks.manager import CallbackManager
    from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler
    from langchain.chains import ConversationalRetrievalChain
    from langchain.embeddings import HuggingFaceEmbeddings
    from langchain.llms import LlamaCpp
    from langchain.memory import ConversationBufferMemory
    from langchain.vectorstores import FAISS

    # Same CPU-only LLaMA-2 setup as in Step 2.
    llm = LlamaCpp(
        model_path="models/llama-2-13b-chat.ggmlv3.q4_0.bin",
        n_gpu_layers=0,
        n_batch=512,
        n_ctx=2048,
        f16_kv=True,
        callback_manager=CallbackManager([StreamingStdOutCallbackHandler()]),
        verbose=True,
    )

    if __name__ == "__main__":
        # Load the index with the same embedding model used to build it.
        embed_model = HuggingFaceEmbeddings(model_name="BAAI/bge-small-en")
        db = FAISS.load_local("faiss_index", embed_model)

        # Keep the chat history so follow-up questions have context.
        memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)

        # Chain that retrieves relevant chunks and passes them to the LLM.
        qa = ConversationalRetrievalChain.from_llm(
            llm=llm, retriever=db.as_retriever(), memory=memory
        )

        query = input("Enter a query: ")
        print("Generating response...")
        response = qa.run(query)
        print(response)

Results

Extending the earlier mentioned example, let’s compare LLaMA-2 outputs with and without RAG.

One of the biggest highlights of 2023 was the Cricket World Cup, but does LLaMA-2 know about it?

Let’s find out!

On asking the Open-Source LLaMA-2 about Cricket World Cup 2023, it says that the Final is scheduled for 28th April 2023, which is incorrect. See below:

Figure 3: LLaMA-2 output

However, if we pass the model information from the page 2023 Cricket World Cup, it can retrieve the relevant details and answer correctly that the Final happened on 19th November 2023. It is also able to tell us that Australia won the cup. See below:

Figure 4: LLaMA-2 output with RAG

Conclusion

In this blog, we looked at the limitations of GPTs with private knowledge bases and the need to create customized GPTs. Through an example, we saw how Retrieval Augmented Generation (RAG)-based models can accurately answer questions on events after the model’s cut-off date (LLaMA 2 was last trained in July 2023) by referring to the knowledge base. This demonstrates how we can customize existing open-source LLMs for any lengthy documentation they have not seen, with limited compute resources and without exposing our data to external APIs.

Tags: Retrieval Augmented Generation (RAG), Generative AI, Chatbots, LLaMA, Natural Language Processing, LLMs

Sources: Icons are taken from The Noun Project and Google, and images are drawn using Draw.io

Build your own GPT (BYO-GPT) was originally published in Walmart Global Tech Blog on Medium.