Photo Credit: https://pixabay.com/vectors/presentation-statistic-boy-1454403/

Table of Contents

- Overview
- Understanding of Azure Cosmos
  - Billing and Request Units (RUs)
  - Physical Partition
- Optimization Techniques
  - Manual vs. Auto scale
  - Programmatically Allocate RUs in Manual Mode
  - Optimising Document Size
  - Optimise Physical Partition Usage
- Conclusion

Overview

In the ever-evolving world of app development, fully managed NoSQL and relational database services play an important role in modern application development. With automatic management, updates and patching, they take the burden of database administration off developers’ shoulders. These platforms also offer automatic scaling options and capacity management to ensure resources are efficiently allocated.

Azure Cosmos DB is one such cloud database service. One of its key features is support for MongoDB, making it an attractive choice for those familiar with that database system. This guide delves into the intricacies of Azure Cosmos DB, helping you understand its billing, Request Units (RUs) and capacity management options, and offers tips for optimising document size and physical partition usage.

Understanding of Azure Cosmos

Billing and Request Units (RUs)

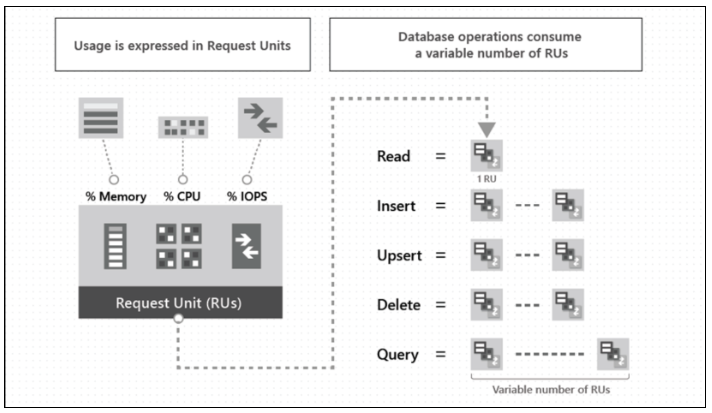

- Azure Cosmos DB billing is based on the throughput you specify in Request Units per second (RU/s). RUs represent the compute, memory and IO resources needed to execute database operations and are billed across all selected Azure regions for your Azure Cosmos DB account. You are billed for compute, storage and bandwidth usage.

- The cost for a point read operation (fetching a single item by its ID and partition key value) for a 1-KB item is one RU (Request Unit).

- Write operations require 5.5 times the RUs of a read, and UPSERT operations require 11 times.

- The following image shows the high-level idea of RUs:

Photo Credit: https://learn.microsoft.com/en-us/azure/cosmos-db/media/request-units/request-units.png
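The ratios above can be turned into a rough throughput estimate. The following is a minimal sketch, assuming 1-KB items and the multipliers quoted in this article (they are not an official rate card); the function name and the sample workload numbers are hypothetical.

```python
# Multipliers as stated in this article for 1-KB items (an assumption, not a rate card).
POINT_READ_RU = 1.0
WRITE_RU = 5.5 * POINT_READ_RU
UPSERT_RU = 11 * POINT_READ_RU


def required_ru_per_second(reads: float, writes: float, upserts: float) -> float:
    """Estimate the RU/s a workload needs from its per-second operation counts."""
    return reads * POINT_READ_RU + writes * WRITE_RU + upserts * UPSERT_RU


if __name__ == "__main__":
    # Hypothetical workload: 500 reads, 100 writes and 20 upserts per second.
    print(required_ru_per_second(reads=500, writes=100, upserts=20))
```

Real RU charges also depend on item size, indexing and query shape, so treat the result as a starting point and compare it with the request charge reported by the service.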

Physical Partition

- Azure Cosmos DB uses partitioning to scale containers based on performance needs.

- The items in a container are divided into distinct subsets called logical partitions. Logical partitions are formed based on the value of a partition key that is associated with each item in a container. All the items in a logical partition have the same partition key value.

- Internally, one or more logical partitions are mapped to a single physical partition. Typically smaller containers have many logical partitions but they only require a single physical partition. Unlike logical partitions, physical partitions are an internal implementation of the system and Azure Cosmos DB entirely manages physical partitions.

- Each physical partition supports a maximum of 50 GB of storage and 10,000 RU/s.

- New physical partitions are created when scaling exceeds these limits.

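- Physical partitions are not merged or reduced automatically once created, even if RUs decrease or data is deleted.

- Because provisioned RU/s are distributed evenly across physical partitions, more partitions mean fewer RU/s available to each partition, which can force you to provision more total RU/s.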

- If a collection scales beyond the maximum limit, Cosmos will divide it and create new physical partitions.
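The two per-partition limits above imply a lower bound on the number of physical partitions. Here is a minimal sketch of that arithmetic, assuming the 50 GB and 10,000 RU/s limits quoted in this article; the function name is hypothetical, and the real count is managed by Azure Cosmos DB and can be higher.

```python
import math

MAX_STORAGE_GB = 50            # per physical partition, as stated above
MAX_RU_PER_PARTITION = 10_000  # per physical partition, as stated above


def min_physical_partitions(storage_gb: float, ru_per_second: int) -> int:
    """Return the smallest partition count that satisfies both limits."""
    by_storage = math.ceil(storage_gb / MAX_STORAGE_GB)
    by_throughput = math.ceil(ru_per_second / MAX_RU_PER_PARTITION)
    return max(1, by_storage, by_throughput)


if __name__ == "__main__":
    print(min_physical_partitions(storage_gb=80, ru_per_second=20_000))
    print(min_physical_partitions(storage_gb=80, ru_per_second=30_000))
```

Whichever limit is hit first (storage or throughput) drives the count, which is why raising RU/s alone can trigger a split.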

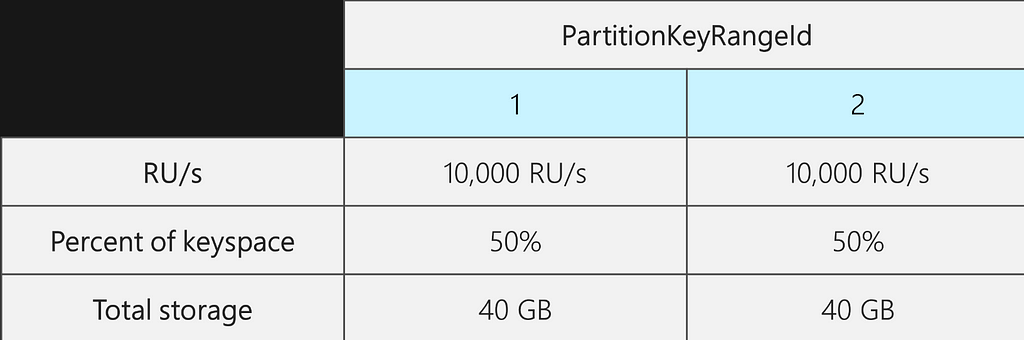

- Let us take an example where we have an existing container with 2 physical partitions, 20,000 RU/s and 80 GB of data.

Photo Credit: https://learn.microsoft.com/en-us/azure/cosmos-db/media/scaling-provisioned-throughput-best-practices/diagram-2-uneven-partition-split.png

- Now, suppose we want to increase our RU/s from 20,000 RU/s to 30,000 RU/s.

- If we simply increase the RU/s to 30,000 RU/s, only one of the partitions will be split. After the split, we will have:

- One partition that contains 50% of the data (this partition was not split)

- Two partitions that contain 25% of the data each (these are the resulting child partitions from the parent that was split)

- Because Azure Cosmos DB distributes RU/s evenly across all physical partitions, each physical partition will still get 10,000 RU/s. However, we now have a skew in storage and request distribution.

Photo Credit: https://learn.microsoft.com/en-us/azure/cosmos-db/media/scaling-provisioned-throughput-best-practices/diagram-2-uneven-partition-split.png

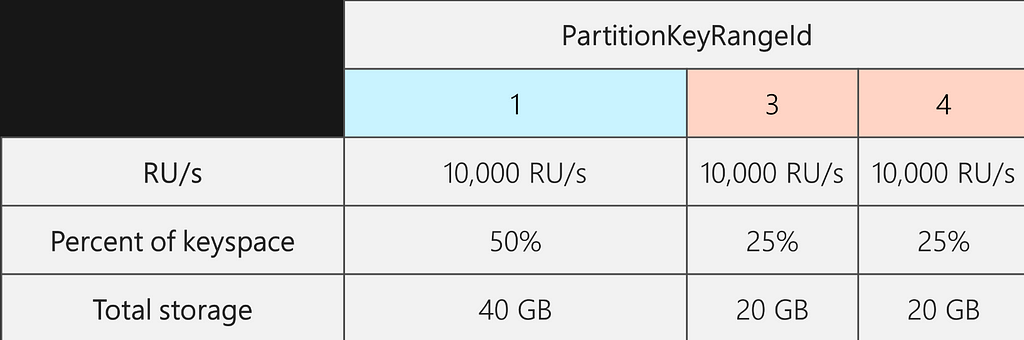

- To maintain an even storage distribution, we can first scale up our RU/s to ensure every partition splits. Then, we can lower our RU/s back down to the desired state.

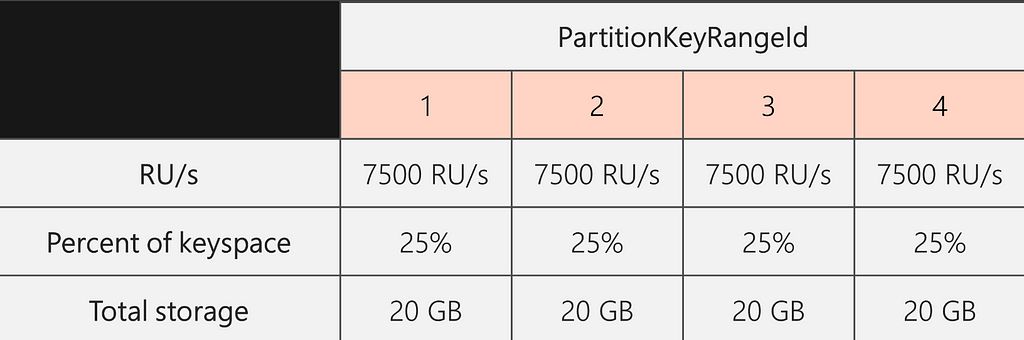

- So, if we start with two physical partitions, to guarantee that the partitions are even post-split, we need to set RU/s such that we will end up with four physical partitions. To achieve this, we will first set RU/s = 4 * 10,000 RU/s per partition = 40,000 RU/s. Then, after the split completes, we can lower our RU/s to 30,000 RU/s.

- As a result, we see in the following diagram that each physical partition gets 30,000 RU/s / 4 = 7500 RU/s to serve requests for 20 GB of data. Overall, we maintain even storage and request distribution across partitions.

Photo Credit: https://learn.microsoft.com/en-us/azure/cosmos-db/media/scaling-provisioned-throughput-best-practices/diagram-3-even-partition-split.png
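The scale-up-then-scale-down approach in this example can be sketched as a small calculation. This is a minimal sketch that generalises the single worked example above by assuming every partition splits in two per round; the function name and return keys are hypothetical.

```python
MAX_RU_PER_PARTITION = 10_000  # per physical partition, as stated in this article


def even_split_plan(current_partitions: int, target_ru: int) -> dict:
    """Work out a temporary RU/s setting that makes every partition split."""
    partitions = current_partitions
    # Assumption: each round of splitting doubles every partition, keeping them even.
    while partitions * MAX_RU_PER_PARTITION < target_ru:
        partitions *= 2
    split_needed = partitions != current_partitions
    return {
        "partitions_after_split": partitions,
        # RU/s to set first so that all partitions split; None if no split is needed.
        "temporary_ru": partitions * MAX_RU_PER_PARTITION if split_needed else None,
        # Even share per partition once RU/s is lowered to the target.
        "final_ru_per_partition": target_ru / partitions,
    }


if __name__ == "__main__":
    print(even_split_plan(current_partitions=2, target_ru=30_000))
```

For the example above, the plan is four partitions, a temporary setting of 40,000 RU/s and 7,500 RU/s per partition after lowering to 30,000 RU/s.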

Optimization Techniques

The Optimization Techniques discussed here provide different strategies to manage capacity in Azure Cosmos DB, reduce costs and improve efficiency. These include selecting the correct capacity management option, programmatically allocating RUs, optimising document size, splitting collections, managing physical partitions, and periodically deleting stale data. Each of these techniques is detailed below.

Manual vs. Auto scale: Choosing the Right Capacity Management Option

Azure Cosmos DB offers two capacity management options for provisioned throughput: Manual and Auto scale mode.

Manual (Standard)

- In this mode, you provision a fixed RU/s, which remains static over time unless you manually adjust it. You pay for what you have configured, regardless of actual consumption. This option is ideal for workloads with steady or predictable traffic.

- The formula for calculating cost is {provisioned RU} * 0.00008 * {number of hours}.

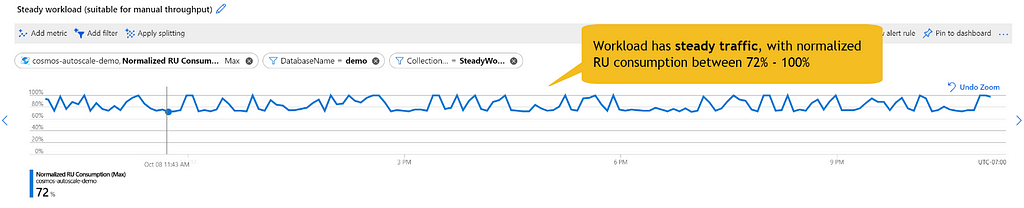

- Graph depicts stable traffic:

Photo Credit: https://learn.microsoft.com/en-us/azure/cosmos-db/media/how-to-choose-offer/steady_workload_use_manual_throughput.png

Auto Scale

- Auto scale allows you to set a maximum RU/s, which the system will not exceed. The system automatically scales the throughput between 10% of the maximum and your specified maximum RU/s based on the workload (for example, between 400 and 4,000 RU/s). This is an effective solution for unpredictable workloads, like an e-commerce orders collection.

- The cost per RU/s in auto scale mode is 1.5 times the standard (manual) cost. The formula for calculating cost is {provisioned RU} * 0.00012 * {number of hours}.

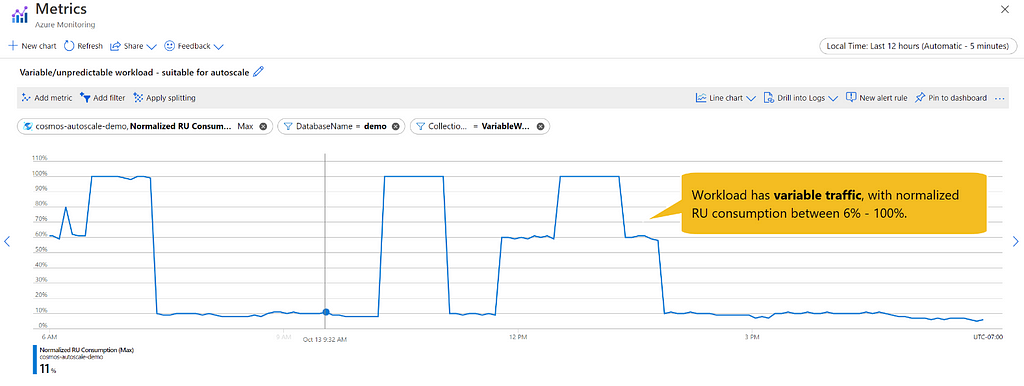

- Graph depicts variable traffic:

Photo Credit: https://learn.microsoft.com/en-us/azure/cosmos-db/media/how-to-choose-offer/variable-workload_use_autoscale.png

Note: In both Manual and Auto scale mode, billing is done on a per-hour basis, for the highest RU/s the system scaled to in the hour.

For more details on pricing models, visit Microsoft Azure website https://azure.microsoft.com/en-us/pricing/details/cosmos-db/autoscale-provisioned/
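To compare the two modes for a given traffic pattern, the cost formulas in this section can be applied hour by hour. The following is a minimal sketch, assuming the per-RU hourly rates quoted in this article (check the pricing page for current values); the function names and the sample day are hypothetical.

```python
from typing import Iterable

MANUAL_RATE = 0.00008     # per RU/s per hour, as quoted in this article
AUTOSCALE_RATE = 0.00012  # 1.5 times the manual rate, as quoted in this article


def manual_cost(provisioned_ru: float, hours: float) -> float:
    """Manual mode: pay for the provisioned RU/s every hour, used or not."""
    return provisioned_ru * MANUAL_RATE * hours


def autoscale_cost(hourly_peak_ru: Iterable[float]) -> float:
    """Auto scale mode: each hour is billed at the highest RU/s reached in that hour."""
    return sum(peak * AUTOSCALE_RATE for peak in hourly_peak_ru)


if __name__ == "__main__":
    # Hypothetical day: 4 busy hours at 10,000 RU/s and 20 quiet hours at 1,000 RU/s.
    day = [10_000] * 4 + [1_000] * 20
    print(round(manual_cost(10_000, 24), 2))
    print(round(autoscale_cost(day), 2))
```

With this spiky pattern auto scale is cheaper despite its higher rate; with a flat pattern near the maximum, manual mode wins.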

Programmatically Allocate RUs in Manual Mode

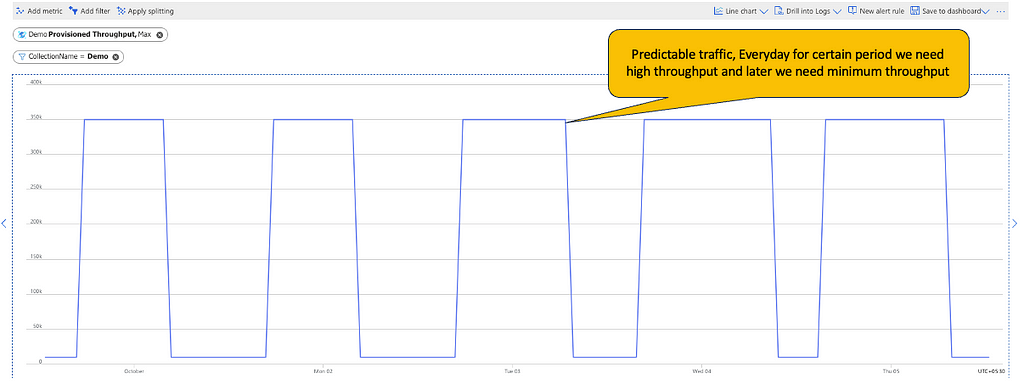

- If your workload is variable but predictable, consider manually provisioning RUs and adjusting them programmatically to save costs. For instance, if updates to a collection occur through a batch job at specific times, you can increase RUs at the start of the job and decrease them at the end programmatically.

- In the graph, you can observe that every day, for a certain period, the system requires high RUs (350K), while for the rest of the time only 10K RUs are needed. In such cases, consider programmatically increasing and decreasing the RUs as needed.

Note: Increasing RUs to the point of repartitioning can add time (1 to 4 hours) to the operation, potentially impacting your batch job. As an alternative, set the maximum RUs needed when creating the collection. During container creation, Azure Cosmos DB uses a heuristic formula based on the starting RU/s to calculate the number of physical partitions to start with, so the collection gets its full set of partitions up front. Later, decrease the RUs so that hourly charges are lower; Cosmos DB will not reduce the number of partitions. As long as later increases stay within the previously set range, Cosmos DB allocates the RUs immediately, without repartitioning, and the batch job can start inserting records.
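Raising and lowering RUs around a batch job can be sketched as a context manager. This is a minimal sketch, assuming the `customAction: "UpdateCollection"` extension command of Azure Cosmos DB for MongoDB and a pymongo-style database object with a `command` method; verify the command against the current Azure documentation. The helper names and the collection name are hypothetical.

```python
from contextlib import contextmanager


def update_throughput_command(collection: str, ru_per_second: int) -> dict:
    """Build the extension command that sets manual throughput on a collection."""
    # Assumption: Cosmos DB for MongoDB accepts this custom command shape.
    return {
        "customAction": "UpdateCollection",
        "collection": collection,
        "offerThroughput": ru_per_second,
    }


@contextmanager
def boosted_throughput(db, collection: str, high_ru: int, low_ru: int):
    """Raise RU/s for the duration of a block, then lower it even if the block fails."""
    db.command(update_throughput_command(collection, high_ru))
    try:
        yield
    finally:
        db.command(update_throughput_command(collection, low_ru))
```

A batch job would wrap its writes in `with boosted_throughput(db, "patient_details", 350_000, 10_000):` so that the lower setting is restored when the job ends.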

Optimising Document Size

- Reducing the size of documents can significantly lower costs, as operations on larger items are more expensive. Smaller documents save storage space and index space, and need fewer partitions and less provisioned throughput, all of which lower costs.

- We have listed a few efficient strategies for optimising document size below.

Upgrade to version 4.x

- Azure Cosmos DB API (Application Program Interface) for MongoDB introduced a new data compression algorithm in versions 4.0+ that saves up to 90% on RU and storage costs.

- For more details, refer to the official Azure documentation.

Apply Compression

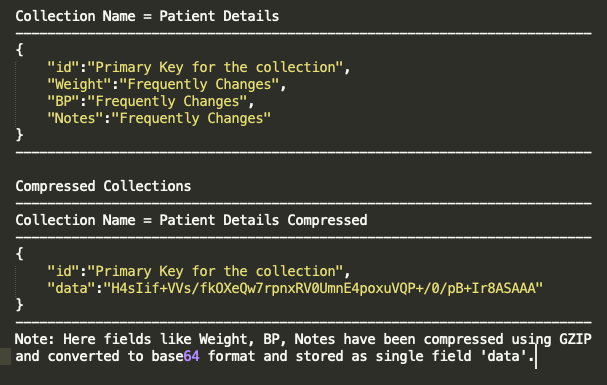

- Compress less frequently accessed fields before storing documents in MongoDB. We can leverage open-source compression formats such as GZIP, ZSTD and LZ4.

- This significantly reduces document size and helps save cost.

- Take the image below as an example: in the “Patient Details” collection, an index is applied to the “id” field for efficient look-up operations. To optimise storage space, the remaining fields can be kept in a compressed format. This can be achieved by adding custom compression logic in the business code before writing data to Cosmos DB, and corresponding decompression logic when reading the data back. This approach conserves storage while keeping data retrieval and processing straightforward.

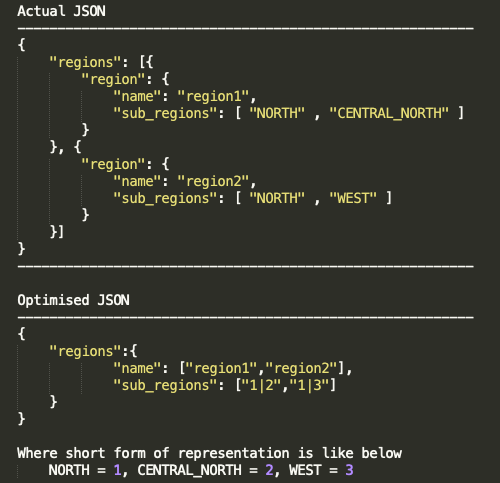
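The compress-on-write and decompress-on-read logic described above can be sketched with the Python standard library. This is a minimal sketch, assuming GZIP and JSON-serialisable fields; the `payload` field name, the function names and the sample document are hypothetical.

```python
import gzip
import json


def pack_document(doc: dict, indexed_fields: set) -> dict:
    """Keep indexed fields as-is and store the rest as one compressed blob."""
    kept = {k: v for k, v in doc.items() if k in indexed_fields}
    rest = {k: v for k, v in doc.items() if k not in indexed_fields}
    raw = json.dumps(rest, separators=(",", ":")).encode("utf-8")
    kept["payload"] = gzip.compress(raw)  # hypothetical field holding the blob
    return kept


def unpack_document(stored: dict) -> dict:
    """Reverse pack_document: decompress the blob and merge the fields back."""
    doc = {k: v for k, v in stored.items() if k != "payload"}
    doc.update(json.loads(gzip.decompress(stored["payload"]).decode("utf-8")))
    return doc


if __name__ == "__main__":
    patient = {"id": "p-1", "name": "Jane Doe", "notes": "stable " * 200}
    stored = pack_document(patient, indexed_fields={"id"})
    print(len(stored["payload"]), unpack_document(stored) == patient)
```

Compressed fields can no longer be queried or indexed, and very small documents may not shrink at all, so this fits fields that are read back whole.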

Value Mapping

- Group repetitive values and assign unique codes to reduce space consumption.

- Use 0/1 instead of TRUE/FALSE for all Boolean fields.

- Use short codes like 1/2/3 for Enum values. For example, if you have a Priority attribute, represent it as High (1)/Medium (2)/Low (3).

- Take the image below as an example of how we can optimise a JSON (JavaScript Object Notation) array representing regions.

- In the business logic that reads this document back from the database, we need logic to map the codes back to their original values. This additional logic could impact performance and is not advisable for time-critical applications.

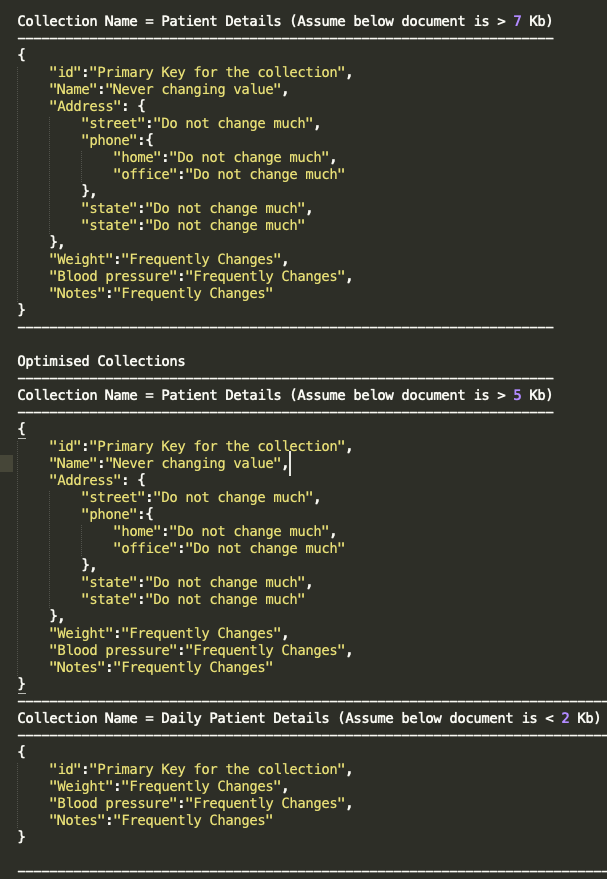
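The mapping and reverse-mapping logic can be sketched as a pair of functions. This is a minimal sketch using the Priority codes from the example above; the `priority` and `active` field names and the function names are hypothetical.

```python
# Codes from the Priority example above; the reverse map is derived from it.
PRIORITY_CODES = {"High": 1, "Medium": 2, "Low": 3}
PRIORITY_NAMES = {code: name for name, code in PRIORITY_CODES.items()}


def encode_values(doc: dict) -> dict:
    """Replace verbose values with short codes before writing."""
    out = dict(doc)
    out["priority"] = PRIORITY_CODES[doc["priority"]]
    out["active"] = 1 if doc["active"] else 0  # 0/1 instead of TRUE/FALSE
    return out


def decode_values(stored: dict) -> dict:
    """Map short codes back to their original values after reading."""
    out = dict(stored)
    out["priority"] = PRIORITY_NAMES[stored["priority"]]
    out["active"] = bool(stored["active"])
    return out


if __name__ == "__main__":
    original = {"id": "t-1", "priority": "High", "active": True}
    print(encode_values(original))
```

Keeping both maps in one module makes it harder for the write path and the read path to drift apart.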

Split collection

- In the NoSQL realm, it is recommended to store all related attributes within a single document. However, in certain situations, keeping cost efficiency in mind, it may be more beneficial to divide a larger collection into multiple smaller collections.

- If you have many items and large item sizes (for example, 7 KB), but only a specific set of keys in these items changes frequently, consider splitting your collection into two. Create a new collection containing the frequently updated attributes. We can use hash-based comparison to identify which fields are changing.

- A collection with a smaller item size and data that is updated frequently consumes far fewer RUs than a collection with a larger item size.

- For example, say you need to maintain a Patient Details collection whose items are larger than 7 KB, but only a few fields change frequently while the rest stay the same. In such cases, we can split it into two collections: the frequently changing fields go to one collection and all other fields go to the other. This way, we need fewer RUs to update the smaller collection.

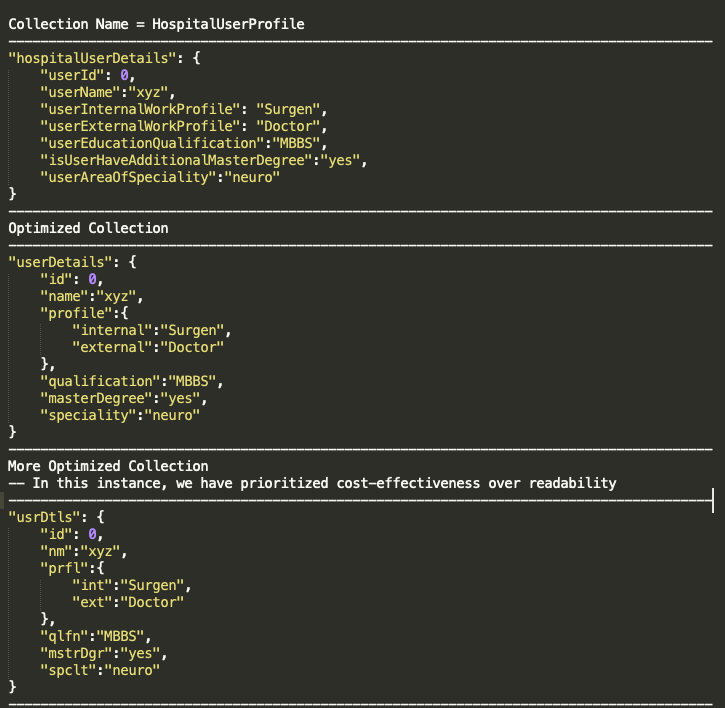
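The split and the hash-based comparison mentioned above can be sketched as follows. This is a minimal sketch, assuming JSON-serialisable documents and SHA-256 fingerprints; the function names, the `id` key and the sample fields are hypothetical.

```python
import hashlib
import json


def fingerprint(fields: dict) -> str:
    """Hash a canonical JSON form so that equal content gives an equal hash."""
    canonical = json.dumps(fields, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def split_document(doc: dict, volatile_keys: set, id_key: str = "id"):
    """Split one document into a stable part and a frequently updated part."""
    stable = {k: v for k, v in doc.items() if k not in volatile_keys}
    volatile = {k: v for k, v in doc.items() if k in volatile_keys or k == id_key}
    return stable, volatile


def changed_parts(old: dict, new: dict, volatile_keys: set) -> dict:
    """Report which part changed, so only that collection needs a write."""
    old_stable, old_volatile = split_document(old, volatile_keys)
    new_stable, new_volatile = split_document(new, volatile_keys)
    return {
        "stable": fingerprint(old_stable) != fingerprint(new_stable),
        "volatile": fingerprint(old_volatile) != fingerprint(new_volatile),
    }


if __name__ == "__main__":
    old = {"id": "p-1", "name": "Jane Doe", "lastVisit": "2024-01-10"}
    new = {"id": "p-1", "name": "Jane Doe", "lastVisit": "2024-02-02"}
    print(changed_parts(old, new, volatile_keys={"lastVisit"}))
```

Both parts keep the same `id`, so the application can join them back together when it needs the full record.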

Keep Shorter Keys

- Given that MongoDB is a JSON document-based database, it is essential to keep key sizes optimal.

- Keys should be readable, but avoid exceedingly long ones. Because keys are repeated in every document you store, long keys occupy more space and you end up paying extra.

- Refer to the attached image for an illustrative scenario.
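The effect of key length can be estimated with a quick measurement. This is a minimal sketch that uses serialised JSON length as a rough proxy for stored size (the actual stored size will differ); the key map, the function names and the sample document are hypothetical.

```python
import json

# Hypothetical mapping from long, descriptive keys to shorter ones.
KEY_MAP = {
    "patientFirstName": "fn",
    "patientLastName": "ln",
    "patientDateOfBirth": "dob",
}


def shorten_keys(doc: dict, key_map: dict = KEY_MAP) -> dict:
    """Rename top-level keys using key_map; unknown keys are left unchanged."""
    return {key_map.get(k, k): v for k, v in doc.items()}


def json_size(doc: dict) -> int:
    """Size in bytes of the compact JSON form, used here only as a proxy."""
    return len(json.dumps(doc, separators=(",", ":")).encode("utf-8"))


if __name__ == "__main__":
    doc = {
        "patientFirstName": "Jane",
        "patientLastName": "Doe",
        "patientDateOfBirth": "1990-01-01",
    }
    print(json_size(doc), json_size(shorten_keys(doc)))
```

The saving per document is small, but it is repeated for every document in the collection.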

Remove Unnecessary or Duplicate Fields

- If your collection is a legacy collection, look for fields that are no longer used in the current flow and remove them.

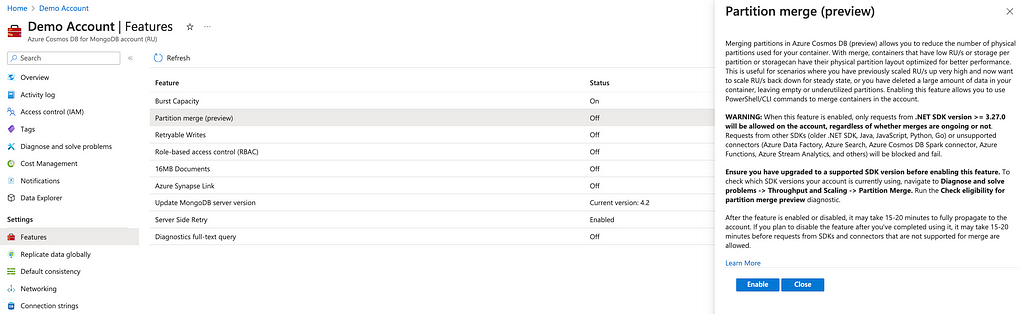

Optimise Physical Partition Usage

- Below are a few techniques that can help reduce the number of physical partitions already created.

Merge Partitions

- If you observe that your physical partitions hold significantly less data (35 GB), consider merging partitions. This feature is in preview.

- For more details on the merge steps, see the official Cosmos DB documentation: Merge partitions in Azure Cosmos DB (preview).

Recreate the Collection

- If your organisation does not support partition merge, a workaround is to create another collection and copy the data into it. The new collection will have fewer partitions, sized according to the current data.

- When you plan to migrate or ingest a large amount of data into Azure Cosmos DB, it’s recommended to set the RU/s of the container so that Azure Cosmos DB pre-provisions the physical partitions needed to store the total amount of data you plan to ingest upfront. Otherwise, during ingestion, Azure Cosmos DB may have to split partitions, which adds more time to the data ingestion.

- We can take advantage of the fact that during container creation, Azure Cosmos DB uses the heuristic formula of starting RU/s to calculate the number of physical partitions to start with.

- Calculate the number of physical partitions you’ll need using this formula: Number of physical partitions = Total data size in GB / Target data per physical partition in GB, rounded up to a whole number.

- Calculate the number of RU/s to start with for all partitions using this formula: Starting RU/s for all partitions = Number of physical partitions * Initial throughput per physical partition.

- Note that the initial throughput per physical partition is 10,000 RU/s for auto scale and 6,000 RU/s for manual.
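The two formulas above can be combined into one helper. This is a minimal sketch, assuming the per-partition initial throughput values quoted in this article; the function name and the sample data size and target are hypothetical.

```python
import math

# Initial throughput per physical partition, as quoted in this article.
INITIAL_RU_PER_PARTITION = {"autoscale": 10_000, "manual": 6_000}


def starting_throughput(total_data_gb: float, target_gb_per_partition: float, mode: str):
    """Return (number of physical partitions, starting RU/s) for a new container."""
    partitions = math.ceil(total_data_gb / target_gb_per_partition)
    return partitions, partitions * INITIAL_RU_PER_PARTITION[mode]


if __name__ == "__main__":
    # Hypothetical migration: 1,000 GB of data, aiming for about 30 GB per partition.
    print(starting_throughput(1_000, 30, "manual"))
    print(starting_throughput(1_000, 30, "autoscale"))
```

Choosing a target below the 50 GB limit leaves each partition room to grow before it has to split.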

Periodically Delete Stale Data

- If you have a requirement to delete data older than a given period, use the TTL (time-to-live) feature supported by Azure Cosmos DB.

- If you need to delete data periodically based on custom logic, do so as frequently as possible. The maximum size of a physical partition is 50 GB, and once a partition crosses this limit a new partition is created. Even if partition sizes decrease after data is deleted, the partitions are not merged automatically.

- For more details and optimisation techniques for physical partitions, refer to these Microsoft Azure documents: Scaling Best Practices, Partitioning Overview.
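Enabling TTL from application code can be sketched as a single index call. This is a minimal sketch, assuming a pymongo-style collection object and assuming that Azure Cosmos DB for MongoDB expects the TTL index on its `_ts` timestamp field; verify both against the current Azure documentation. The function name is hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def enable_ttl(collection, days: int):
    """Create a TTL index so that documents expire `days` after their last write."""
    # Assumption: Cosmos DB for MongoDB requires the TTL index to be on "_ts".
    return collection.create_index(
        [("_ts", 1)],
        expireAfterSeconds=days * SECONDS_PER_DAY,
    )
```

Calling `enable_ttl(db["patient_details"], days=30)` would ask the service to expire documents 30 days after they were last modified, without a separate clean-up job.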

Conclusion

In summary, gaining a solid understanding of Azure Cosmos DB’s billing structure and capacity management choices allows you to select the most cost-effective and resource-efficient methods. Furthermore, refining document size and physical partition utilisation can contribute to cost savings and enhanced performance.

Implementing the approaches and best practices outlined in this guide will enable you to optimise your use of Azure Cosmos DB while controlling expenses. Keep yourself informed about the latest developments and enhancements in the platform to continuously fine-tune your data management practices, ensuring optimal performance and cost efficiency. Wishing you success on your development journey!

Azure Cosmos for MongoDB Optimization Techniques was originally published in Walmart Global Tech Blog on Medium.

Article Link: Azure Cosmos for MongoDB Optimization Techniques | by Shriganesh bhat | Walmart Global Tech Blog | Feb, 2024 | Medium