Dongge Liu, Jonathan Metzman, Oliver Chang, Google Open Source Security Team

Since 2016, OSS-Fuzz has been at the forefront of automated vulnerability discovery for open source projects. Vulnerability discovery is an important part of keeping software supply chains secure, so our team is constantly working to improve OSS-Fuzz. For the last few months, we’ve tested whether we could boost OSS-Fuzz’s performance using Google’s Large Language Models (LLMs).

This blog post shares our experience of successfully applying the generative power of LLMs to improve the automated vulnerability detection technique known as fuzz testing (“fuzzing”). By using LLMs, we’re able to increase the code coverage for critical projects using our OSS-Fuzz service without manually writing additional code. Using LLMs is a promising new way to scale security improvements across the over 1,000 projects currently fuzzed by OSS-Fuzz and to remove barriers to future projects adopting fuzzing.

LLM-aided fuzzing

We created the OSS-Fuzz service to help open source developers find bugs in their code at scale—especially bugs that indicate security vulnerabilities. After more than six years of running OSS-Fuzz, we now support over 1,000 open source projects with continuous fuzzing, free of charge. As the Heartbleed vulnerability showed us, bugs that could be easily found with automated fuzzing can have devastating effects. For most open source developers, setting up their own fuzzing solution could cost time and resources. With OSS-Fuzz, developers are able to integrate their project for free, automated bug discovery at scale.

Since 2016, we’ve found, and verified fixes for, over 10,000 security vulnerabilities. We also believe that OSS-Fuzz could find even more bugs with increased code coverage. The fuzzing service covers only around 30% of an open source project’s code on average, meaning that a large portion of our users’ code remains untouched by fuzzing. Recent research suggests that the most effective way to increase coverage is to add fuzz targets to every project, which is one of the few parts of the fuzzing workflow that isn’t yet automated.

When an open source project onboards to OSS-Fuzz, maintainers make an initial time investment to integrate their projects into the infrastructure and then add fuzz targets. The fuzz targets are functions that use randomized input to test the targeted code. Writing fuzz targets is a project-specific and manual process that is similar to writing unit tests. The ongoing security benefits from fuzzing make this initial investment of time worth it for maintainers, but writing a comprehensive set of fuzz targets is a tough expectation for project maintainers, who are often volunteers.
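To make the idea of a fuzz target concrete, here is a minimal sketch in Python. It is an illustration only, not one of the targets from our experiment: the code under test is the standard library's `xml.dom.minidom` parser, and the names `fuzz_one_input` and `run_naive_fuzzer` are hypothetical. The driver simply feeds seeded random bytes to the target, whereas a real fuzzing engine would use coverage feedback to choose its inputs.

```python
import random
from xml.dom import minidom
from xml.parsers.expat import ExpatError


def fuzz_one_input(data: bytes) -> None:
    """Fuzz target (hypothetical name): pass one arbitrary input to the code under test."""
    try:
        document = minidom.parseString(data)
    except ExpatError:
        # Rejecting malformed XML is expected behaviour, not a bug.
        return
    # Exercise a little more code on the inputs that do parse.
    document.toxml()
    document.unlink()


def run_naive_fuzzer(iterations: int, seed: int = 0) -> int:
    """Illustrative driver: call the target with seeded random byte strings.

    Assumption: this stands in for a real fuzzing engine, which would mutate
    inputs based on coverage feedback instead of drawing them blindly.
    """
    rng = random.Random(seed)
    for _ in range(iterations):
        size = rng.randrange(0, 65)
        data = bytes(rng.randrange(256) for _ in range(size))
        fuzz_one_input(data)  # any uncaught exception here would be a finding
    return iterations
```

Calling `run_naive_fuzzer(1000)` runs the target on 1,000 random inputs. As with a unit test, the target has to know how to call the project's API and which failures are expected, which is why writing it is project-specific work.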

But what if LLMs could write additional fuzz targets for maintainers?

“Hey LLM, fuzz this project for me”

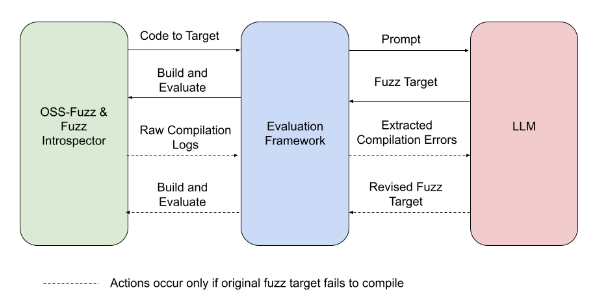

To discover whether an LLM could successfully write new fuzz targets, we built an evaluation framework that connects OSS-Fuzz to the LLM, conducts the experiment, and evaluates the results. The steps look like this:

1. OSS-Fuzz’s Fuzz Introspector tool identifies an under-fuzzed, high-potential portion of the sample project’s code and passes the code to the evaluation framework.

2. The evaluation framework creates a prompt that the LLM will use to write the new fuzz target. The prompt includes project-specific information.

3. The evaluation framework takes the fuzz target generated by the LLM and runs the new target.

4. The evaluation framework observes the run for any change in code coverage.

5. In the event that the fuzz target fails to compile, the evaluation framework prompts the LLM to write a revised fuzz target that addresses the compilation errors.
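The steps above can be sketched as a small control loop. This is a simplified illustration under stated assumptions, not the evaluation framework itself: every name here (`Candidate`, `BuildResult`, `run_experiment`, the prompt wording, and the `ask_llm`, `build`, and `measure_coverage` callables) is hypothetical, and the `uncovered_lines` score is a stand-in for whatever signal Fuzz Introspector actually uses.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Candidate:
    function_name: str
    source: str
    uncovered_lines: int  # hypothetical proxy for "under-fuzzed, high-potential"


@dataclass
class BuildResult:
    ok: bool
    errors: str = ""


@dataclass
class Outcome:
    target: Optional[str]   # fuzz target source, or None if it never compiled
    coverage_gain: float    # percentage points gained over the baseline
    build_attempts: int


def build_prompt(project: str, candidate: Candidate,
                 previous_target: Optional[str] = None,
                 build_errors: Optional[str] = None) -> str:
    """Assemble a prompt with project-specific information (wording is illustrative)."""
    prompt = (f"Project: {project}\n"
              f"Write a fuzz target for {candidate.function_name}:\n"
              f"{candidate.source}\n")
    if previous_target is not None:
        # Revision round: show the failed target and the compiler output.
        prompt += (f"Previous target:\n{previous_target}\n"
                   f"Build errors:\n{build_errors}\n"
                   "Revise the target to fix these errors.\n")
    return prompt


def run_experiment(project: str,
                   candidates: List[Candidate],
                   ask_llm: Callable[[str], str],
                   build: Callable[[str], BuildResult],
                   measure_coverage: Callable[[str], float],
                   baseline_coverage: float,
                   max_fix_rounds: int = 3) -> Outcome:
    # Step 1: pick the least-covered candidate.
    candidate = max(candidates, key=lambda c: c.uncovered_lines)
    # Step 2: prompt the LLM for a new fuzz target.
    target = ask_llm(build_prompt(project, candidate))
    attempts = 0
    for round_index in range(max_fix_rounds + 1):
        attempts += 1
        result = build(target)
        if result.ok:
            # Steps 3 and 4: run the target and observe the coverage change.
            gain = measure_coverage(target) - baseline_coverage
            return Outcome(target, gain, attempts)
        if round_index < max_fix_rounds:
            # Step 5: feed the compilation errors back for a revised target.
            target = ask_llm(build_prompt(project, candidate, target, result.errors))
    return Outcome(None, 0.0, attempts)
```

Passing the model, the build step, and the coverage measurement in as callables keeps the loop itself independent of any particular LLM or build system. The cap on revision rounds (`max_fix_rounds`) is also an assumption, added so that a target that never compiles cannot loop forever.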

Experiment overview: The experiment pictured above is a fully automated process, from identifying target code to evaluating the change in code coverage.

At first, the code generated from our prompts wouldn’t compile. However, after several rounds of prompt engineering and trying out the new fuzz targets, we saw projects gain between 1.5% and 31% code coverage. One of our sample projects, tinyxml2, went from 38% line coverage to 69% without any intervention from our team. With the LLM-generated fuzz targets added, tinyxml2 now has the majority of its code covered.

Example fuzz targets for tinyxml2: Each of the five fuzz targets shown is associated with a different part of the code and adds to the overall coverage improvement.

Replicating tinyxml2’s results manually would have required at least a day’s worth of work, which would mean several years of work to manually cover all OSS-Fuzz projects. Given tinyxml2’s promising results, we want to bring this approach into production and extend similar, automatic coverage to other OSS-Fuzz projects.

Additionally, in the OpenSSL project, our LLM was able to automatically generate a working target that rediscovered CVE-2022-3602, which was in an area of code that previously did not have fuzzing coverage. Though this is not a new vulnerability, it suggests that as code coverage increases, we will find more vulnerabilities that are currently missed by fuzzing.

Learn more about our results through our example prompts and outputs or through our experiment report.

The goal: fully automated fuzzing

In the next few months, we’ll open source our evaluation framework to allow researchers to test their own automatic fuzz target generation. We’ll continue to optimize our use of LLMs for fuzz target generation through more model fine-tuning, prompt engineering, and improvements to our infrastructure. We’re also collaborating closely with the Assured OSS team on this research in order to secure even more open source software used by Google Cloud customers.

Our longer term goals include:

Adding LLM fuzz target generation as a fully integrated feature in OSS-Fuzz, with continuous generation of new targets for OSS-Fuzz projects and zero manual involvement.

Extending support from C/C++ projects to additional language ecosystems, like Python and Java.

Automating the process of onboarding a project into OSS-Fuzz to eliminate any need to write even initial fuzz targets.

We’re working towards a future of personalized vulnerability detection with little manual effort from developers. With the addition of LLM-generated fuzz targets, OSS-Fuzz can help improve open source security for everyone.

Article Link: Google Online Security Blog: AI-Powered Fuzzing: Breaking the Bug Hunting Barrier