AI-powered conversational assistants have come to play a significant role in our daily lives. In 2019, at Walmart Labs, we built our own AI shopping assistant, which aims to help customers shop for their daily groceries on an end-to-end platform. For more information, see our previous posts. Most chatbot systems handle single-turn or open-domain conversations well. Domain-specific multi-turn conversational systems, however, face a wider range of challenges. Such chatbots are called Multi-turn Task-oriented Dialogue Systems [4,8].

The retail domain is well suited to task-oriented chatbots and assistants (for some examples of retail chatbots, see here). Retail uses many terms that rarely appear in public corpora or, when they do appear, carry a different meaning. For instance, for the query “buy chips ahoy”, the assistant needs to understand that “chips ahoy” is a cookie brand. This makes it difficult to use off-the-shelf pre-trained NLP models in such domains without re-training or fine-tuning them on domain-specific corpora.

Our shopping assistant is heavily search-oriented, meaning that users usually invoke it to buy or re-order a product by issuing a search query and then spending the next few rounds filtering the results. We therefore continue with an overview of the significant differences between product search on the web or in an app and product search in conversational assistants.

Product Search in Conversational Assistants

In traditional web/app-based search systems, users are presented with many items across multiple pages. They also have checkbox filters and/or range sliders that help them interact with the items and exclude some of the results. On a conversational assistant or chatbot, users cannot scroll through a long list of product search results, so the top few results must be relevant (as measured by Precision at k, or P@k), and the top result (P@1) matters most.
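To make the metric concrete, here is a minimal sketch of P@k for a single query. It assumes we already know which product IDs the user would accept; the function name and the IDs are illustrative, not part of our production system.

```python
def precision_at_k(ranked_ids: list[str], relevant_ids: set[str], k: int) -> float:
    """Return the fraction of the top-k ranked results that are relevant."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    top_k = ranked_ids[:k]
    hits = sum(1 for item_id in top_k if item_id in relevant_ids)
    # Divide by k (not len(top_k)) so a short result list cannot inflate the score.
    return hits / k


# Hypothetical product IDs for one query; the user would accept either milk.
ranked = ["milk-organic-half-gal", "juice-orange", "milk-organic-gal"]
relevant = {"milk-organic-half-gal", "milk-organic-gal"}
print(precision_at_k(ranked, relevant, 1))  # 1.0
print(precision_at_k(ranked, relevant, 3))  # 0.6666666666666666
```

On a voice or chat surface the assistant typically reads out only the first result, so P@1 is the number that decides whether a turn succeeds.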

In a conversational system, much of the information is conveyed in natural language, typed or spoken, and sometimes combined with other modalities such as images. The system therefore has to rely on the user’s verbal feedback instead of checkboxes or range sliders. For example, in a traditional system, the user can easily exclude a brand visually or by unchecking it in the list of brands on the left side of the page. In a conversational system, the user has to say or type an utterance like “buy it from a different brand” to get a similar result.

Price is one of the attributes users interact with most, and web and app search engines offer range sliders for setting it. In a conversational system, users have to say something like “I want something less than $20”. Moreover, as soon as the user touches a slider, the system knows which attribute is being filtered (price, here). In a conversational system, it is entirely the system’s responsibility to figure out that price is the aspect (facet) of the product the user is trying to set, even though the user never names it. In other words, the system must also infer which facet the user is modifying. For instance, for the query “I want a larger one”, the inferred facet is size.
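As a minimal illustration of inferring the facet from the wording alone, the sketch below maps price phrases to a constraint, assuming the dollar amount is the cue that reveals the facet. The patterns and the function name are hypothetical; this is not how our production model works.

```python
import re
from typing import Optional, Tuple

# Hypothetical phrase patterns; the "$" amount is what reveals the facet (price).
_PRICE_PATTERNS = [
    (re.compile(r"\b(?:less than|under|below|cheaper than)\s*\$(\d+(?:\.\d+)?)"), "<"),
    (re.compile(r"\b(?:more than|over|above)\s*\$(\d+(?:\.\d+)?)"), ">"),
]


def parse_price_constraint(utterance: str) -> Optional[Tuple[str, str, float]]:
    """Return ("price", operator, amount) if the utterance bounds the price, else None."""
    text = utterance.lower()
    for pattern, op in _PRICE_PATTERNS:
        match = pattern.search(text)
        if match:
            return ("price", op, float(match.group(1)))
    return None


print(parse_price_constraint("I want something less than $20"))  # ('price', '<', 20.0)
print(parse_price_constraint("I want a larger one"))  # None
```

The second call shows the limit of surface rules: “a larger one” carries no explicit cue for size, so inferring that facet needs the learned tagging and relation steps described later.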

In traditional systems, users type or say the exact attributes they prefer, whereas in a conversational system, users often correct the assistant first and then mention the attribute(s) they prefer. Consider the query “Not half a gallon, get it in a 1-gallon jug, please”, in which the user is still talking about the previous product. Traditional retail search systems are not contextual: they do not remember the last product the user searched for. In a conversational system, however, maintaining context across a multi-turn conversation while refining the search results is expected, and it is both essential and challenging. If a user starts a conversation by searching for “diapers” and spends the next few rounds refining the results by updating attributes such as brand and size, the context should be updated each time without losing the preferences the user has already expressed. This challenge has created a rich area of research in Natural Language Processing (NLP) called “Dialogue State Tracking” [1,3,6,7,8].
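A toy version of this kind of state tracking can be sketched as a dictionary of facet values that each turn overwrites selectively. The `SearchState` class and its reset rule (a new product type starts a fresh search) are assumptions made for illustration; real dialogue state trackers such as those in [1,3,7] are learned models.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class SearchState:
    """Hypothetical search context carried across the turns of one conversation."""

    product: Optional[str] = None
    attributes: Dict[str, str] = field(default_factory=dict)

    def update(self, product: Optional[str] = None, **attributes: str) -> None:
        # Assumption: asking for a different product type starts a fresh search.
        if product is not None and product != self.product:
            self.product = product
            self.attributes = {}
        # Overwrite only the facets mentioned in this turn; keep all the others.
        self.attributes.update(attributes)


state = SearchState()
state.update(product="diapers")  # "search for diapers"
state.update(brand="Pampers")    # "only Pampers, please"
state.update(size="4")           # "size 4"
state.update(size="5")           # "actually, make it size 5"
print(state)  # SearchState(product='diapers', attributes={'brand': 'Pampers', 'size': '5'})
```

The key property is that changing the size in the last turn leaves the brand untouched, which is exactly the “without losing the user’s preferences” requirement.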

Given these differences and challenges, the question is: how can we enable our system to provide a seamless shopping experience for our customers? Since many queries are about searching for a product, building a seamless shopping experience comes down to offering a powerful, human-like search filtering system on a conversational platform. We call this a Conversational Search Refinement System, and it is the topic of the next section.

What is Conversational Search Refinement?

In this article, we present our approach to understanding and addressing Search Refinement (SR) queries: the utterances our users employ to modify the previous search results. The two examples below illustrate parts of this problem in product search:

Example 1 (Success).

User > Search for size 4 Pampers diapers.

Assistant > I can get you Pampers Baby-Dry Extra Protection Diapers, Size 4, 92 Count for $26.70. Add a box to your cart?

User > I want a smaller one.

Assistant > I can also get you Pampers Swaddlers Diapers, Soft and Absorbent, Size 4, 66 Ct for $24.94. Add 1 to your cart?

User > Perfect, add it to my cart.

This is a successful SR scenario: in the second round, the system kept the “Pampers” brand and size 4 while offering a smaller pack (66 count instead of 92), and the user accepted it.

Example 2 (Failure).

User > I want organic whole milk.

Assistant > You can get Horizon Organic Whole DHA Omega-3 Milk, Half Gallon for $4.46. Add 1 to your cart?

User > I want it in a larger jug from a different brand.

Assistant > I can get you Organic Valley Ultra Pasteurized Organic Whole Milk, 128 oz for $6.97. Add 1 to your cart?

User > Buy it from Great Value this time.

Assistant > How about Great Value Whole Milk, 1 Gallon, 128 Fl. Oz for $3.23?

User > I asked for organic!

This SR scenario is only partly successful. After the first search query, the conversation goes well through the assistant’s second response, but in the third round the offered product is no longer organic: the “organic” preference was dropped when the brand changed, and the user complains about it.

Now that we have given some examples of SR scenarios, we present our approach, starting with some definitions. We define the following categories of search refinement (SR) queries.

Definitions

Explicit Refinements: The filter attributes are explicitly mentioned. For instance, see the following (unrelated) queries:

- I want 2% fat Great Value milk [previous query: “search for milk”].

- I want it in the red color [previous query: “search for men’s t-shirts”].

- Buy it from Great Value [previous query: “add toilet paper”].

Implicit Refinements: The filter attributes must be inferred from the previously suggested item(s), the user’s query, and the context:

- Can you make it to a larger size, please?

- I do not like this brand.

- I want a different color.

- Show me the cheapest one.

- Show me the earliest available time slot for delivery.
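The practical difference between the two categories is where the filter value comes from. The minimal sketch below, with a hypothetical descriptor lexicon and function name, takes the value from the query for explicit refinements and from the previously suggested item for implicit ones.

```python
from typing import Any, Dict, Optional, Tuple

# Hypothetical descriptor lexicon for implicit refinements.
_DESCRIPTOR_TO_OP = {"different": "!=", "larger": ">", "smaller": "<", "cheaper": "<"}


def resolve_refinement(
    facet: str,
    previous_item: Dict[str, Any],
    descriptor: Optional[str] = None,
    explicit_value: Any = None,
) -> Tuple[str, str, Any]:
    """Turn one refinement into a concrete (facet, operator, value) constraint."""
    if explicit_value is not None:
        # Explicit refinement: the value comes straight from the user's query.
        return (facet, "==", explicit_value)
    if descriptor is None:
        raise ValueError("an implicit refinement needs a descriptor")
    # Implicit refinement: the reference value is read from the previously suggested item.
    return (facet, _DESCRIPTOR_TO_OP[descriptor], previous_item[facet])


previous = {"brand": "Pampers", "size": 4, "price": 26.70}
print(resolve_refinement("brand", previous, explicit_value="Great Value"))  # ('brand', '==', 'Great Value')
print(resolve_refinement("brand", previous, descriptor="different"))  # ('brand', '!=', 'Pampers')
print(resolve_refinement("size", previous, descriptor="larger"))  # ('size', '>', 4)
```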

Our Approach

There is a rich literature on Conversational Recommender Systems; see [5] for a survey. Among the many related works, we particularly highlight [2]; we deployed our SR system several months before that paper was published. In [2], the authors perform exact-value search refinement on brand, color, discount, material, price, and review rating. Moreover, some previous work (e.g., [2]) categorizes refinement queries into pre-designed intents such as ‘EXACT, EXCLUDE, RANGE, GREATER, LOWER, OTHER’ and then uses the predicted intent to determine the filtering action.
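To illustrate the intent-to-filter idea in general terms, here is a minimal sketch in which each fine-grained intent selects one comparison predicate. This is our own illustration of the technique, not the implementation from [2]; the catalog is a toy built from the milk example above.

```python
import operator
from typing import Any, Callable, Dict, List

# Illustrative mapping from fine-grained intents to filter predicates.
# Each predicate is called as predicate(product_value, query_value).
_INTENT_PREDICATES: Dict[str, Callable[[Any, Any], bool]] = {
    "EXACT": operator.eq,
    "EXCLUDE": operator.ne,
    "GREATER": operator.gt,
    "LOWER": operator.lt,
    "RANGE": lambda value, bounds: bounds[0] <= value <= bounds[1],
}


def filter_by_intent(
    products: List[Dict[str, Any]], intent: str, facet: str, value: Any
) -> List[Dict[str, Any]]:
    """Keep the products whose `facet` satisfies the predicate for `intent`."""
    predicate = _INTENT_PREDICATES[intent]  # "OTHER" has no filter, so it raises KeyError
    return [p for p in products if facet in p and predicate(p[facet], value)]


# Toy catalog built from the milk example above.
milk = [
    {"brand": "Horizon Organic", "organic": True, "size_oz": 64, "price": 4.46},
    {"brand": "Organic Valley", "organic": True, "size_oz": 128, "price": 6.97},
    {"brand": "Great Value", "organic": False, "size_oz": 128, "price": 3.23},
]
cheap = filter_by_intent(milk, "LOWER", "price", 5.00)
print([p["brand"] for p in cheap])  # ['Horizon Organic', 'Great Value']
```

With one predicted label per query, a request such as “I want to buy a larger one from great value” would need both GREATER (size) and EXACT (brand), which a single intent cannot express.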

Our approach uses a single intent to cover EXACT, RANGE, GREATER, LOWER, and part of EXCLUDE (e.g., “different brand”), plus a second intent for negations, which cover the remaining exclusions (e.g., “I do not want this brand”). Our solution first understands the user’s refinement query and then transforms it into catalog-oriented filters similar to those prevalent on web-based search engines. We achieve this in the following steps.

Attribute Extraction (Step 1): We first identify the product, its attributes, any facets the user is trying to modify, and how the user wants to modify them. To do this, we use a 2-layer Transformer model with a BERT architecture for a sequence tagging task using the BILOU tagging scheme. For example, for the query “I want a larger size”, the facet is “size” and “larger” is the way the user modifies it (i.e., increasing).

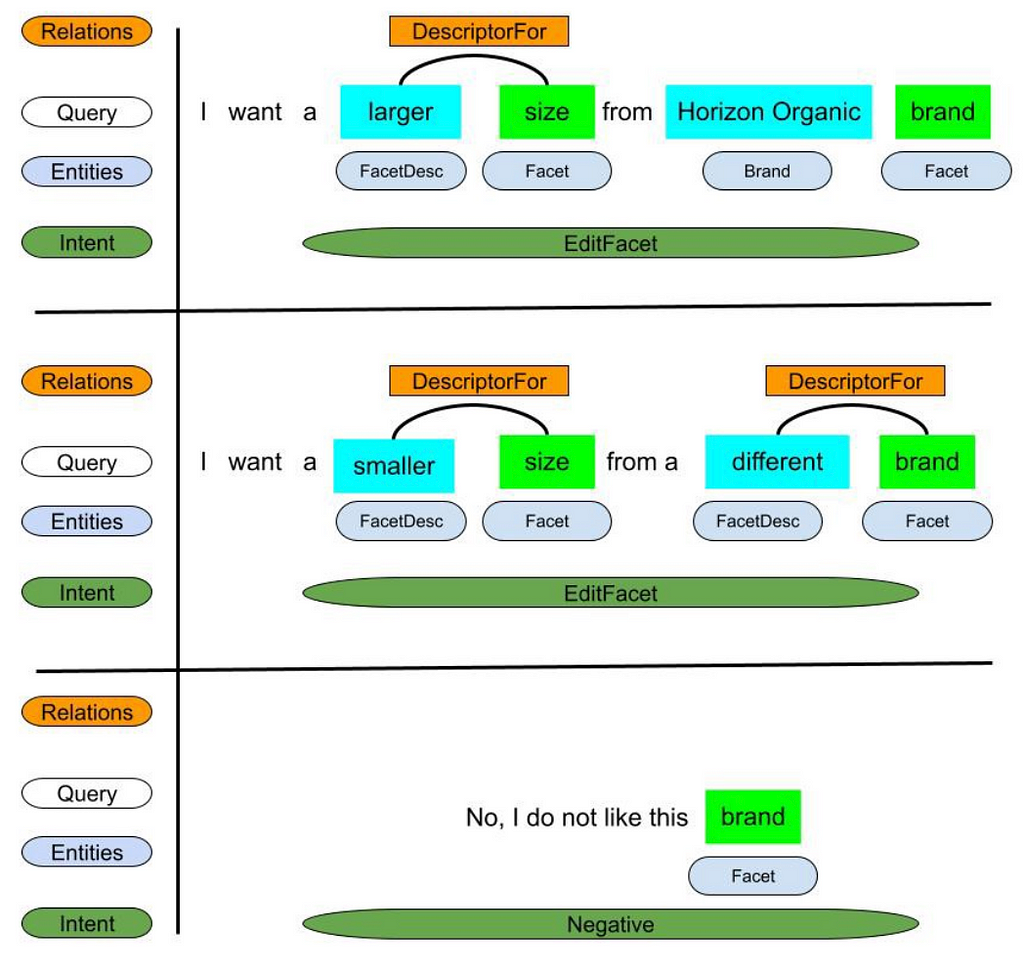
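Once a tagger has assigned one BILOU tag per token (Beginning, Inside, Last, Outside, Unit), the tags still have to be grouped into entity spans. The sketch below shows that decoding step on hand-written tags; apart from ‘FacetDesc’, the entity type names (‘Brand’, ‘Facet’) are hypothetical stand-ins for our label set.

```python
from typing import List, Tuple


def bilou_to_spans(tokens: List[str], tags: List[str]) -> List[Tuple[str, str]]:
    """Group BILOU-tagged tokens into (entity_type, text) spans."""
    spans: List[Tuple[str, str]] = []
    current_type, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        prefix, _, entity_type = tag.partition("-")
        if prefix == "U":  # Unit: a single-token entity
            spans.append((entity_type, token))
            current_type, current_tokens = None, []
        elif prefix == "B":  # Beginning of a multi-token entity
            current_type, current_tokens = entity_type, [token]
        elif prefix in ("I", "L") and current_type == entity_type:  # Inside / Last
            current_tokens.append(token)
            if prefix == "L":
                spans.append((entity_type, " ".join(current_tokens)))
                current_type, current_tokens = None, []
        else:  # Outside ("O") or an ill-formed sequence: drop any unfinished span
            current_type, current_tokens = None, []
    return spans


tokens = ["buy", "chips", "ahoy", "in", "a", "larger", "size"]
tags = ["O", "B-Brand", "L-Brand", "O", "O", "U-FacetDesc", "U-Facet"]
print(bilou_to_spans(tokens, tags))
# [('Brand', 'chips ahoy'), ('FacetDesc', 'larger'), ('Facet', 'size')]
```

The explicit L and U tags make span boundaries unambiguous, which is convenient for multi-word brands such as “chips ahoy”.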

Relation Extraction (Step 2): Next, we need to derive the appropriate actions (increase, decrease, exclude, etc.) for the facets. We first capture the facet descriptors with a new entity tag, ‘FacetDesc’ (see Figure 1 for more examples). We then introduce a Relation Extraction (RE) module that disambiguates between entities and connects each facet descriptor to its corresponding facet.

The figure below shows how we extract facets and facet descriptors along with other entities. The arcs indicate which facet descriptor belongs to which facet.

Figure 1. How relations among entities help better understand the queries.

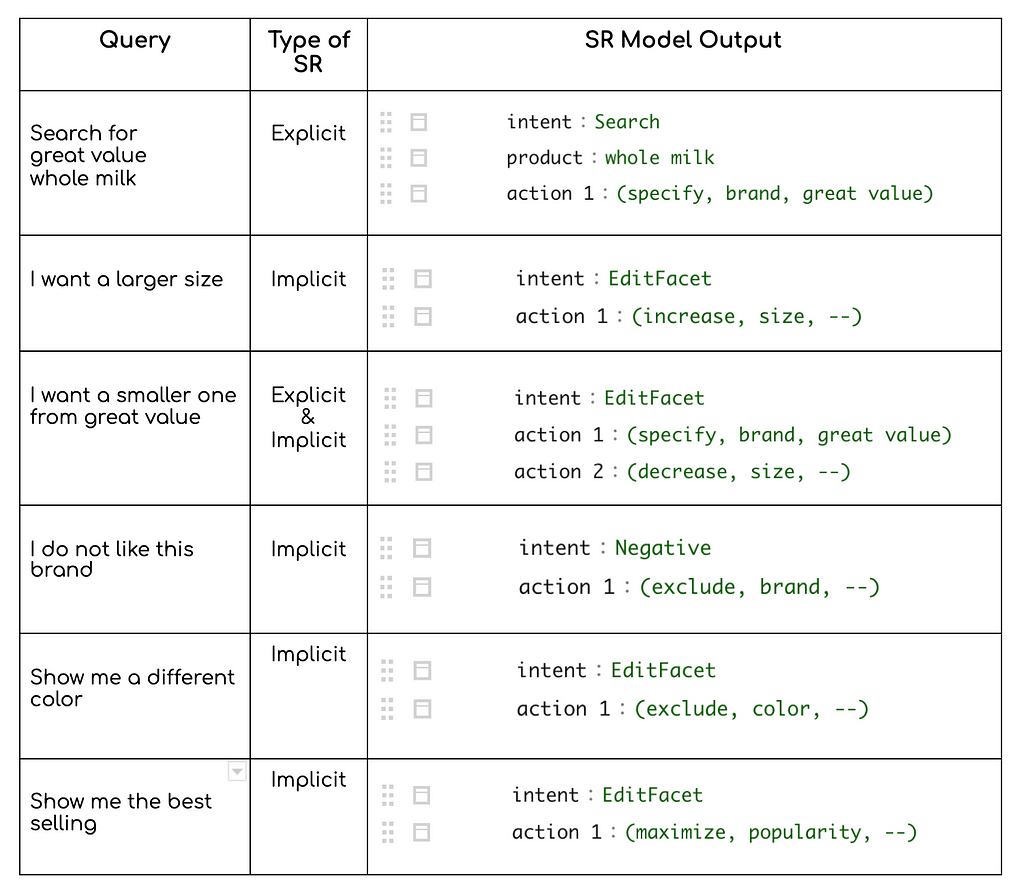
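Our RE module in Step 2 is learned. For intuition only, the sketch below is a much simpler rule-based baseline, swapped in for illustration: it links each ‘FacetDesc’ to the nearest stated facet and falls back to a hypothetical lexicon when the query names no facet at all.

```python
from typing import List, Tuple

# Hypothetical fallback for descriptors whose facet is implied rather than stated.
_IMPLIED_FACET = {"larger": "size", "smaller": "size", "cheaper": "price", "cheapest": "price"}


def link_descriptors(entities: List[Tuple[str, str, int]]) -> List[Tuple[str, str]]:
    """Pair each FacetDesc entity with a facet; entities are (type, text, token_index)."""
    facets = [(text, idx) for etype, text, idx in entities if etype == "Facet"]
    links: List[Tuple[str, str]] = []
    for etype, text, idx in entities:
        if etype != "FacetDesc":
            continue
        if facets:
            # Baseline heuristic: attach the descriptor to the nearest stated facet.
            facet_text, _ = min(facets, key=lambda f: abs(f[1] - idx))
            links.append((text, facet_text))
        elif text in _IMPLIED_FACET:
            # No facet in the query ("a larger one"): fall back to the implied facet.
            links.append((text, _IMPLIED_FACET[text]))
    return links


# "I want a larger size and a different brand"
query = [("FacetDesc", "larger", 3), ("Facet", "size", 4),
         ("FacetDesc", "different", 7), ("Facet", "brand", 8)]
print(link_descriptors(query))  # [('larger', 'size'), ('different', 'brand')]
print(link_descriptors([("FacetDesc", "larger", 3)]))  # [('larger', 'size')]
```

Token distance is a brittle signal once phrasing varies, which is one reason to learn the relations instead of hand-coding them.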

Action Inference (Step 3): Finally, we use the resulting intent, entities, facets, and relations to infer the next actions for the Dialogue Manager (DM). The inferred action(s) appear in the last column of Figure 2 below. Each action is a triple (Action-Type, Facet, Value), where “Value” applies only to specific actions (“Specify”).

Figure 2. Inferring actions from relations, intents, and entities

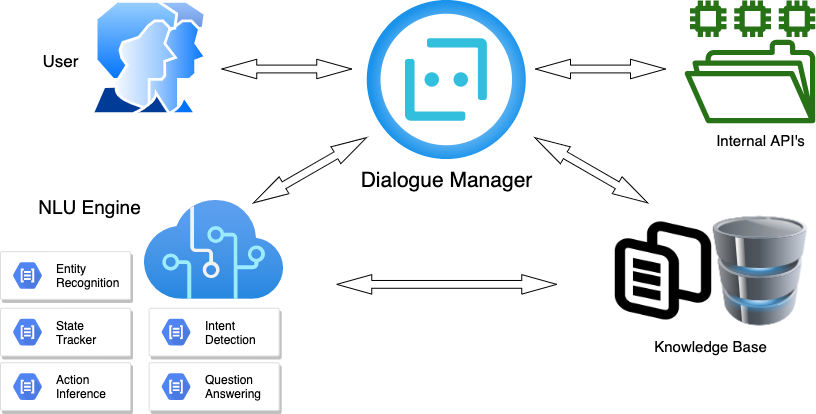
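A minimal sketch of Step 3 follows. It assumes the two super-intents ‘EditFacet’ and ‘Negative’ and emits (Action-Type, Facet, Value) triples in which only “Specify” carries a value. The names “Increase”, “Decrease”, and “Exclude” are hypothetical labels taken from the actions listed in Step 2, and this sketch does not handle negated values such as “not horizon organic”.

```python
from typing import List, Optional, Tuple

Action = Tuple[str, str, Optional[str]]  # (Action-Type, Facet, Value)

# Hypothetical descriptor-to-action lexicon.
_DESCRIPTOR_ACTION = {"larger": "Increase", "smaller": "Decrease", "different": "Exclude"}


def infer_actions(
    intent: str,
    facets: List[str],
    links: List[Tuple[str, str]],
    values: List[Tuple[str, str]],
) -> List[Action]:
    """Combine intent, stated facets, (descriptor, facet) links and (facet, value) entities."""
    if intent == "Negative":
        # e.g. "I do not like this brand": exclude the previous item's value of each facet.
        return [("Exclude", facet, None) for facet in facets]
    actions: List[Action] = []
    if intent == "EditFacet":
        for descriptor, facet in links:
            action_type = _DESCRIPTOR_ACTION.get(descriptor)
            if action_type is not None:
                actions.append((action_type, facet, None))
        for facet, value in values:
            # Only "Specify" carries a value, e.g. "buy it from Great Value".
            actions.append(("Specify", facet, value))
    return actions


# "I want to buy a larger one from great value"
print(infer_actions("EditFacet", [], [("larger", "size")], [("brand", "Great Value")]))
# [('Increase', 'size', None), ('Specify', 'brand', 'Great Value')]
print(infer_actions("Negative", ["brand"], [], []))
# [('Exclude', 'brand', None)]
```

Returning a list of triples, rather than a single label, is what lets one utterance change two facets at once.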

For the end-to-end experience, Figure 3 depicts how our conversational assistant processes an input query. It also includes the Knowledge Base (KB) integration, intended for future uses such as providing suggestions (for a query like “I want to see more options”) and Question Answering.

Figure 3. The flow for our conversational shopping assistant.

The novel aspects of our approach are:

- We designed our ML system to support many of the attributes mentioned in [2], as well as size. The ‘size’ attribute is challenging for products that contain several individual items in one package (water bottles, soda, toilet paper, etc.), since “size” can refer to each item or to the package as a whole.

- In contrast to some previous work, we use two super-intents, ‘EditFacet’ and ‘Negative’. We determine the next flow using an array of (Intent, Facet, Action) triples, where the Action is derived from our Relation Extraction (RE) module. We also considered assigning fine-grained intents, as in [2], to capture the filtering action, but found that this does not generalize, because a single query can carry several filtering intents: “I want to buy a larger one from great value” (EXACT and GREATER intents in the notation of [2]) or “not horizon organic, buy it from Great Value this time” (EXCLUDE and EXACT intents). The RE module covers many of these edge cases.

- Using a single-intent approach along with our new set of entities, we can fully support comparative and superlative search refinement queries, such as “show me the bestselling option” or “show me a smaller one”.
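One way to see why comparatives and superlatives need different handling: a comparative (“a smaller one”) becomes a constraint relative to the previous item, while a superlative (“the cheapest one”) ranks all candidates and keeps the top item, which matters given the emphasis on P@1. The sketch below covers the superlative case under assumed names; the lexicon, the `units_sold` field, and the catalog are hypothetical.

```python
from typing import Any, Dict, List

# Hypothetical lexicon: superlative -> (facet to rank by, True if higher is better).
_SUPERLATIVES = {
    "cheapest": ("price", False),
    "bestselling": ("units_sold", True),
    "largest": ("size_oz", True),
    "smallest": ("size_oz", False),
}


def top_by_superlative(products: List[Dict[str, Any]], descriptor: str) -> Dict[str, Any]:
    """Rank every candidate on the superlative's facet and return the single best item."""
    facet, higher_is_better = _SUPERLATIVES[descriptor]
    candidates = [p for p in products if facet in p]
    if not candidates:
        raise ValueError(f"no candidate has the facet {facet!r}")
    pick = max if higher_is_better else min
    # On ties, max/min return the first candidate encountered.
    return pick(candidates, key=lambda p: p[facet])


milk = [
    {"brand": "Horizon Organic", "size_oz": 64, "price": 4.46},
    {"brand": "Organic Valley", "size_oz": 128, "price": 6.97},
    {"brand": "Great Value", "size_oz": 128, "price": 3.23},
]
print(top_by_superlative(milk, "cheapest")["brand"])  # Great Value
```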

Experiments

We launched this Search Refinement (SR) model for our shopping assistant (on Siri Shortcuts and Google Assistant) in late 2020. It supports many product attributes, such as brand and size. Figure 4 lists several SR queries and the model’s outputs for them, slightly modified to remove details of the model’s API.

Figure 4. SR Model outputs for a batch of queries

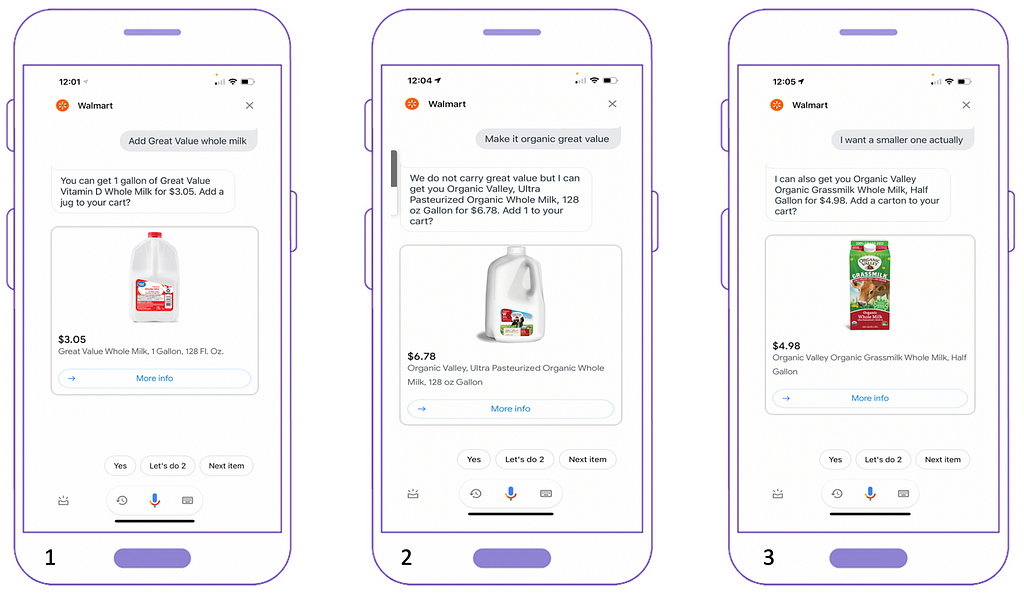

We also present an end-to-end shopping scenario in pictures 1–3 below, in which the user refines the search results for “milk”.

Figure 5. A real-world successful experience through our SR system

Conclusion and Future Work

In this work, we enabled our system to handle implicit search refinement queries and improved its understanding of explicit search refinement queries. We did this by:

- Introducing new entities to capture the facets of the products and the way the user wants to edit them.

- Adding a Relation Extraction (RE) module that helps infer the next action using the intent, entities, and relations as inputs.

Since we deployed the search refinement module to our production environment, user engagement and satisfaction have increased thanks to a significant improvement in our P@1. In the next phases, we plan to add more semantics to our models to capture other scenarios, such as queries carrying several values of the same entity type.

References

[1] Chen, Lu, et al. “Schema-guided multi-domain dialogue state tracking with graph attention neural networks.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34. No. 05. 2020.

[2] Filice, Simone, et al. “VoiSeR: A New Benchmark for Voice-Based Search Refinement.” Proceedings of the Conference of the European Chapter of the Association for Computational Linguistics (EACL). 2021.

[3] Gao, Shuyang, et al. “From machine reading comprehension to dialogue state tracking: Bridging the gap.” arXiv preprint arXiv:2004.05827 (2020).

[4] Ham, Donghoon, et al. “End-to-end neural pipeline for goal-oriented dialogue systems using GPT-2.” Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics. 2020, pp. 583–592.

[5] Jannach, Dietmar, et al. “A survey on conversational recommender systems.” arXiv preprint arXiv:2004.00646 (2020).

[6] Pang, Wei, and Xiaojie Wang. “Visual dialogue state tracking for question generation.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 34. No. 07. 2020.

[7] Ren, Liliang, et al. “Towards Universal Dialogue State Tracking.” Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing. 2018.

[8] Yan, Zhao, et al. “Building task-oriented dialogue systems for online shopping.” Proceedings of the AAAI Conference on Artificial Intelligence. Vol. 31. No. 1. 2017.

Understanding Conversational Search Refinement Queries in Walmart Shopping Assistant was originally published in Walmart Global Tech Blog on Medium.