Symantec EDR Internals — Criterion

Over the past few weeks I’ve been researching Symantec EDR and the technologies it uses to generate incidents and events, in the hope of better understanding the detection process and the mechanisms behind it.

Seeing a file classified as malicious or an incident being declared is all well and good. But without context for the analyst looking at these incidents, it quickly becomes a game of blind trust. In my opinion, if we’re writing detections or responding to incidents, we need all the context and information we can get.

If we take a look at the Symantec EDR documentation, we find the following page, which lists all the technologies that SEDR uses to detect and generate events.

The technologies that detect Symantec EDR events

The list contains about 10 technologies, ranging from signature-based and reputation-based detection to heuristics and machine-learning approaches.

In this new series of blog posts, I’ll be taking a look at the inner workings of these technologies and providing some context and meaning behind the detections.

Criterion

The first technology that I was interested in was the machine learning engine “Criterion”. Here is a short description from the documentation:

Criterion detects files in the gray region between known good and known bad. In this range, Criterion detects the files that are more likely to be malicious.

We also have this description of the “Suspicious file classifier” technology used by SEDR:

Symantec EDR uses a file classifier to analyze files with unknown dispositions. The file classifier breakdowns files by their attributes to determine if the file is good or malicious, based on decision trees that are trained with millions of files.

This technology uses machine-learning instead of signatures or sandbox detonation.

From this we can deduce that the engine extracts some attributes or features from a file and then passes them to a classifier that’ll spit out a result.

From within EDR itself, when a file is analyzed or classified by Criterion, we get one of the following dispositions:

- Good: The file is in the allow list or Symantec’s reputation service indicates that the file is good.

- Suspicious: The file’s health is suspicious based on Symantec’s machine-learning algorithms that identify the files that are likely to be malicious. However, there is no known detection against it. Your organization should pay particular attention to and thoroughly analyze suspicious files.

With this in mind let’s dive in.

Start Of The Journey

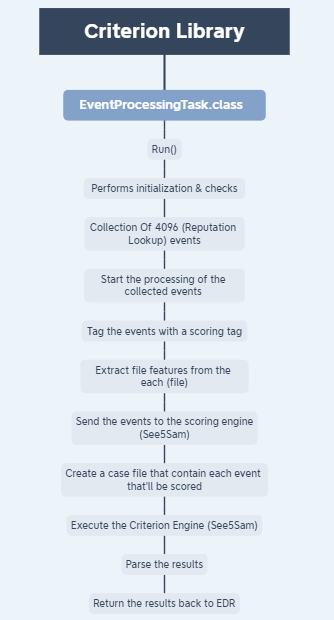

Looking through the system, we find that the Criterion engine logic lives in a library called “criterion.jar”. Within it is a class named “EventProcessingTask”, which implements a “run()” function responsible for collecting all the information Criterion needs to execute properly. Below is the general gist of how this function works.

Note: The “run()” function only starts the process. Multiple function calls are made within this library to perform the necessary tasks. This diagram is only an abstract representation of the general flow of how things work.

General Flow

This library performs a lot of tasks. The “initialization” process alone contains multiple function calls. Below are some of the actions performed at the start:

- Creating and initializing the lists that’ll contain the “suspiciousEvents” and “unscoredEvents”.

- Collecting time range (Start and End time).

- Creating Lists for any blacklist or whitelist exclusions you have within the EDR. (These will be used to filter in / out events)

- Verifying that variables and functions are not returning “NULL” results

- …Etc.

Once these steps are finished, a function call is made to collect all the “4096” (Reputation Lookup) events in a specific time range, which are then sent to Criterion for processing.
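To make the collection step concrete, here is a minimal Python sketch of filtering events by type and time range. It is not the decompiled Java from “criterion.jar”: the `Event` class, its field names, the `collect_reputation_events` helper and the inclusive time bounds are all assumptions made for illustration. Only the event type ID 4096 comes from the platform.

```python
from dataclasses import dataclass
from datetime import datetime

REPUTATION_LOOKUP = 4096  # event type ID for "Reputation Lookup"


@dataclass
class Event:
    """Hypothetical, simplified event record (field names are assumptions)."""
    type_id: int
    timestamp: datetime
    file_name: str


def collect_reputation_events(events, start, end):
    """Return the 4096 events whose timestamp lies in [start, end].

    Inclusive bounds are an assumption; the real query may differ.
    """
    return [
        e for e in events
        if e.type_id == REPUTATION_LOOKUP and start <= e.timestamp <= end
    ]
```

Anything outside the window, or of another event type, never reaches the scoring logic in this sketch.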

Determining The Scoring Decision

The “Criterion” engine will then take those events and tag them with one of two categories:

Scored

A “scored” event is tagged as such depending on the value of its “disposition” and “confidence” and can have one of the following states:

- DEFAULT_SCORE (0)

- SCORE_USING_ENGINE

Unscored

An “unscored” event is tagged as such if one of the following conditions is met:

- The event is equal to “NULL”.

- The file extension is not in the list of the acceptable classifier extensions: “exe”, “cpl”, “dll”, “msi”, “scr”, “sys”.

- The file is located under one of the following paths: “CSIDL_PROGRAM_FILES” or “CSIDL_PROGRAM_FILESX86”.

- The file doesn’t have a “confidence” or a “disposition”.

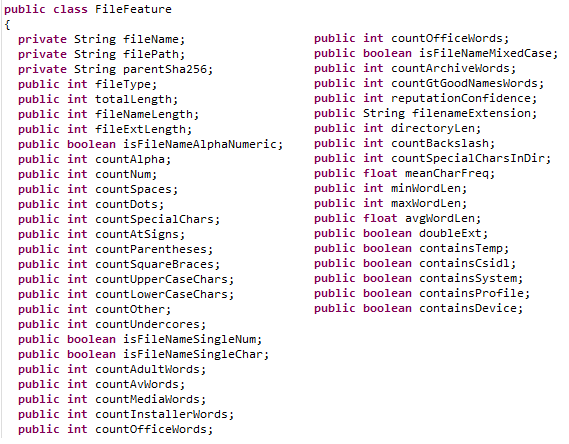
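The “unscored” conditions listed above can be sketched as a single predicate. This is a Python illustration under stated assumptions, not the engine’s actual code: `ReputationEvent` and `is_unscored` are hypothetical names, the path check is assumed to be a case-insensitive prefix match, and an event missing either “confidence” or “disposition” is assumed to be unscored. How a scored event is split between “DEFAULT_SCORE” and “SCORE_USING_ENGINE” depends on the disposition and confidence values, which are not detailed here, so that part is left out.

```python
import ntpath
from dataclasses import dataclass
from typing import Optional

# Extensions the classifier accepts, as listed above.
CLASSIFIER_EXTENSIONS = {"exe", "cpl", "dll", "msi", "scr", "sys"}
# Locations that are skipped, as listed above.
EXCLUDED_PATH_PREFIXES = ("CSIDL_PROGRAM_FILES", "CSIDL_PROGRAM_FILESX86")


@dataclass
class ReputationEvent:
    """Hypothetical, simplified view of a 4096 event."""
    file_path: str
    disposition: Optional[str] = None
    confidence: Optional[int] = None


def is_unscored(event: Optional[ReputationEvent]) -> bool:
    """Return True when the event would be tagged as unscored."""
    if event is None:
        return True
    # ntpath handles backslash-separated paths on any operating system.
    ext = ntpath.splitext(event.file_path)[1].lstrip(".").lower()
    if ext not in CLASSIFIER_EXTENSIONS:
        return True
    # Assumption: "in one of the following paths" means a prefix match.
    if event.file_path.upper().startswith(EXCLUDED_PATH_PREFIXES):
        return True
    # Assumption: missing either value is enough to skip scoring.
    if event.disposition is None or event.confidence is None:
        return True
    return False
```

For example, `is_unscored(ReputationEvent("CSIDL_PROFILE\\downloads\\a.exe", "unknown", 5))` returns `False`, so that event would move on to the scoring decision.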

Populating File Features

Once the files / events are tagged as scored or unscored, the next thing to look at is the file “features”. Since the engine bases its classification on file attributes and features, it needs to collect this information before sending it to the ML classifier.

For this, a list of file features is created that’ll hold different information about each file’s attributes. Events tagged “SCORE_USING_ENGINE” are the ones this collection applies to.

Attributes & Features Collection

List of “File Features” and “Attributes” to collect

For every event (“SCORE_USING_ENGINE” or “DEFAULT_SCORE”) the following attributes will be collected:

- File Name

- File Path

- SHA256 Hash

- Reputation Confidence

For events tagged “SCORE_USING_ENGINE”, additional information is collected by a function called “populateParsedFeatures”, which checks and collects the following:

- File Type — It’s extracted by checking whether the filename contains one of the following strings: “.exe”, “.src”, “.dll”, “.msi”, “.msp”, “.php”, “.js”, “.html”, “.ocx”, “.zip”, “.tmp”, “.rb”, “.rar”

- Total Length — Calculated from the length of the file.

- Filename Length — Calculated from the beginning of the file name until the last dot, which marks the beginning of the extension.

- File Extension Length — Calculated from the last “dot” until the end of the filename.

- Count Lower Case Characters — Calculated by looping through the file name and checking if any character is between the range of letters from “a” to “z” (lower case). If it is then the counter is incremented by one.

- Count Upper Case Characters — Calculated by looping through the file name and checking if any character is between the range of letters from “A” to “Z” (upper case). If it is then the counter is incremented by one.

- Count Alpha — Calculated by looping through the file name and checking if any character is between the range of letters from “a” to “z” (lower or upper case). If it is then the counter is incremented by one.

- Count Numbers — Calculated by looping through the file name and checking if any character is between the range of numbers from “1” to “9”. If it is then the counter is incremented by one.

- Count Spaces — If there is a space in the filename, then the counter is incremented by one.

- Count Dots — If the filename contains a dot, then the counter is incremented by one.

- Count At Signs — If the file name contains the “@” symbol, then the counter is incremented by one.

- Count Special Characters — If the filename contains one of the following (“%”, “-”, “^”, “#”, “$”, “*”, “!”) symbols then the counter is incremented by one.

- Count Parentheses — If the file name contains parentheses, then the counter is incremented by one.

- Count Square Braces — If the file name contains square braces (“[“, “]”) then the counter is incremented by one.

- Count Underscores — If the file name contains an underscore (“_”) then the counter is incremented by one.

- Count Other — If none of the aforementioned conditions are matched then the other counter is incremented by one.

- isFileNameAlphaNumeric — Boolean that is set to “True” if “Count Alpha” and “Count Numbers” are both greater than zero

- isFileNameSingleNum — Boolean that is set to “True” if “Filename Length” and “Count Numbers” are both equal to one

- isFileNameSingleChar — Boolean that is set to “True” if “Filename Length” and “Count Alpha” are both equal to one

- isFileNameMixedCase — Boolean that is set to “True” if “Count Upper Case Characters” and “Count Lower Case Characters” are both bigger than zero

- countAdultWords — A counter that starts from zero and adds one each time one of the following words is found: “sex”, “xxx”, “hot”, “porn”, “adult”, “baby”, “babe”, “sweet”, “slut”, “fuck”, “dog”, “horse”

- countAvWords — A counter that starts from zero and adds one each time one of the following words is found: “scan”, “virus”, “threat”, “risk”, “malware”, “malicious”, “av”, “protect”, “safe”, “save”, “secure”, “security”, “anti”

- countMediaWords — A counter that starts from zero and adds one each time one of the following words is found: “avi”, “video”, “fli”, “movie”, “wav”, “media”, “midia”, “vivo”, “mpeg”, “vid”

- countInstallerWords — A counter that starts from zero and adds one each time one of the following words is found: “install”, “setup”

- countOfficeWords — A counter that starts from zero and adds one each time one of the following words is found: “.doc”, “.xls”, “.ppt”, “.pdf”

- countArchiveWords — A counter that starts from zero and adds one each time one of the following words is found: “rar”, “zip”, “lzh”

- countGtGoodNamesWords — A counter that starts from zero and adds one each time one of the following words is found: “java”, “jsched”, “lsass”, “svchost”, “csrss”, “acrord”

- doubleExt — A boolean that is set to true if the filename matches a regular expression that checks for double extensions.

- directoryLen — Variable containing the length of the directory

- countSpecialCharsInDir — A counter that starts from zero and increments each time the name of the directory contains one of the following characters: “%”, “-”, “^”, “#”, “$”, “*”, “!”

- countBackslash — A counter that starts from zero and increments each time the name of the directory contains a backslash

- meanCharFreq — This is equal to “directoryLen” / “countOfUniqueCharacters”

- maxWordLen — Calculated based on the longest word in the directory name

- minWordLen — Calculated based on the shortest word in the directory name

- avgWordLen

- containsTemp — Boolean set to “True” if the directory contains the word “temp”

- containsCsidl — Boolean set to “True” if the directory contains the word “csidl”

- containsSystem — Boolean set to “True” if the directory contains the word “system”

- containsProfile — Boolean set to “True” if the directory contains the word “csidl_profile”

- containsDevice — Boolean set to “True” if the directory contains the word “device”
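As an illustration of the file-name counters and the derived booleans in the list above, here is a short Python sketch. It is an approximation under assumptions rather than the logic of “populateParsedFeatures” itself: the `filename_features` name and the dictionary keys are hypothetical, the counters are assumed to be incremented once per matching character, “Total Length” is assumed to be the length of the file name, and the extension length is assumed to include the dot.

```python
SPECIAL_CHARS = set("%-^#$*!")

COUNTER_NAMES = [
    "lower", "upper", "alpha", "numbers", "spaces", "dots", "at_signs",
    "special", "parentheses", "square_braces", "underscores", "other",
]


def filename_features(file_name: str) -> dict:
    """Compute character counters and derived booleans for a file name."""
    stem, dot, _ext = file_name.rpartition(".")
    if not dot:  # no dot at all: the whole name is the stem
        stem = file_name
    counts = dict.fromkeys(COUNTER_NAMES, 0)
    for c in file_name:
        if "a" <= c <= "z":
            counts["lower"] += 1
            counts["alpha"] += 1
        elif "A" <= c <= "Z":
            counts["upper"] += 1
            counts["alpha"] += 1
        elif "1" <= c <= "9":  # range as described in the list: "1" to "9"
            counts["numbers"] += 1
        elif c == " ":
            counts["spaces"] += 1
        elif c == ".":
            counts["dots"] += 1
        elif c == "@":
            counts["at_signs"] += 1
        elif c in SPECIAL_CHARS:
            counts["special"] += 1
        elif c in "()":
            counts["parentheses"] += 1
        elif c in "[]":
            counts["square_braces"] += 1
        elif c == "_":
            counts["underscores"] += 1
        else:
            counts["other"] += 1
    filename_len = len(stem)
    return {
        "total_length": len(file_name),  # assumption: length of the name
        "filename_length": filename_len,
        "extension_length": len(file_name) - filename_len,  # includes the dot
        **counts,
        "is_alphanumeric": counts["alpha"] > 0 and counts["numbers"] > 0,
        "is_single_num": filename_len == 1 and counts["numbers"] == 1,
        "is_single_char": filename_len == 1 and counts["alpha"] == 1,
        "is_mixed_case": counts["upper"] > 0 and counts["lower"] > 0,
    }
```

Calling `filename_features("Setup_v2 (1).exe")` gives, among others, a total length of 16, a filename length of 12, two digits, two parentheses and `is_mixed_case` set to `True`.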

All of this sums up to about 45 “features” being calculated and assigned.
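The directory-level features in the list above (from “directoryLen” through “containsDevice”) can be sketched in the same spirit. Again, this is a hedged Python approximation: the `directory_features` helper is hypothetical, a “word” is assumed to be a backslash-separated path component, and the “contains” checks are assumed to be case-insensitive substring matches.

```python
DIR_SPECIAL_CHARS = set("%-^#$*!")


def directory_features(directory: str) -> dict:
    """Compute length, character and keyword features for a directory."""
    lowered = directory.lower()
    # Assumption: words are the non-empty components between backslashes.
    word_lens = [len(w) for w in directory.split("\\") if w] or [0]
    unique_chars = len(set(directory))
    return {
        "directoryLen": len(directory),
        "countSpecialCharsInDir": sum(c in DIR_SPECIAL_CHARS for c in directory),
        "countBackslash": directory.count("\\"),
        # directoryLen / countOfUniqueCharacters, guarded against empty input
        "meanCharFreq": len(directory) / unique_chars if unique_chars else 0.0,
        "maxWordLen": max(word_lens),
        "minWordLen": min(word_lens),
        "avgWordLen": sum(word_lens) / len(word_lens),
        "containsTemp": "temp" in lowered,
        "containsCsidl": "csidl" in lowered,
        "containsSystem": "system" in lowered,
        "containsProfile": "csidl_profile" in lowered,
        "containsDevice": "device" in lowered,
    }
```

For a directory such as `CSIDL_PROFILE\appdata\local\temp`, this yields a length of 32, three backslashes, and `containsTemp`, `containsCsidl` and `containsProfile` all set to `True`.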

Calculating The Final Score

Once all this is done, it’s time to send the events to the ML Scoring Engine. For this, a case file is created with the following format:

Sample_X,0,0,0,0,5,1,0,0,0,0,0,0,0,0,3,0,5,0,0,2,0,0,0,500,0,0,0,0,0,30,1,0,1,11,11,29,0,1,1,0,0,8,8,0,0,0,0,0,0,?

Each line in this case file represents a “suspicious” file and its features.
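Judging from the sample line, a case line is a sample identifier, the feature values in a fixed order, and a trailing “?” where the class would go, all separated by commas. The Python sketch below builds such a line. The `to_case_line` helper is hypothetical, the encoding of booleans as 0 or 1 is an assumption (the sample only shows numbers), and the order of the features is not reproduced here.

```python
def to_case_line(sample_id, feature_values):
    """Join an identifier, feature values and a trailing '?' with commas."""
    fields = [sample_id]
    for value in feature_values:
        if isinstance(value, bool):
            # Assumption: booleans are written as 1 / 0.
            fields.append("1" if value else "0")
        else:
            fields.append(str(value))
    fields.append("?")  # class left unknown, to be filled in by the classifier
    return ",".join(fields)
```

For instance, `to_case_line("Sample_X", [0, 5, True, 500])` produces `Sample_X,0,5,1,500,?`.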

This gets sent to the “See5Sam” engine, the ML classifier that takes the case file as input and outputs a score between 0 and 1 indicating whether the file is “Good” or “Bad”.

Result of the “See5Sam” engine

The results will then get parsed and sent back to SEDR in the form of Event ID “4099: Suspicious File Detection”.

File classified as “Suspicious” by “Criterion”

Conclusion & Future Research

In this first part we looked at the general flow of how the “Criterion” library / engine works: the conditions required for files to be considered for scoring, and the attributes and features needed to help classify these files. Defenders can use this information to gain a deeper understanding of EID 4099 and to write more informed detections within SEDR using that EID.

As for future research, I’m currently investigating other detection technologies and internal features within SEDR, and I’ll share more details when it’s possible :D

I hope you enjoyed reading this blog. As always, if you have any comments or feedback, please send them my way on Twitter @nas_bench.

Article Link: Symantec EDR Internals — Criterion | by Nasreddine Bencherchali | Medium