What is multi-cloud deployment?

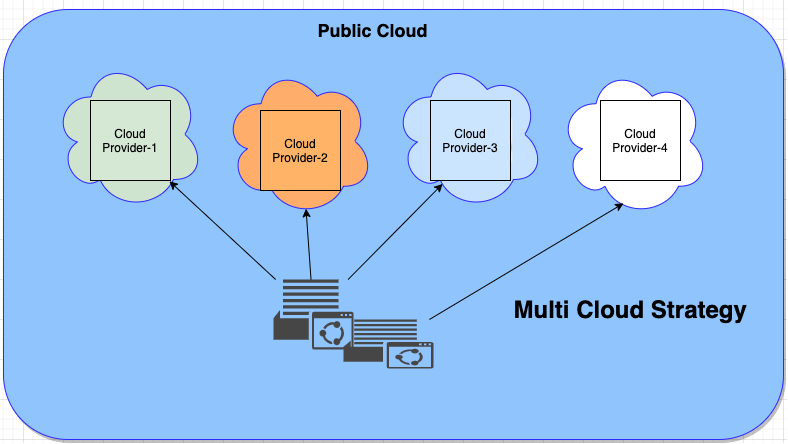

Multi-cloud has become a preferred strategy in modern cloud computing. It lets enterprises choose cloud services from two or more cloud providers, and most organizations already use more than one public cloud provider across their use cases. The providers on the market today offer a wide variety of computing resources and services. A multi-cloud approach is often linked to achieving the following capabilities:

- The right cloud for the right service

- Outage avoidance

- Vendor lock-in avoidance

- Best-of-breed approach

Some facts

Enterprises are spending heavily on cloud services. The bigger question is whether they are spending wisely and optimally. The real worries begin when the monthly bills start to come in. Cost optimization and low latency become top priorities for enterprises that want to realize the benefits of multi-cloud, and a 360° view of resource monitoring plays a critical role in multi-cloud environments.

Research firm Gartner expects global spending on public cloud infrastructure (IaaS) alone to total $39.5 billion in 2019. Meanwhile, IDC predicts that public cloud spending (including IaaS, SaaS, and PaaS) will approach $500 billion in 2023.

However, cloud cost management vendor ParkMyCloud, using Gartner’s $39.5 billion IaaS figure as a starting point, estimates that companies will waste more than $14 billion on unnecessary public cloud infrastructure spending this year — and the firm thinks that figure is “probably low.”

Let us see what we can do to avoid contributing to that $14 billion figure.

Optimize multi-cloud cost

- Avoid over-provisioning cloud resources: Elasticity is one of the biggest advantages of cloud computing, because infrastructure can grow and shrink with application demand and load. Development teams often over-provision resources to ensure performance and scalability. The best practice is to set up auto-scaling on cloud resources wherever possible.

- Split the data between hot and cold storage layers: In the era of cloud computing and the Internet of Things, systems and applications are bound to deal with extensive volumes of data. Divide that data between a hot and a cold storage layer. Keep frequently accessed data in hot storage such as SQL/NoSQL databases, and move infrequently accessed data to cold storage such as file and object storage. As a rule of thumb, Tier 1 databases cost about 100 times more than file storage.

- Monitor and clean up resources: It is essential to monitor cloud resource utilization and configuration on a regular basis, clean up unused resources, and remove any storage volumes that are no longer in use. We also need automatic monitoring and alerting processes in place so that suitable action can be taken on time. Without tools that help them analyze data at a granular level, cloud operations or infrastructure management teams may be unable to avoid resource wastage. Proper monitoring helps identify the major cost drivers and supports informed recommendations for right-sizing the cloud resources in use.

- Create a common layer: A single operations layer across multiple clouds can provide visibility into and control over the entire infrastructure, which helps in managing security better and gaining insight into resource utilization. Putting the right governance in place can lower resource wastage and bring new efficiencies to existing assets by increasing their utilization.

- Segregate prod and non-prod bills: Cloud spending on non-prod environments is often significant, yet it frequently goes untracked. It is better to segregate the bills and analyze the cost by resource group. Avoid storing large data sets and backups in lower environments, and provision only the bare minimum of resources when the load is small. Use DevOps automation for resource provisioning and cleanup.

- Right cloud service for the right job: We need to choose, across the cloud providers, the service that best fits the job. The field has a lot of competitors, so it is essential to understand the use case and its specific technical requirements. Various cloud providers offer more or less similar managed services for the same job, so evaluate each equivalent service in terms of cost, security, compliance, governance, and so on.
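As a rough illustration of the hot/cold split described above, the sketch below assigns each data set to a tier based on how recently it was accessed and compares the monthly bill with and without tiering. The `DataSet` class, the 90-day threshold, and the per-GB prices are all hypothetical. Only the roughly 100x price ratio comes from the text, so treat the output as an order-of-magnitude estimate rather than a real quote.

```python
from dataclasses import dataclass
from datetime import date

# Placeholder prices per GB per month. Only the ~100x ratio comes from the
# article; the absolute numbers are assumptions, not real provider rates.
HOT_PRICE_PER_GB = 2.00
COLD_PRICE_PER_GB = HOT_PRICE_PER_GB / 100


@dataclass
class DataSet:
    name: str
    size_gb: float
    last_accessed: date


def choose_tier(ds: DataSet, today: date, cold_after_days: int = 90) -> str:
    """Return 'cold' when the data set has not been accessed recently."""
    age_days = (today - ds.last_accessed).days
    return "cold" if age_days > cold_after_days else "hot"


def monthly_cost(datasets, today: date, tiered: bool = True) -> float:
    """Sum the monthly storage cost, with or without the hot/cold split."""
    total = 0.0
    for ds in datasets:
        tier = choose_tier(ds, today) if tiered else "hot"
        price = HOT_PRICE_PER_GB if tier == "hot" else COLD_PRICE_PER_GB
        total += ds.size_gb * price
    return total


if __name__ == "__main__":
    today = date(2024, 6, 1)
    datasets = [
        DataSet("orders", 500, date(2024, 5, 30)),     # recently read
        DataSet("old-logs", 4000, date(2023, 11, 1)),  # untouched for months
    ]
    print("all hot:", monthly_cost(datasets, today, tiered=False))
    print("tiered: ", monthly_cost(datasets, today, tiered=True))
```

In practice, the managed lifecycle policies that object stores offer usually do this job. The point of the sketch is only that the savings depend on how much of the data is truly cold.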

Achieve low latency in a multi-cloud

Moving data around within the same cloud infrastructure is faster than sending it across the internet. Network connectivity, through network devices, is the only way for the various clouds to communicate with one another. This means that network bandwidth and latency need to be taken into consideration when working with multi-cloud architectures.
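To get a feel for how bandwidth and latency interact, here is a back-of-the-envelope estimate of cross-cloud transfer time. It is a deliberately simple model of one round trip plus the time to push the bytes through the link. The function name, parameters, and sample numbers are assumptions for illustration. It ignores protocol overhead, TCP ramp-up, and the CPU time spent compressing.

```python
def transfer_seconds(size_gb: float, bandwidth_mbps: float, rtt_ms: float,
                     compression_ratio: float = 1.0) -> float:
    """Rough time to move a payload between clouds.

    compression_ratio is original size / compressed size (1.0 = uncompressed).
    """
    if bandwidth_mbps <= 0 or compression_ratio <= 0:
        raise ValueError("bandwidth and compression ratio must be positive")
    # 1 GB = 8000 megabits (decimal units), shrunk by the compression ratio.
    size_megabits = size_gb * 8000 / compression_ratio
    return rtt_ms / 1000 + size_megabits / bandwidth_mbps


if __name__ == "__main__":
    # Hypothetical link: 1 Gbps with a 40 ms round trip between two clouds.
    print("raw:       ", transfer_seconds(50, 1000, 40))
    print("compressed:", transfer_seconds(50, 1000, 40, compression_ratio=4))
```

For bulk transfers the bandwidth term dominates, while for many small requests the round-trip term dominates, so it matters which kind of traffic crosses the cloud boundary.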

- Avoid storing large data sets in one cloud and processing them in another: While one cloud storage service might cost less, the savings are not worth the potential performance issues. When data does have to move to another cloud, try to compress it before sending.

- Region affinity: Most of the big public cloud providers have data centers in many regions across the globe. When an enterprise wants to deploy its workloads in multiple clouds, region affinity plays a crucial role. To minimize latency, select the region of the second cloud that is closest to your current deployment region. This is a good idea even if you do not need inter-cloud connectivity immediately, because you may need it in the future.

- Deploy workloads close to the users: Move deployed applications closer to the users, and make sure the nodes within an application are close to each other as well. It is not advisable to host your application in Cloud A and its database in Cloud B. Always evaluate the source location of the ingress traffic and the availability of cloud provider data centers. With a multi-cloud infrastructure, the data center closest to the end users can serve the requested data with minimum latency. This capability is especially useful for global organizations that need to serve corporate data across multiple geographical locations while maintaining a unified end-user experience.

- Proximity-based routing: When you configure global server load balancing (GSLB) for proximity, client requests are forwarded to the closest data center. The main benefit of the proximity-based GSLB method is faster response times, because the closest available data center is selected. Such a deployment is critical for applications that require fast access to large volumes of data. Most public cloud providers offer these routing capabilities.
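The idea behind region affinity and proximity-based routing can be sketched as "pick the region with the smallest distance to the caller". The example below uses great-circle (haversine) distance as a stand-in for network proximity. The region names and coordinates are made up for illustration. Real GSLB services typically rely on IP geolocation or measured latency rather than raw geography.

```python
import math

# Hypothetical regions of a second cloud with approximate (lat, lon) values.
REGIONS = {
    "cloud-b-us-east": (39.0, -77.5),
    "cloud-b-eu-west": (53.3, -6.3),
    "cloud-b-ap-south": (19.1, 72.9),
}


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    earth_radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    return 2 * earth_radius_km * math.asin(math.sqrt(a))


def closest_region(lat: float, lon: float, regions=REGIONS) -> str:
    """Return the name of the region geographically nearest to the point."""
    return min(regions, key=lambda name: haversine_km(lat, lon, *regions[name]))


if __name__ == "__main__":
    # A client (or an existing deployment) located near Paris.
    print(closest_region(48.85, 2.35))
```

The same function works for both cases: pass a user's location to route a request, or pass the coordinates of your current deployment to choose the nearest region in the second cloud.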

A multi-cloud strategy has clear advantages, but it also creates specific challenges that organizations need to consider before defining their cloud strategy. Low cost and low latency are two major driving factors.

As multi-cloud strategies become more popular, it is important for decision-makers to understand the advantages and disadvantages and to adopt a best-of-breed approach.

Stay tuned for my next post on multi-cloud!

Multi-Cloud Cost and Performance Optimization Strategy was originally published in Walmart Global Tech Blog on Medium.