When I recently joined Endgame as an intern on the Quality Assurance (QA) team, I was tasked with building a reliable and scalable automated UI testing framework that integrates with our manual testing process. QA automation frameworks for front-end code are fraught with challenges. They have to handle frequent updates to the UI, and they rely heavily on all downstream systems working in sync. We also had to handle common issues with the browser automation tool “baked in” to our existing framework, and overcome brittleness in the application, where minor changes could potentially lead to system failure.

Building an automated UI testing framework required extensive research and collaboration. I sought out experienced guidance to determine the project approach, researched widely to survey the available tools, collaborated with teammates to determine framework requirements, and followed a structured implementation process to grade framework performance.

Ultimately, I built three versions of the framework, duplicated a set of tests across all three, and baked a different browser automation tool into each. The version built on the non-Selenium tool was the most performant. This blog post discusses the journey to building our automated UI testing framework, including lessons learned for others embarking on similar paths.

Automated UI Testing Frameworks: What They Are & Why They Matter

In simple terms, a software framework is a set of libraries and tools that allows the user to extend functionality without having to write everything from scratch. It provides users with a “shortcut” to developing an application. Essentially, our automated UI testing framework would contain commands to automate the browser, assertion libraries, and the structure necessary to write UI tests quickly for current and new UI features.

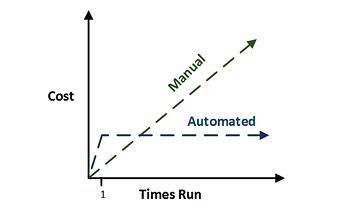

Easier said than done! Automated UI testing, from the point of view of Front End and QA engineers, is notoriously problematic. Of all the parts of an application, the UI is perhaps the one that changes most frequently. In an ideal world, we want our automated tests to reflect the chart results below:

Time and Cost Comparison for UI vs. Automated Testing

The chart shows how the cost of manual testing increases over time. Automated tests carry the expense of building, integrating, and maintaining the framework, but a well-built automation framework should remain stable even as an application scales.

Of course, there is no substitute for manually testing certain features of the UI. Our goal was not to replace all of our manual tests with automated ones. It is difficult to automate tests that simulate how a user might experience layout, positioning, and UI rendering in different browsers and screen sizes. In some cases, visually testing UI features is the only way to test with accuracy. Instead, we wanted to increase the efficiency of our UI tests by adding an automation framework that would run stably and consistently with minimal maintenance, and therefore at minimal cost.

Testing Strategy

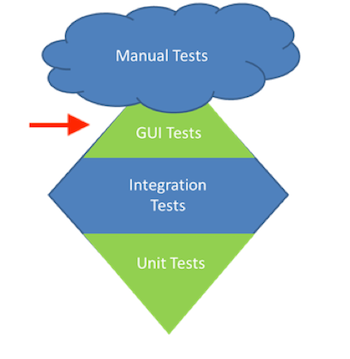

It takes an experienced understanding of software testing and QA to know how to achieve the right balance of manual, UI, integration (API), and unit tests. The testing diamond shown below represents part of our approach to raising our UI testing efficiency. Notice that the number of UI tests is significantly smaller than the number of integration tests.

Testing Diamond

In the early days of web development, an application’s UI was responsible for handling business logic and application functionality in addition to rendering the display. For example, the front end of an application would house SQL queries that would pull data from a database, do the work to format it and then hand it to the UI to render.

Today, most browser-based applications have isolated their business logic to a middle tier of microservices and/or code modules that do that heavy lifting. With that in mind, the Endgame QA Team has modeled its testing frameworks in a similar tiered manner. The bulk of the functional testing lives in our middle-tier test framework (written in Python) and exercises every API endpoint. Our UI testing framework, no longer burdened with verifying application functionality, can now focus on the UI specifically.

With the bulk of the functional tests being pushed down to different testing frameworks, the scope of the UI test framework shrank significantly. We further reduced the scope by removing the look and feel of the frontend from this testing framework. Is this button centered relative to that box? Is the text overrunning the page? Is the coloring here matching the coloring there? UI test cases can certainly test those things, but at what cost? Spinning up a browser (even a headless one), logging in and navigating to a page costs time. We have a seven-person manual feature regression testing team that interacts with the application every day and can spot such things more efficiently than any test case.

So, what will this UI testing framework test then? We decided to keep it focused on high level UI functionality that would prove that the major pages and page components were rendering and that the application was worthy of the next tier of testing. We would verify that:

- The user could log in through our application’s interface.

- The user could navigate to all of the base pages.

- The user could view components that displayed important data on each of the base pages.

- The user could navigate to sub pages inside any base pages.

These checks are smoke tests: they confirm that the most important features of the application work and determine whether the build is stable enough to continue with further testing. This approach reduces brittleness because this set of UI tests does not rely on all downstream systems working in sync. There are fewer tests to maintain, and since the tests are fairly straightforward, maintenance is manageable. With our test strategy in hand, we began the search for a browser automation tool.
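As a rough illustration of the gatekeeping idea, the sketch below models a smoke suite as a list of named checks and reports whether a build is stable enough for the next tier of testing. It is not our framework code: the `SmokeCheck` type, the `runSmokeSuite` function, and the stubbed checks are hypothetical names chosen for this example, and a real check would drive a browser rather than return a hard-coded value.

```typescript
// A smoke check is a named predicate. Names and shapes here are hypothetical.
type SmokeCheck = { name: string; run: () => boolean };
type SmokeResult = { stable: boolean; failed: string[] };

// Runs every check and collects the names of the ones that fail.
// The build counts as stable only when no check fails.
function runSmokeSuite(checks: SmokeCheck[]): SmokeResult {
  const failed: string[] = [];
  for (const check of checks) {
    let ok = false;
    try {
      ok = check.run();
    } catch {
      ok = false; // a thrown error counts as a failed check
    }
    if (!ok) failed.push(check.name);
  }
  return { stable: failed.length === 0, failed };
}

// Placeholder checks mirroring the four bullet points above.
// Each stub simply returns true; a real one would automate the browser.
const exampleChecks: SmokeCheck[] = [
  { name: "user can log in", run: () => true },
  { name: "user can reach all base pages", run: () => true },
  { name: "key components render on base pages", run: () => true },
  { name: "user can reach sub pages", run: () => true },
];

const exampleResult: SmokeResult = runSmokeSuite(exampleChecks);
```

With the stubs above, `exampleResult.stable` is `true` and `exampleResult.failed` is empty. A single failing check would flip `stable` to `false`, signaling that the build should not move on to manual testing.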

Eliciting Requirements

Collaboration played an important role in identifying the parameters and requirements to guide the design and build of our automated UI testing framework. Our abandoned, non-functional UI framework code served as my primary reference. I first met with its author to discuss pain points and to find out what else was needed to optimize our UI testing. I also regularly attended Front End stand-up meetings to share my progress and field questions. Through these discussions it became clear that the end-to-end approach and the way Selenium worked were the major pain points. Now that I had an overall idea of the desired framework and the type of browser automation tool we needed, I began researching tools. I cast a wide net to select three of the most performant browser automation tools in the industry. I ultimately chose NightwatchJS, Cypress, and WebdriverIO.

NightwatchJS is a popular browser automation tool that wraps Selenium and its WebDriver API with its own commands. NightwatchJS includes several favorable capabilities, including automatic management of Selenium sessions, shorter syntax, and support for headless testing. NightwatchJS was also recommended by several colleagues and was an obvious starting point.

In selecting the second tool, I researched current advances in UI testing by tapping into my networks. I pinged front-end developers and QA engineers on various Slack channels. Many of them advised against Selenium due to difficulties managing browser-specific drivers and setting up and tearing down Selenium servers. Engineers in my networks recommended a new browser automation tool, Cypress, built completely on JavaScript using its own browser automation, not Selenium. Cypress was gaining buzz as an all-in-one JavaScript browser automation tool.

To select the final tool, I consulted with our FE team lead. He asked me to build a framework using WebdriverIO. WebdriverIO is currently the most popular and widely used browser automation tool for automated UI testing.

The “Bake-Off”

To measure the framework’s performance as well as that of the browser automation tool baked into it, I built three versions. Each version contained a duplicate set of smoke tests that verified components on the login page, login functionality, navigation to all base pages, and components on each of our major functional pages.

To build each version, I used a structured process that consisted of the following steps:

- Get the browser automation tool up and running by navigating to Google and verifying the page title.

- Write test cases for each base page. At this stage, all tests and browser commands were written in the same file.

- Execute the red/green refactor cycle, a process in which tests are run and rewritten until they pass.

- Decouple the browser commands from the test code. Our framework architecture replicates the component-based structure of our front-end codebase. Browser commands for each component were written into their own component helper file, duplicating the organization of our UI components. Organizing files in this way allows us to spend minimal time figuring out where to write tests and browser commands.

- Request code reviews from our QA and FE teams and refactor framework code based on their feedback.
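The decoupling step in the list above can be sketched roughly as follows. This is an illustration rather than our actual framework code: the `Browser` interface, the `FakeBrowser` stand-in, the `LoginHelper` class, and every selector and path are hypothetical, and the commands are synchronous only to keep the example short (real browser automation tools are asynchronous or use their own command queue). The point is that selectors and raw browser commands live in a component helper, while the test reads as intent.

```typescript
// Minimal browser abstraction. A real tool (Cypress, WebdriverIO, NightwatchJS)
// would sit behind something like this. All names here are hypothetical.
interface Browser {
  visit(path: string): void;
  type(selector: string, text: string): void;
  click(selector: string): void;
  isVisible(selector: string): boolean;
  currentPath(): string;
}

// In-memory stand-in so the sketch runs without a real browser.
class FakeBrowser implements Browser {
  private path = "/";
  private fields = new Map<string, string>();

  visit(path: string): void { this.path = path; }
  type(selector: string, text: string): void { this.fields.set(selector, text); }
  click(selector: string): void {
    // Pretend that submitting a filled-in login form redirects to the dashboard.
    if (selector === "#login-submit" && this.fields.get("#username") && this.fields.get("#password")) {
      this.path = "/dashboard";
    }
  }
  isVisible(selector: string): boolean {
    const visibleByPath: Record<string, string[]> = {
      "/login": ["#username", "#password", "#login-submit"],
      "/dashboard": ["#nav", "#summary-panel"],
    };
    return (visibleByPath[this.path] ?? []).includes(selector);
  }
  currentPath(): string { return this.path; }
}

// Component helper: owns the selectors and browser commands for the login component.
class LoginHelper {
  private browser: Browser;
  constructor(browser: Browser) { this.browser = browser; }

  open(): void { this.browser.visit("/login"); }
  logIn(user: string, password: string): void {
    this.browser.type("#username", user);
    this.browser.type("#password", password);
    this.browser.click("#login-submit");
  }
  formIsRendered(): boolean {
    return ["#username", "#password", "#login-submit"].every((s) => this.browser.isVisible(s));
  }
}

// Test code: no selectors or raw browser commands, only calls into the helper.
// Returns a list of failure messages; an empty list means the test passed.
function testLoginSmoke(browser: Browser, user: string, password: string): string[] {
  const failures: string[] = [];
  const login = new LoginHelper(browser);
  login.open();
  if (!login.formIsRendered()) failures.push("login form did not render");
  login.logIn(user, password);
  if (browser.currentPath() !== "/dashboard") failures.push("login did not reach the dashboard");
  return failures;
}
```

Calling `testLoginSmoke(new FakeBrowser(), "test-user", "test-password")` returns an empty list. If a selector changes, only the helper needs to be updated, which is the maintenance benefit the component-based layout is meant to provide.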

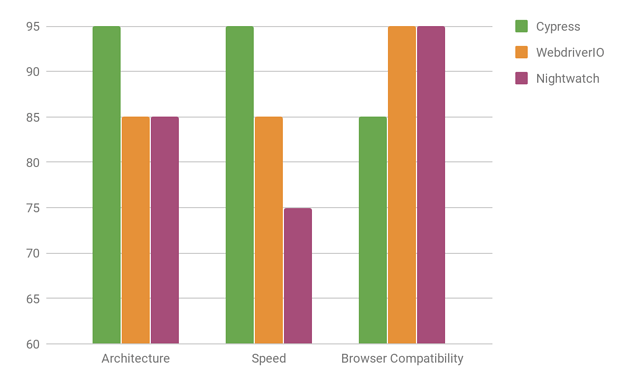

After each implementation, I graded the framework using categories informed by our testing strategy. Since the set of cases was limited to basic UI functionality, we focused on: architecture, speed, and browser compatibility.

Architecture was important to consider to ensure that the new framework would be compatible with our application and its surrounding infrastructure. Our FE team had experienced issues with Selenium, which automates the browser in a specific way. Both the FE and QA teams were open to exploring new automation tools that were architected in a different way.

Speed was paramount to framework performance. We want our tests to run efficiently and fast. To get an accurate measurement, each suite of tests was kicked off from the command line using the Linux time command. Many runs were captured, and their average was used for comparison.
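The averaging step can be sketched as below. This is a minimal example, not the script used for the bake-off: it assumes the output format of the bash `time` keyword (a line such as `real 0m12.345s`), and the function names `parseRealSeconds` and `averageSeconds` are hypothetical.

```typescript
// Extracts the wall-clock ("real") duration in seconds from one captured run.
// Assumes bash-style output, e.g. "real\t0m12.345s" on its own line.
function parseRealSeconds(timeOutput: string): number {
  const match = /^real\s+(\d+)m(\d+(?:\.\d+)?)s$/m.exec(timeOutput);
  if (match === null) throw new Error("no 'real' line found in time output");
  return Number(match[1]) * 60 + Number(match[2]);
}

// Averages the wall-clock time across several captured runs.
function averageSeconds(runs: string[]): number {
  if (runs.length === 0) throw new Error("need at least one run");
  const total = runs.map(parseRealSeconds).reduce((sum, s) => sum + s, 0);
  return total / runs.length;
}
```

Each element passed to `averageSeconds` would be the captured output of one timed run of a test suite. Comparing the resulting averages across the three framework versions gives a like-for-like speed figure.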

We included browser compatibility as a category mainly so that I could share my findings in discussions with the FE team. However, the set of tests we implemented did not rely on it. Users should be able to navigate through base and sub pages regardless of browser type. Since Cypress is still new to the industry, WebdriverIO and NightwatchJS scored higher in this category. Fortunately, enthusiastic support for Cypress has encouraged its developers to put a roadmap in place to extend support to all major browsers. In the meantime, we plan on continuing our manual testing process to evaluate our UI’s cross-browser functionality.

For each of these categories, a framework version and its browser tool received one point if it scored poorly, two points if it scored well, and three points if it scored excellently.
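The point scheme is simple enough to express in a few lines. The sketch below is illustrative only: the type and function names are hypothetical, and the ratings in `placeholderRatings` are made-up values for demonstration, not the scores from the bake-off.

```typescript
// The three grades used in the rubric and the points each one earns.
type Rating = "poor" | "well" | "excellent";
const POINTS: Record<Rating, number> = { poor: 1, well: 2, excellent: 3 };

type CategoryRating = { category: string; rating: Rating };

// Sums the points a framework version earned across all graded categories.
function totalScore(ratings: CategoryRating[]): number {
  return ratings.reduce((sum, r) => sum + POINTS[r.rating], 0);
}

// Placeholder input for a hypothetical tool. These are NOT real results.
const placeholderRatings: CategoryRating[] = [
  { category: "architecture", rating: "excellent" },
  { category: "speed", rating: "well" },
  { category: "browser compatibility", rating: "poor" },
];

const placeholderTotal: number = totalScore(placeholderRatings); // 3 + 2 + 1 = 6
```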

I concluded that browser automation tools that did not use Selenium (Cypress) outperformed Selenium-based tools (WebdriverIO & Nightwatch) in the following categories: architecture and speed.

See the Summary Comparison Chart below for a snapshot of my findings.

Summary Comparison Chart

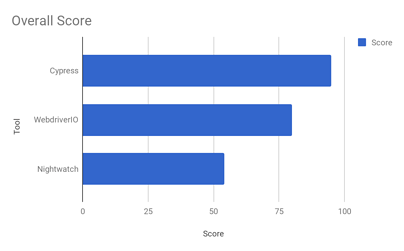

In addition, Cypress scored highest for setup time and offers built-in and additional features that innovate UI testing. See the chart below for the overall score across a broader range of categories.

Overall Scoring

After analyzing the scores, I confidently recommended the version of the framework which used Cypress since it stood head and shoulders above the rest in almost all of the categories.

Lessons Learned

I conducted headless tests towards the end of the project for versions of our framework which used WebdriverIO and NightwatchJS. I ran into SSL certificate issues and was unable to redirect the automated browser to our login page. In hindsight, I would have preferred to run headed and headless tests together to solve SSL certificate issues concurrently.

In addition, I learned how a tool like Selenium automates the browser. Every time a Selenium command such as click is run, an HTTP request is created and sent to a browser driver. Selenium uses WebDriver to communicate the click command to a browser-specific driver. WebDriver is a set of APIs responsible for establishing that communication with a browser-specific driver, such as geckodriver for Firefox. Geckodriver runs its own HTTP server to receive these HTTP requests. The HTTP server determines the steps needed to run the click command, and those steps are executed in the browser. The execution status is sent back to the HTTP server, which then returns the status to the automation script.
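To make that round trip more concrete, the sketch below builds (but does not send) the HTTP request that a click command turns into. It assumes the W3C WebDriver protocol, where clicking an element is a POST to `/session/{session id}/element/{element id}/click` with an empty JSON object as the body. The function name, the session and element IDs, and the driver address are placeholders for illustration.

```typescript
// Shape of the request a WebDriver client sends to the browser driver.
type WebDriverRequest = { method: "POST"; url: string; body: string };

// Builds the click request for an element that has already been located.
// driverUrl is wherever the browser-specific driver (e.g. geckodriver) is listening.
function buildClickRequest(driverUrl: string, sessionId: string, elementId: string): WebDriverRequest {
  const base = driverUrl.replace(/\/+$/, ""); // drop any trailing slash
  return {
    method: "POST",
    url: `${base}/session/${encodeURIComponent(sessionId)}/element/${encodeURIComponent(elementId)}/click`,
    body: JSON.stringify({}), // the click command takes no parameters
  };
}

// Placeholder values; real IDs are issued by the driver when a session starts
// and when an element is found.
const exampleClick: WebDriverRequest = buildClickRequest("http://127.0.0.1:4444/", "session-123", "element-456");
```

Here `exampleClick.url` is `http://127.0.0.1:4444/session/session-123/element/element-456/click`. Every command in a Selenium-based test incurs a request and response like this one, which is part of why the architecture affects both speed and setup complexity.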

Lastly, I learned about innovations in browser automation. For instance, Cypress uses a Node.js server process to constantly communicate, synchronize, and perform tasks. The Node server intercepts all communications between the domain under test and its browser. The site under test runs inside an iframe that Cypress controls. The test code executes alongside the iframed code, which allows Cypress to control the entire automation process from top to bottom and puts it in the unique position of being able to understand everything happening inside and outside of the browser.

Conclusion

Building an automated UI testing framework can make your UI testing scalable, reliable, efficient, cost-effective, and less brittle. Doing so requires casting a wide net during the research process, collaborating with teammates to determine needs, obtaining experienced guidance, building a few versions using recommended browser automation tools, and assessing each version using thoughtful categories. This cyclical process can be applied when building other frameworks.

Through this process, I determined that the version of our framework which used a non-Selenium based tool (Cypress) was the most performant. Of the Selenium-based tools, the one that customized its API to improve on Selenium (WebdriverIO) outperformed its counterpart (NightwatchJS). While we still perform manual UI tests across various browsers, our automated UI testing framework provides a means for scalable, efficient, and robust testing. With the new framework in place, when the FE team deploys a new build, the automated UI tests will determine whether the build is functional enough for our manual testers to begin testing, which increases our UI testing efficiency.

Article Link: https://www.endgame.com/blog/technical-blog/how-we-built-our-automated-ui-testing-framework